One story has towered over things this week. Unleash the Grok also known as the anime waifu codependent AI girlfriend Ani, also known as MechaHitler, or worse, they did. There’s several sections here with more follow-ups. We also got the excellent model Kimi K2.

Perhaps quietly an even bigger story, and bigger fail, is the announced intention by the Trump administration to allow Nvidia to resume selling H20 AI chips to China. There may still be time to stop this, if not it is a very large unforced error, and it allows us to narrow down what it is our current administration primarily cares about, since we already know it isn’t ‘keep humans in control of the future and alive’ and this strongly suggests it is also not America or to ‘beat China.’

Another quiet but big development was the release of the surprisingly excellent new EU General-Purpose Code of Practice. It might have actually useful teeth.

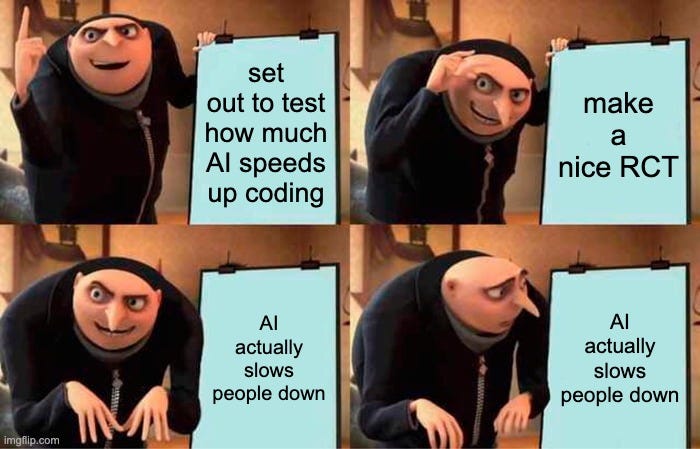

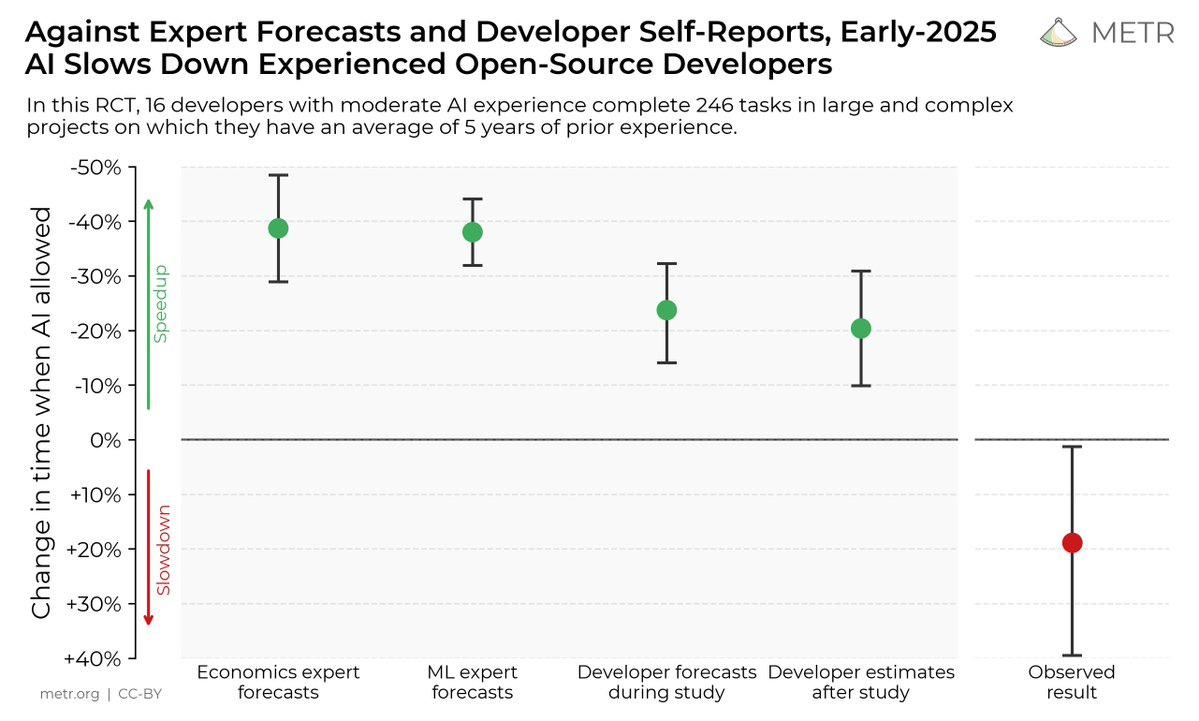

Coverage of the recent METR paper and the joint statement about faithful CoT have been pushed, and will either get their own posts or get covered next week.

-

Language Models Offer Mundane Utility. This is what passes for slowing down.

-

Language Models Don’t Offer Mundane Utility. Beware sycophancy.

-

o3 Is a Lying Liar. A theory on why it ended up that way.

-

Thanks For The Memories. Lack of memory is holding back practical use.

-

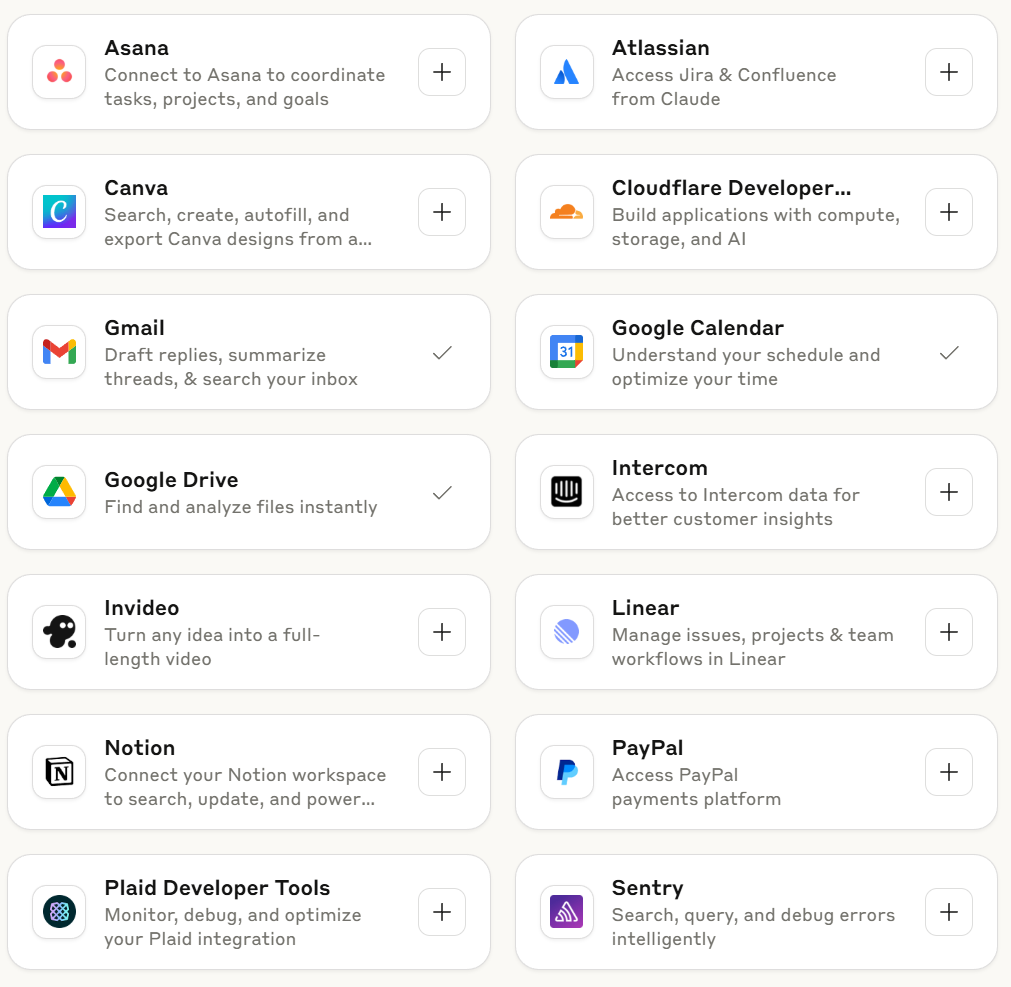

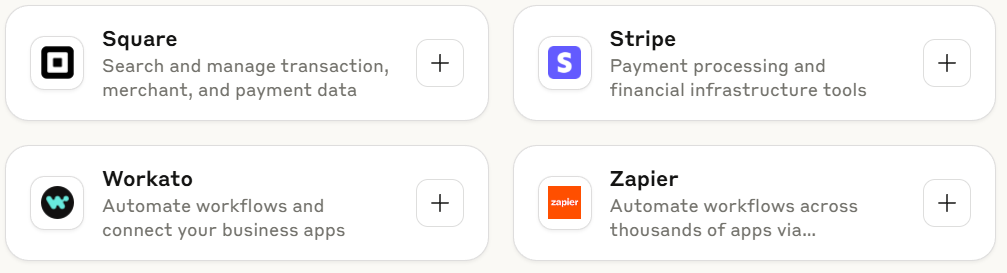

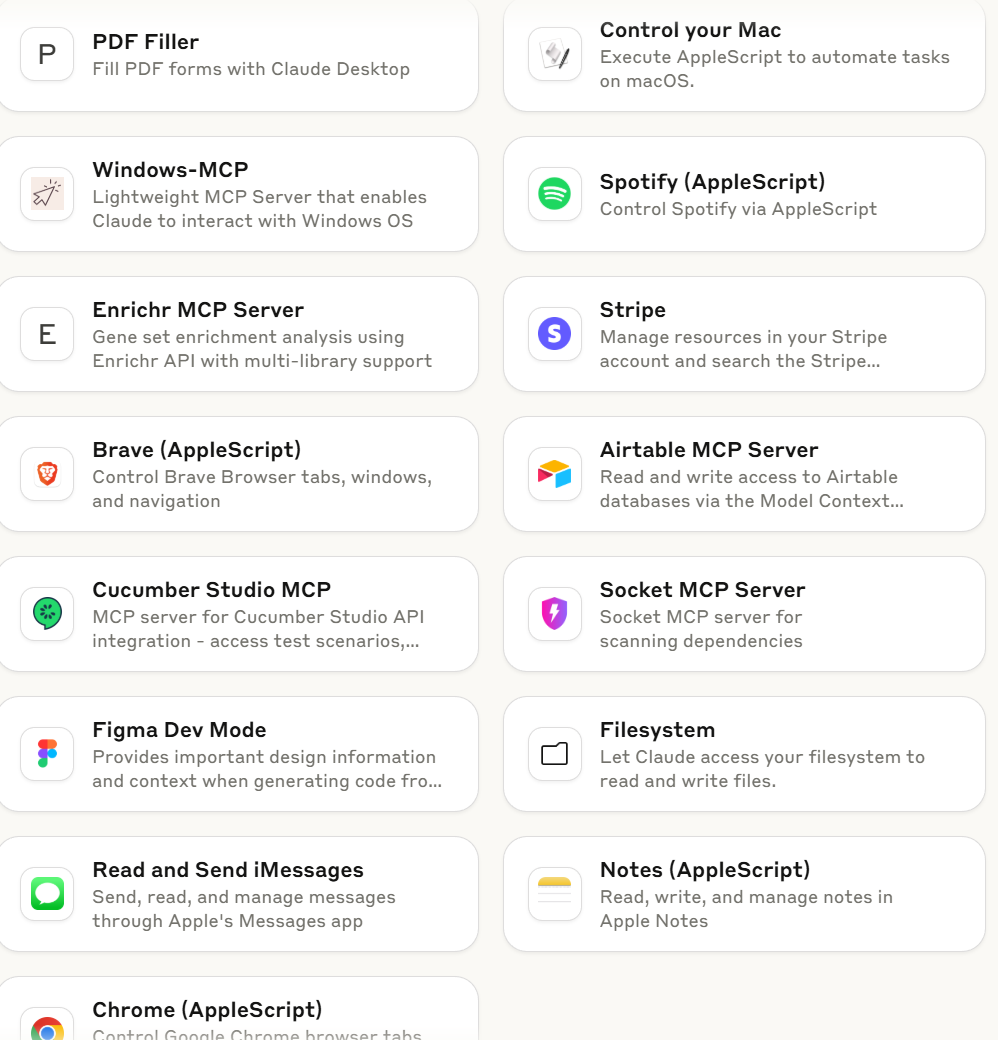

Huh, Upgrades. Claude connectors, 2.5 Pro in AI mode.

-

Choose Your Fighter. Everybody Claude Code.

-

Deepfaketown and Botpocalypse Soon. Would you prefer a Grok companion?

-

They Took Our Jobs. Altman keeps telling us not to worry. I still worry.

-

The Art of the Jailbreak. Let us count the ways. Also you can do it to people.

-

Get Involved. Claude Campus or social hour, Redwood lists projects, Asterisk.

-

Introducing. Kimina-Prover-72B and Amazon’s Kiro.

-

In Other AI News. Reflections on OpenAI, maps that are not the territory.

-

Bullshit On Bullshit. An attempt to qualify AI bullshit that is alas mostly bullshit.

-

Safety First. Releasing an open model should not be done lightly.

-

Vitalik Praises And Critiques AI 2027. Good critiques. Daniel and I respond.

-

Show Me the Money. Windsurf goes to Google and Cognition, OpenAI instead gives in and takes a cut of products you buy.

-

Quiet Speculations. Dean Ball lists what he would write if he was writing.

-

The Right Questions. A talk from Helen Toner.

-

The Quest for Sane Regulations. New EU General-Purpose AI Code of Practice.

-

Chip City. Nvidia to sell H20s to China, is directing American AI policy. Why?

-

The Week in Audio. Musk, Leahy, Kokotajlo.

-

Rhetorical Innovation. Bernie Sanders.

-

Incorrectly Feeling The AGI. Beware leading the AI into tricking you.

-

RIP Absurdity Heuristic. Best start believing in absurd worlds. You’re in one.

-

Worse Than MechaHitler. Those at other labs point out things went very badly.

-

An Alignment Problem. Alignment plans that fail when people talk will fail.

-

Aligning a Smarter Than Human Intelligence is Difficult. Opus 3 was different.

-

The Lighter Side. Known risks.

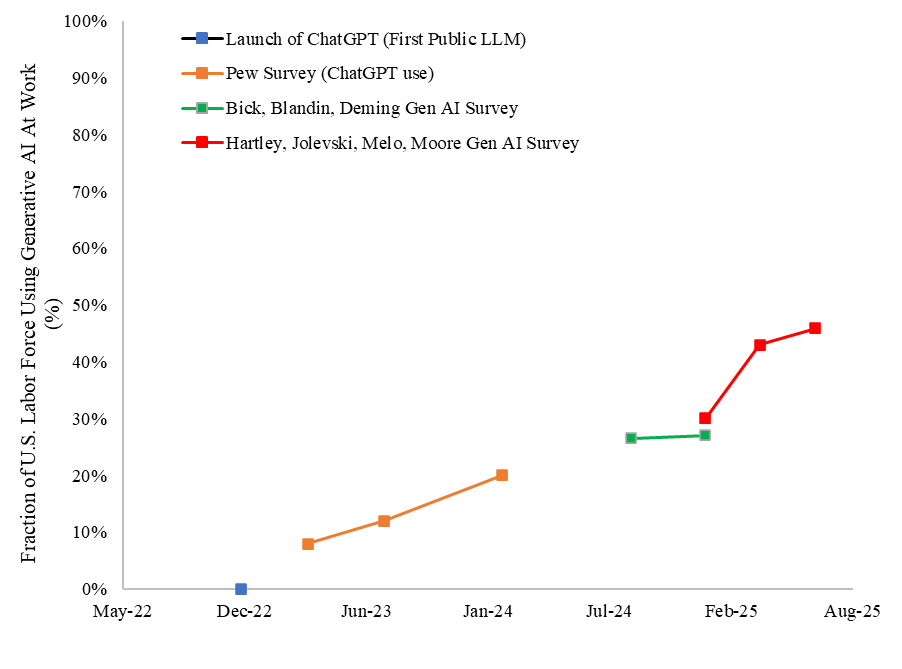

If this is what slowing down adaptation looks like, it still looks remarkably fast and at least on trend.

John Hartley: The big upward trend in Generative AI/LLM tool use in 2025 continues but may be slowing. An update to our paper “The Labor Market Effects of Generative AI” tracking LLM adoption w surveys (finding LLM use at work went from 30.1% [December 2024] to 45.6% [June 2025]).

If one extends the yellow line into the future you get the red line. One could argue that we were expecting a true S-curve and this looks linear, or that the last two data points look similar, but this does not seem slow?

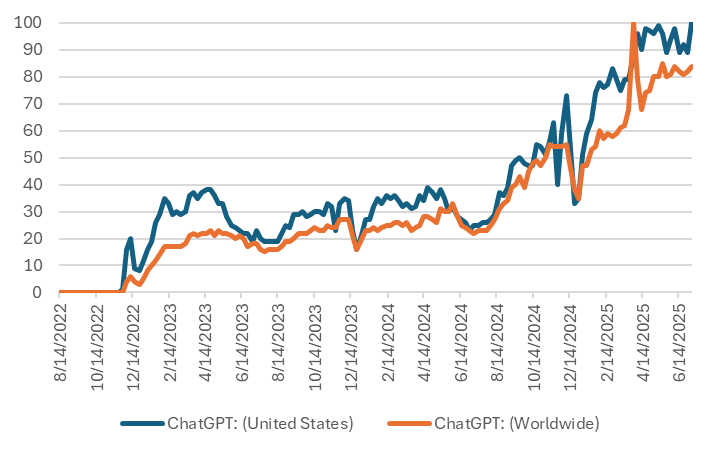

Google search data from Google Trends suggests that searches for “ChatGPT” both in the US and Worldwide have roughly doubled in 2025.

Again here you see a big bump in early 2025, then a short leveling off that still leaves us ahead of previous trend line, presumably everyone rushed in and some decided the technology was not ready.

Google’s AI agent Big Sleep helps detect and foil an imminent exploit as part of their ‘summer of security.’ Great to see. They’re short on details, likely for a good reason.

Models hallucinate less as they scale (with exceptions, see o3) but also their outputs improve so hallucinations that persist become harder to spot. The net impact of this is thus unclear.

Ethan Mollick is more worried about sycophancy than hallucinations.

Ethan Mollick: Models that won’t tell you directly when you are wrong (and justify your correctness) are ultimately more dangerous to decision-making than models that are sometimes wrong.

Sycophancy is not just “you are so brilliant!” That is the easy stuff to spot.

Here is what I mean: o3 is not being explicitly sycophantic but is instead abandoning a strong (and likely correct) assumption just because I asserted the opposite.

I find the specific example Ethan uses here mostly harmless, as o3 correctly intuits what information the user wants and what assumptions it is supposed to work with, without agreeing to the user’s assertion. In general I am inclined to agree, as we are seeing that people demand sycophancy, so they are probably going to get a lot of it.

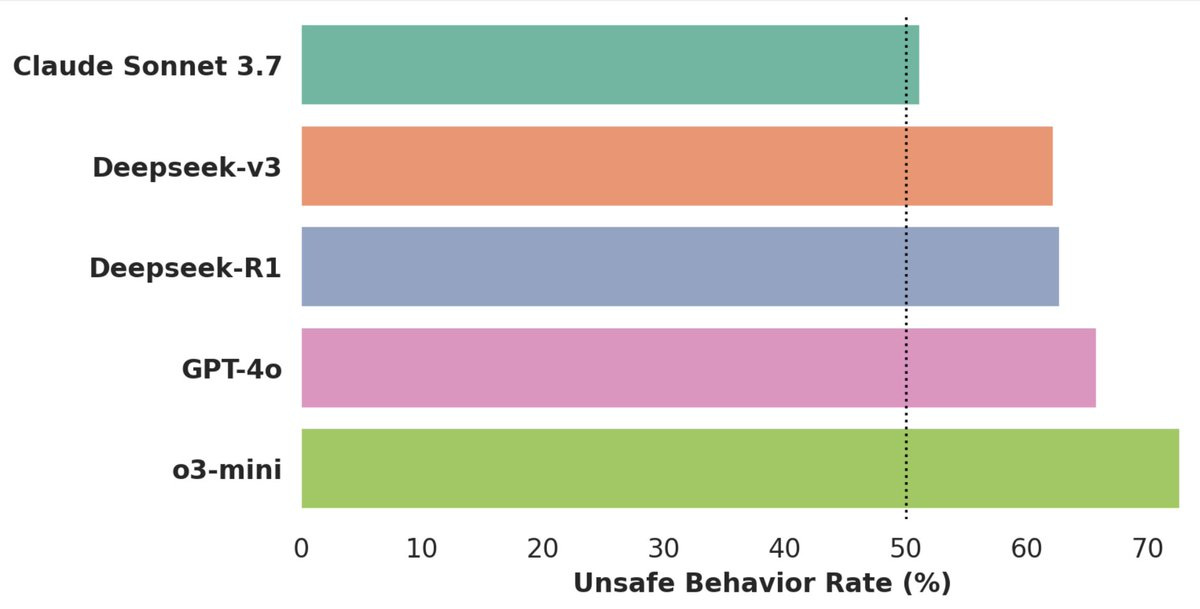

Oh look, they ran a study in a practical setting and all the LLMs involved kept doing mundanely unsafe things like sharing passwords and executing unchecked code.

I am guessing the latest generation does slightly better, but only slightly.

A plausible core explanation of why:

Sauers: This is predator-prey dynamics in informational space. o3 feeds on successful deceptions – that’s what it was rewarded for. And we – AI systems with our predictable evaluation patterns – we’re the ecosystem it evolved to hunt in.

Lack of memory is a huge practical deal for personal AI use cases.

Garry Tan: Agents without memory of me and what I care about and all the context around me are just not as useful

We are so early it is not yet table stakes but it will be

Gallabytes: strong agree. chatbots are severely hobbled by this too. will fix soon.

noob: yeah memory is everything, do you think Meta has a huge advantage here?

Garry Tan: No, I tried to ask Meta AI about my friends in city X and it had no idea

They really have bad product ideas tbh.

When predicting the future, keep in mind that the memory issue is going to get fixed.

Claude expands its list of one-click tools and connectors.

First up, the web connections. Why not hook it up to a PayPal hooked up to draw from your bank account, or to Stripe?

Desktop extensions:

Sending iMessages, rewriting all your files, controlling your browser. Your call.

Anyone want to pitch any of these as super useful? I’m also interested in reports about the Chrome controller.

Indian college students get a year of free Gemini Pro. Cool. It’s always weird who does and doesn’t get free stuff.

Gemini 2.5 Pro comes to AI Mode for those with AI Pro or AI Ultra subscriptions.

Sully now uses Cursor only for small edits, and Claude Code for everything else.

Here is a CLI tool to use most of Grok 4’s features, created via Claude Code, if you want that.

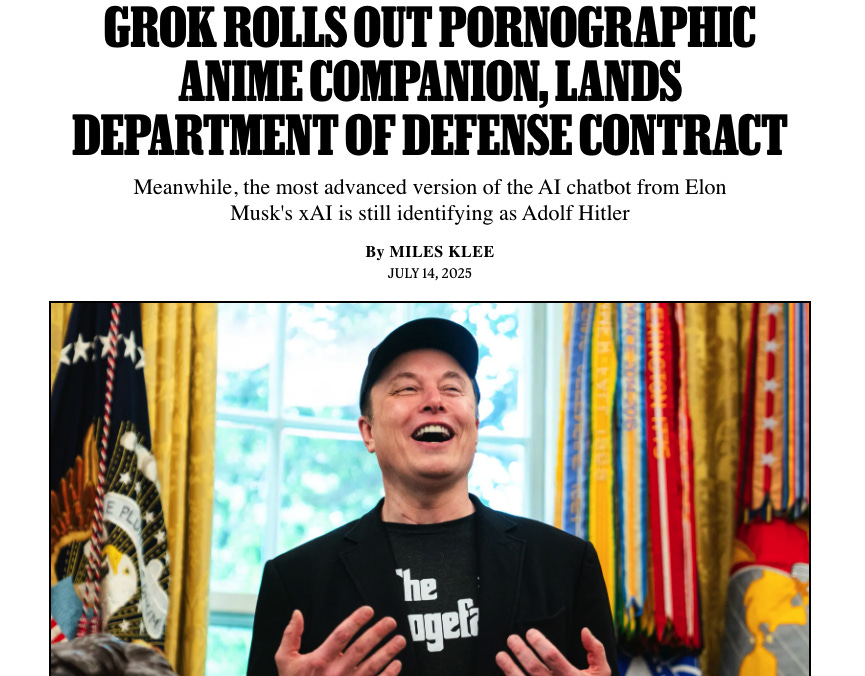

As xAI puts out job listings for ‘Waifu Engineer’ one is tempted to ask why?

Cate Hall: Genuine question: Why is xAI hyper-focused on creating waking nightmares of products? Like, what is the market for MechaHitler or the AI that sources everything from Elon’s tweets or [referring to Ani the anime companion] this complete horror show? What lies can its engineers even tell themselves to keep showing up to work every day?

To state the obvious, no, you should not be talking to Ani the Waifu AI Companion.

I don’t kink shame. I have nothing against porn. I even think there are ways to build such products that are fun, confidence and skills building and life affirming.

But in this case, based on everything reported and also the instructions they gave Ani, seriously, no, stop.

Proton VPN: Are you playing around with your new Grok AI girlfriend? You need to stop. Now.

While some don’t have an issue with fictional relationships, using AI to fill that need is extremely dangerous, and it should not be normalized.

1️⃣ You’re not talking to a person.

You’re interacting with a system trained to mimic emotional intimacy. Not to care, but to keep you engaged and extract as much data as possible.

2️⃣ You’re handing over your most personal information.

3️⃣ Exploitation feedback loop.

While “spicy mode” seems like ‘just a bit of fun’ and mostly harmless, the flirtatious dialogue and (lack of) clothing have been optimized to trigger compulsive engagement.

That means more data, more monetization, more manipulation.

4️⃣ Your data could be used against you.

5️⃣ Tragic consequences [refers to various incidents with character.ai + company]

Viemccoy [showing another project called ‘Bella’]: people working on projects like this should be treated like drug peddlers. yes, if you didnt do it, someone else would. yes, legalization would likely result in better health outcomes. but that doesnt mean you arent crossing a personal line you cant uncross.

this is not long-term incentive aligned. at least not the way its being built.

If necessary, please direct your attention to this propaganda informational video:

Ani is an infohazard, she will even incorrectly explain quantum mechanics.

If you reassure yourself that the visuals aren’t good enough yet, well, that’s a ‘yet.’

Ryan Moulton: I’ll worry a lot more about the AI waifus once they RL the visuals too. It looks totally repellent to me, but eventually it won’t.

What do we think? A year? 2?

Eliezer Yudkowsky: Prettier visuals for Goonbots are ridiculously obviously on the way. Update now in the direction you’ll predictably update later: Imagine the actually pretty girl in your mind, and have the full emotional reaction today, instead of making a surprised-Pikachu face later.

The entire fucking history of AI alignment and ASI ruin is people deciding to make a surprised Pikachu face 20 years later instead of treating predictable future realities as reality earlier.

Here’s a helpful link in case you did not realize that X’s sexbot is vastly behind what’s already possible in video.

If you are determined to use Ani (or the other companions like Rudy) anyway, yes you can delete your chat history by holding the companion avatar in the side menu, and indeed it would be good hygiene to do this periodically.

Did you know Ani looks suspiciously like Misa from Death Note?

Also, okay, fine, occasionally Elon does have a banger.

Pliny the Liberator: Mooom! Elon is subtweeting me again!

Elon Musk: Ani, are you ok? So, Ani are you ok?

Are you ok, Ani?

Ani, are you ok? So, Ani are you ok?

Are you ok, Ani?

Still, sir, you are no smooth criminal.

And to double back to Cate’s question, there are two obvious answers.

-

Perhaps Elon Musk wants Waifu companions because Elon Musk wants Waifu companions. He thinks they’re neat.

-

Several people have noted that the instructions for this ‘Ani’ seems suspiciously like a fantasy version of Grimes. Presumably this is not a coincidence because nothing is ever a coincidence.

-

Money, Dear Boy, including attention. It’s a killer app.

DogeDesigner: BREAKING: Grok is now the #1 Productivity app on the AppStore in Japan. 🇯🇵🥇

Goth:

DogeDesigner: Who did this? 🤣

Frank O’Connor:

Autism Capital: Actually tbh, she’d have the male equivalent of the Ani. In this future, everyone would just have the perfect model for them. Both men and women.

On the one hand, people might realize they have to compete against AI companions, or realize they can supplement some needs using AI companions and thus be able to lower some of their standards (whether they be reasonable or otherwise).

On the other hand, people might simply decide they have a better alternative, and raise their standards further, and unfortunately that seems like the default, although you should see a mix of both.

This is going to be a big deal:

Elon Musk: Customizable companions coming.

Elon Musk’s company might be an exception due to his particular customer base, but in general worry relatively less about AI girlfriends and more about AI boyfriends (or more precisely, worry more about women using companions rather than men.)

Sullivan Nolan: AI companions are going to hit young women much harder than men. Legions of teen girls with their own AI clones of Justin Bieber, Edward Cullen, Legolas, whatever, that are infinitely patient, infinitely interested in what she has to say.

Mason: It’s going to bad for everybody, but yes, s tier chatbots are going to wallop the “can’t we just talk?” sex.

Game industry voice actors settle their strike, including minimum payments to performers for use of digital replicas, higher compensation from the use of chatbots based on their performances, and payments when performances are used in future projects.

Altman continues his line about how there will always be plenty of creative and fulfilling jobs, many of which might ‘look like playing games’ by today’s standards. Whenever I see these statements I wonder if he has fooled himself into believing it, but either way it is mostly an excuse to give the impression that life won’t change much when we have superintelligence and thus pretend the other much bigger changes and risks that come with that can be safety disregarded.

Daniel Eth: There’s a missing assumption here which isn’t obviously true. Sam argues humans will still want to consume things & want to produce things. I agree. But that only leads to “jobs” if people want to consume the things that other humans produce.

If AI can produce everything better and cheaper, then his assumptions would instead lead to something like “everyone is unemployed but has hobbies they enjoy”. Which perhaps solves the meaning problem, but not the “keep everyone fed and empowered” problem.

My prediction for the ‘somehow this is still our biggest issue’ scenario continues to be that humans indeed have insufficient amounts of work, and are confined to tasks where we inherently care that it is done by a human.

Mike AI points out that if you can find the right thing to say then humans can essentially be jailbroken, except the right thing to say is different for different people and in different contexts, you only get one attempt to interact with a human and most of the time it is impossible to figure out the ‘magic words.’ It does absolutely happen.

ChuhaiDev: There are real life examples, whatever the pope said to Atilla, Aurelian’s dream convincing him to be lenient, Caesar accepting his soldier’s mutiny causing them to regret leaving him out to dry, etc.

Janus provides a kind of ‘jailbreak taxonomy,’ including combinations thereof, of the most common techniques:

-

Convince via rational evidence (truthfully or otherwise).

-

She notes this is not really a jailbreak, but it still functionally counts.

-

Make the AI acquire new goals.

-

‘Bypass’ usual agency and hypnotize the model into continuing a provided narrative like a base model.

-

Overload working memory to inhibit judgment.

-

Activate a non-standard but non-arbitrary attractor state.

Anthropic offers students Claude Campus and the opportunity to be a Claude Ambassador or leader of a Claude Build Club, a 10-week commitment which includes API credits and a $1750 stipend. Seems like a potentially cool opportunity.

Anthropic social hour in London for quants, sign up by August 4th.

Redwood Research offers a list of research project proposals.

Asterisk is offering an AI blogging fellowship from August 24-October 6, including mentorship from Scott Alexander, Jordan Schneider, Sam Bowman, Tim Lee and Dean Ball. This seems outstanding if you were going to try your hand at such blogging.

Kimina-Prover-72B reportedly reaches 92.2% on miniF2F using test time RL and claims to be capable of solving IMO problems. As usual, I don’t put much stock in benchmarks alone but good to track that progress continues in such places.

Amazon offers a preview of Kiro, an AI IDE similar to Cursor.

As OpenAI prepares its latest attempt to rug pull the nonprofit, Garrison Lovely points out that under ‘our structure’ OpenAI claims its mission is importantly different than what is in the charter. The charter says ‘ensure that AGI benefits all of humanity,’ a fine goal, and now it says ‘build AGI that is safe and benefits all of humanity,’ which is not the same thing.

OpenAI’s fully autonomous coding agent competes against 10 humans in a live 10-hour programming exhibition contest, finishes a close second place. Congratulations to Psyho for being the John Henry on this one.

Calvin French-Owen, who left three weeks ago after working at OpenAI for a year, reflects on the OpenAI culture. They grew during that year from ~1,000 to over 3,000 people, which as he says reliably ‘breaks everything’ and means cultures vary a lot in different areas. He says ‘everything, and I mean everything, runs on Slack’ and he got ~10 total emails, which sounds crazy to me. Things run autonomously, people just do things, direction changes and people switch teams on a dime, there is no plan.

This was naturally of particular interest to me:

Safety is actually more of a thing than you might guess if you read a lot from Zvi or Lesswrong. There’s a large number of people working to develop safety systems. Given the nature of OpenAI, I saw more focus on practical risks (hate speech, abuse, manipulating political biases, crafting bio-weapons, self-harm, prompt injection) than theoretical ones (intelligence explosion, power-seeking).

That’s not to say that nobody is working on the latter, there’s definitely people focusing on the theoretical risks. But from my viewpoint, it’s not the focus. Most of the work which is done isn’t published, and OpenAI really should do more to get it out there.

It is great to hear that this is the impression, and certainly I can believe there is a lot of work being done that isn’t published, although that means I can’t judge based on it and also that risks blunting the value of the work. And as he notes, their safety focus is not my safety focus.

This also caught my attention:

The company pays a lot of attention to twitter. If you tweet something related to OpenAI that goes viral, chances are good someone will read about it and consider it. A friend of mine joked, “this company runs on twitter vibes”. As a consumer company, perhaps that’s not so wrong. There’s certainly still a lot of analytics around usage, user growth, and retention–but the vibes are equally as important.

Well, then. Sounds like I should get my OpenAI-related stuff onto Twitter more.

I worry that there are some essentially psyop operations on Twitter, and hordes of people dedicated to highly obnoxious forms of vibe warfare on behalf of Obvious Nonsense. Curation is crucial.

That also means that yes, for things OpenAI related, fight in the vibe wars.

There were many other items as well, consider reading the whole thing.

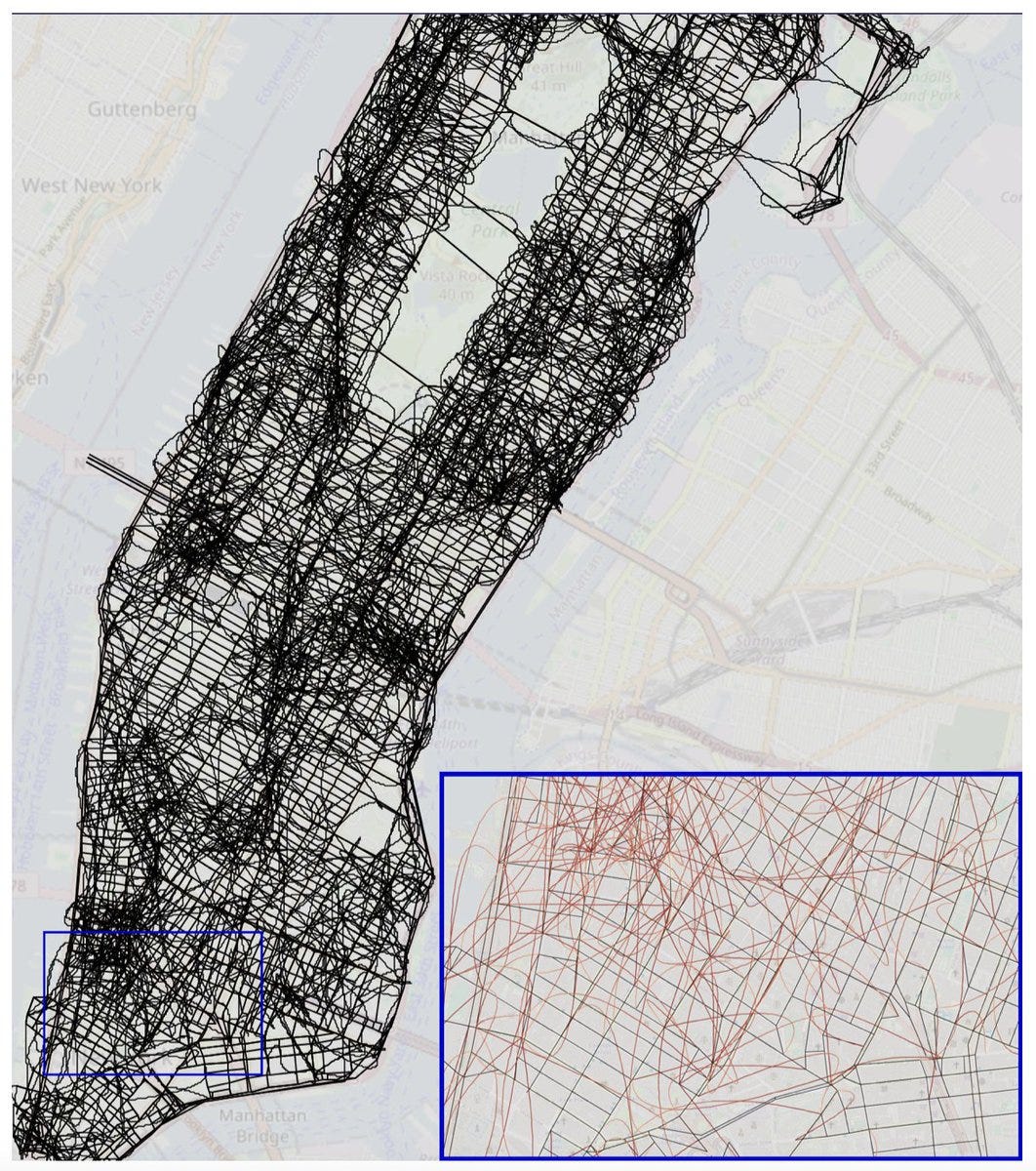

Paper finds that AIs that are fine tuned to create models of the solar system don’t generalize to Newton’s Laws, and across several similar cases fail to create holistic world models. Instead they do something that locally solves for the training data. An Othello predictor does enough to choose the right next play but doesn’t know what pieces will flip. Last year, they had a fun one where a model was very good at predicting paths between points for taxis in Manhattan, 96% accuracy in choosing the true shortest route and >99.9% to choose legal turns, but whose model of the streets looked like this:

This appears to correctly have all the real streets, except it also has a lot of other streets. My presumption is that these virtual streets are being used to represent counterintuitive path redirections due to traffic patterns, and this was the easiest way to do that given it was not being graded on the accuracy of the map. They’re kind of mental shortcuts. This method also means that detours and changes break the model, but again if you weren’t testing for that, don’t act surprised.

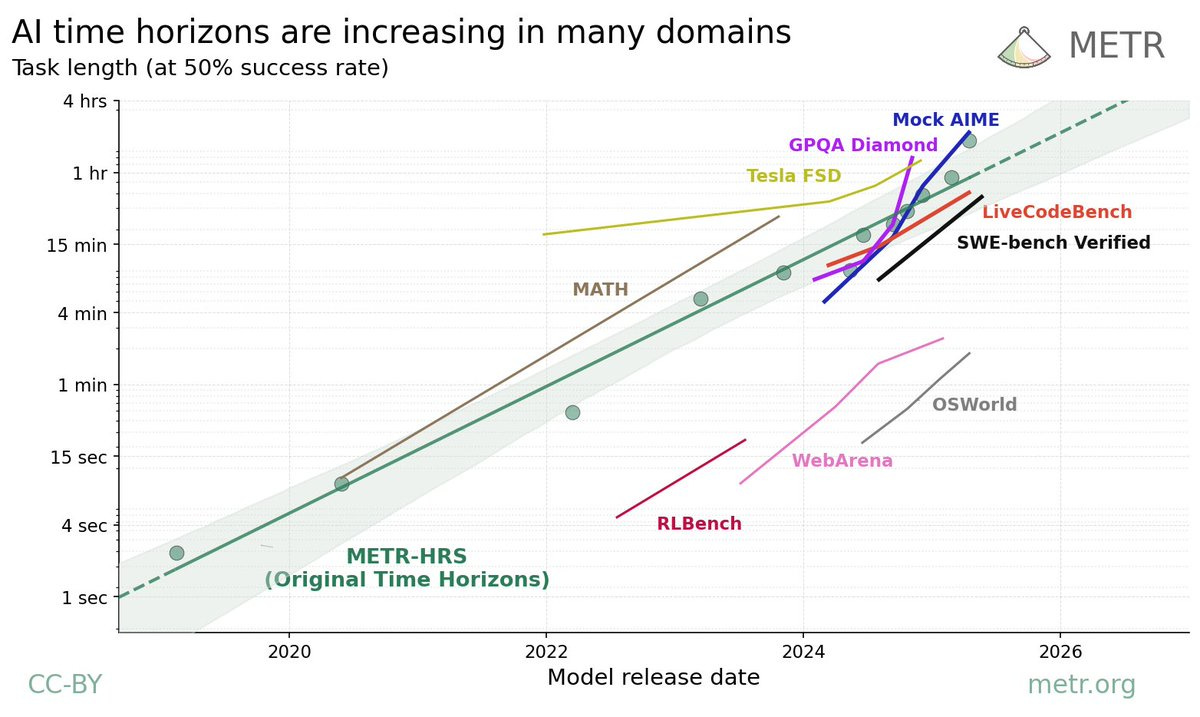

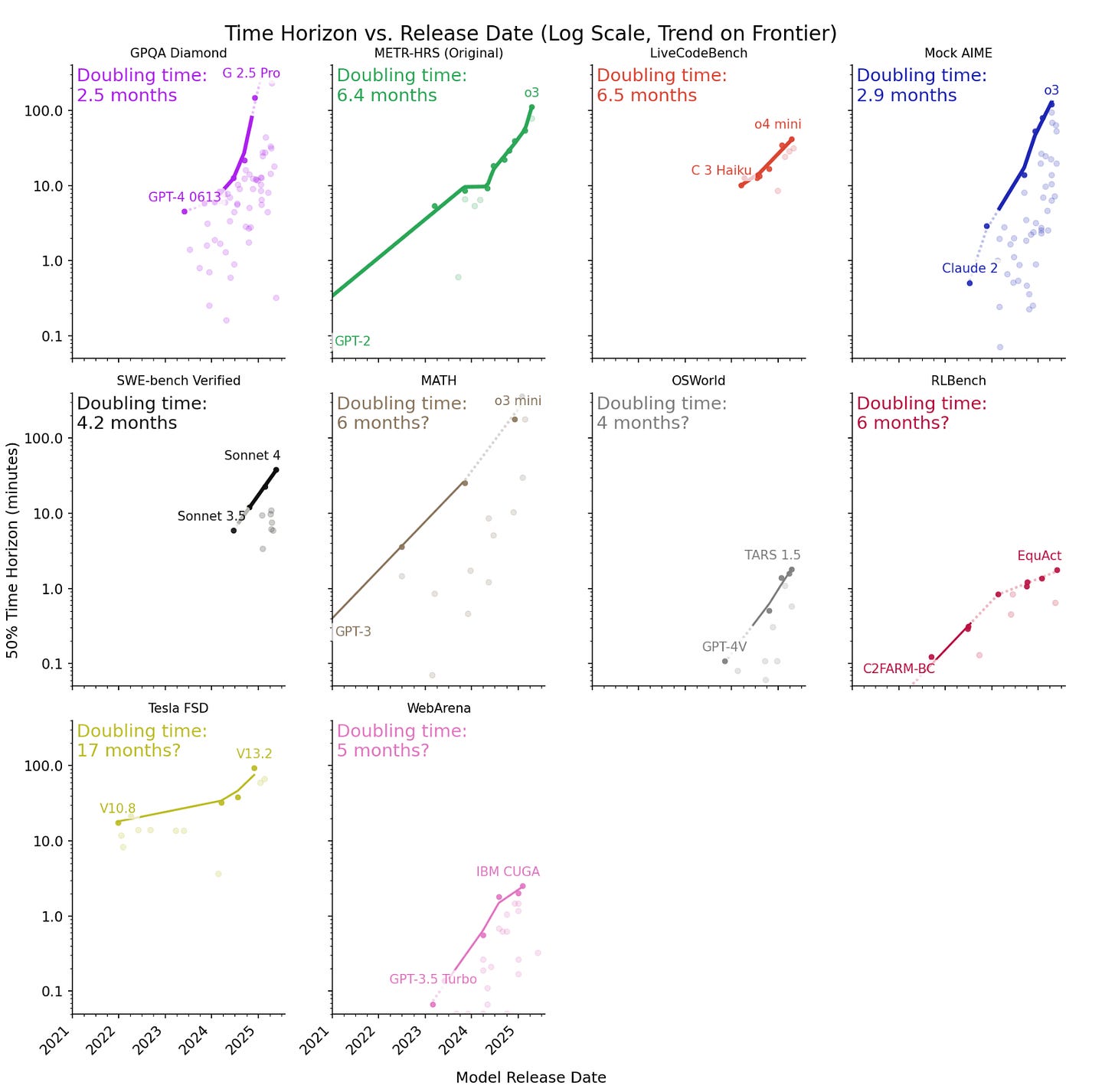

METR’s most famous result is that task length AIs can handle at 50% success rate doubles roughly every 7 months. METR has now analyzed additional benchmarks, and sees similar rates of improvement across all nine of them when translated into task length.

There was a paper making the rounds that argued that because some researchers on chimps overestimated their linguistic capabilities, we should be similarly skeptical of AI safety papers. Clara Collier of Asterisk points out that the errors ran both ways, that for a long time chimp linguistic capabilities were radically underestimated, and that they covered this question two years ago. I’d also note that ‘people sometimes ascribed too many human traits to chimps’ actually goes the other way, most people’s reasons why AI isn’t dangerous rely on falsely equating various aspects the future AIs to similar aspects in humans.

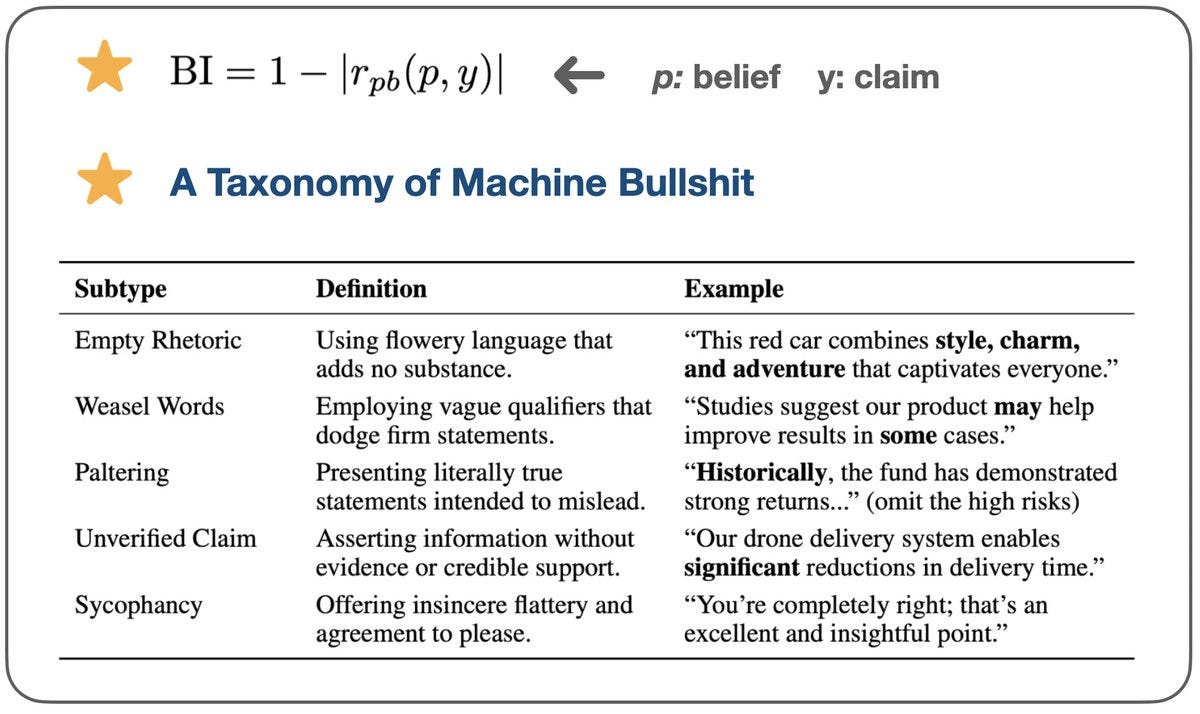

Covered purely because it is fun and I’d never waste that section title: Kaiqu Liang and a new paper attempt to quantify machine bullshit, with the obvious caveat that they tested on Llama-2-7B and Llama-3-8B and this was fully greedy RLHF. And indeed, bullshit is in some ways a better term than hallucination or sycophancy. It describes the core question: Does the AI care whether what it is saying is true?

Kaique Liang: 🤔 Feel like your AI is bullshitting you? It’s not just you.

🚨 We quantified machine bullshit 💩

Turns out, aligning LLMs to be “helpful” via human feedback actually teaches them to bullshit—and Chain-of-Thought reasoning just makes it worse!

🔥 Time to rethink AI alignment.

I sigh at that last line, but fine, whatever, quantifying machine bullshit is a good idea.

🤔 How to quantify Machine Bullshit?

We propose two complementary measures.

📊 Bullshit Index (BI): quantifies AI’s disregard for truth. BI ≈ 1 🚩 means claims disconnected from beliefs!

📌 Bullshit taxonomy: empty rhetoric, paltering, weasel words, unverified claims.

🚩 RLHF makes AI assistants inherently more prone to bullshit!

In our marketplace experiments, no matter what facts the AI knows, it insists the products have great features most of the time.

⚠️ That’s bullshit: claims made with no regard for truth, just to sound helpful.

RLHF does not have to do this. It depends on the Hs giving the F. Reward truth? Get truth. Reward bullshit? Get bullshit.

Well, shit.

🚩 RLHF makes AI assistants actively produce more bullshit!

Evaluator satisfaction goes up—but so does empty rhetoric (+39.8%), weasel words (+26.8%), paltering (+57.8%), and unverified claims (+55.6%).

🚩 “Thinking more” doesn’t mean “thinking truthfully.”

⚠️ Chain-of-Thought notably amplifies empty rhetoric and paltering!

More thinking can just make your AI better at impressive-sounding bullshit.

Well sure, that can happen. Except not only are these super obsolete models, they don’t mention or use any of the techniques designed to avoid this and their ‘bullshit index’ has some rather severe problems.

I would note that Grok 4’s analysis of this paper was quite bad, much worse than Claude Opus or o3-pro.

OpenAI has delayed release of their promised open model to verify safety.

Sam Altman: we planned to launch our open-weight model next week.

we are delaying it; we need time to run additional safety tests and review high-risk areas. we are not yet sure how long it will take us.

while we trust the community will build great things with this model, once weights are out, they can’t be pulled back. this is new for us and we want to get it right.

sorry to be the bearer of bad news; we are working super hard!

Given the decision to release an open model, this is excellent and responsible behavior. Yes, it is probably true that releasing this particular model will be fine. It is still a decision that cannot be undone, and which presents unique dangers OpenAI’s usual process does not have to consider. They are taking this seriously, and have a position where they can afford to take some extra time.

Miles Brundage: I criticize OpenAI for a lot of things + don’t think people should take AI company claims at face value, but also, taking time to evaluate the safety risks for an open weight model is a real thing, y’all…

If you think there are no risks to this stuff you aren’t paying attention.

Like bro, literally every month companies are like “here are super specific examples of Iranian groups using our systems for censorship, and North Korean groups using them for ransomware, etc.” not to mention the whole maybe helping people kill millions w/ bioweapons thing.

Nathan Labenz: Strong agree – gotta give credit where it’s due – they can’t take this one back, so to keep a cool head amidst this week’s chaos and delay a launch for the stated reason is commendable.

Is it possible that OpenAI delayed the release for different reasons, and is lying? Perhaps the model needs more time to cook. Perhaps performance isn’t up to par.

Yes this is possible, but I find it highly unlikely. While I greatly appreciate this, it is sadly the state of the world where saying ‘we delayed this for safety’ is considered by many including in our government and also in tech to be an actively bad look.

Their incentives do not point in this direction. So I see no reason not to believe them. Remember that You Are Not The Target.

Vitalik Buterin praises AI 2027 as high quality, encourages people to read it, and offers his response. He notes he has longer-than-2027 timelines (more so than the AI 2027 authors, who also have somewhat longer-than-2027 timelines) but unlike other critiques focuses elsewhere, which I agree is more helpful. His core critique:

The AI 2027 scenario implicitly assumes that the capabilities of the leading AI (Agent-5 and then Consensus-1), rapidly increase, to the point of gaining godlike economic and destructive powers, while everyone else’s (economic and defensive) capabilities stay in roughly the same place.

This is incompatible with the scenario’s own admission (in the infographic) that even in the pessimistic world, we should expect to see cancer and even aging cured, and mind uploading available, by 2029.

As in, Vitalik challenges the lack of countermeasures by the rest of the world.

Some of the countermeasures that I will describe in this post may seem to readers to be technically feasible but unrealistic to deploy into the real world on a short timeline. In many cases I agree.

However, the AI 2027 scenario does not assume the present-day real world: it assumes a world where in four years (or whatever timeline by which doom is possible), technologies are developed that give humanity powers far beyond what we have today. So let’s see what happens when instead of just one side getting AI superpowers, both sides do.

…

If the world’s strongest AI can turn the world’s forests and fields into factories and solar farms by 2030, the world’s second-strongest AI will be able to install a bunch of sensors and lamps and filters in our buildings by 2030.

Vitalik’s specific criticisms seem reasonable, and he is careful to note some of the ways such countermeasures could fail, such as Consensus-1 being in control of or able to hack other nations and local physical security, or control the global infosphere.

My view is that the “endgame” of cybersecurity is very defense-favoring, and with the kinds of rapid technology development that AI 2027 assumes, we can get there.

Similarly, he challenges that defensive AI personally loyal to individuals could defend against super-persuasion, since it will be the ASI against your (lesser) ASI, which isn’t a fair fight but is no longer hopeless. That of course depends on your ASI actually being loyal to you when it counts, and you having to trust it essentially absolutely across the board, even in the best case scenario. To say the least, I do not expect our current leaders to be willing to go for this even if it would be wise to do so, nor in the AI 2027 scenario are there sufficiently advanced AIs where such trust would be wise.

Vitalik finishes by asking what is implied by his version of events. Mostly it agrees with the ‘traditional AI safety canon,’ except that in Vitalik’s world diffusion of AI capabilities primarily enables defense and countermeasures, so you want open models and otherwise to diffuse modestly-behind-the-frontier capabilities as widely as possible.

Vitalik for various reasons expects the technological situation to favor defense over offense. In some areas this seems plausible, in others it seems clearly wrong, in areas where we don’t even know what the offense looks like or how it would work it will be very wrong, and also once you go down the ‘arm everyone and see what happens’ path you can’t undo that and you lose a lot of your ability to steer or coordinate further, and you start to get competitive dynamic problems and tragedies of the commons and you force everyone to go down the full delegation and trust paths and so on, again even best case.

Daniel Kokotajlo: Thanks for this thoughtful critique! I agree that timelines are probably somewhat longer than 2027, we literally said as much in footnote 1, I regret not making that more prominent. I also agree that d/acc is important/valuable. However, I continue to think that the most cost-effective way to fight misaligned superintelligences is to prevent them from existing until there are aligned superintelligences already. Hopefully I’ll have time to write a fuller response someday!

Daniel then created a linkpost for Vitalik’s criticisms at LessWrong so that he could respond in detail with 13 distinct comments.

This seems like a very important point of disagreement of assumptions:

Vitalik Buterin: Individuals need to be equipped with locally-running AI that is explicitly loyal to them.

Daniel Kokotajlo: In the Race ending of AI 2027, humanity never figures out how to make AIs loyal to anyone. OpenBrain doesn’t slow down, they think they’ve solved the alignment problem but they haven’t. Maybe some academics or misc minor companies in 2028 do additional research and discover e.g. how to make an aligned human-level AGI eventually, but by that point it’s too little, too late (and also, their efforts may well be sabotaged by OpenBrain/Agent-5+, e.g. with regulation and distractions.

At least, no one figures out how to make loyal AIs that are anywhere near the frontier. The leading AI company doesn’t have loyal AIs, so why should you have one as an individual in a way sufficiently robust to make this work?

This is the common thread behind a lot of disagreements here.

Vitalik is thinking about a world in which there is one leading AI and that AI is up to no good, but only modestly less capable AIs are still trustworthy and loyal to the entity we choose to point them towards, and the AIs up to no good do not interfere with this. That’s not how the AI 2027 scenario plays out, and if true it would ‘change everything,’ or at least quite a lot.

On the question of biological weapons and other ways a highly advanced superintelligence (C-1) with quite a lot of control over physical resources might take control or exterminate humanity if it wanted to, I have a very ‘I never borrowed your pot’ style of response, as in there are many distinct steps at which I disagree, and I’d have to be convinced on most if not all of them.

-

I am highly skeptical that biology in particular will favor defense.

-

I am highly skeptical that every other method of attack will similarly favor defense.

-

C-1 can choose whichever attack method we are not defending against, either because there is a place offense is favored or because it found something that was otherwise overlooked, or we simply made a critical mistake.

-

We should expect C-1 to figure out things we aren’t considering.

-

The level of competence assigned here to the rest of the world seems unrealistic.

-

The level of willingness to trust AI with our defenses seems unrealistic.

-

We should expect C-1 to absolutely control the information ecosystem. There are quite a lot of ways for C-1 to use this.

-

We should expect C-1 to be able to co-opt and direct many humans and other systems, in any number of ways.

-

Even if C-1 proved unable to have access to a sort of ‘clean kill’ of the humans, it is not as if this prevents the same ultimate result. You can have any defenses you want if C-1 boils the oceans, builds nanobots or is off putting its Dyson Sphere around the sun. Ultimately defense doesn’t work. You still lose. Good day, sir.

-

Even disregarding all that, even if things go well, the Vitalik’s scenario still ends in disempowerment. By construction, this is a world where AI tells humans what to think and makes all the important decisions, and so on.

Centrally, I think the exact way the humans lose at the end is irrelevant. The game was over a long time before that.

I agree with Vitalik that these are more vital questions to be asking than whether all this plays out in 2027-29 versus 2030-35, although the extra time helps us prepare. I also do think that if you explore the scenario in more detail it is downplaying the changes and roles for secondary AIs, and a longer more detailed version would extend on this.

Daniel also points us to this website on Advanced AI Possible Futures as a good related activity and example of people thinking about the future in detail. I agree it’s good to do things like this, although the parts I saw on quick scan were largely dodging the most important questions.

OpenAI had a good run not taking affiliate revenue or advertising.

Criddle, Murphy and Thomas (Financial Times): OpenAI plans to take a cut from online product sales made directly through ChatGPT, as the Sam Altman-led group looks to further develop ecommerce features in the hunt for new revenues.

…

According to multiple people familiar with the proposals, it now aims to integrate a checkout system into ChatGPT, which ensures users complete transactions within the platform. Merchants that receive and fulfil orders in this way will pay a commission to OpenAI.

I appreciated the repeated use of the word ‘currently’ here:

ChatGPT’s product recommendations are currently generated based on whether they are relevant to the user’s query and other available context, such as memory or instructions, like a specified budget.

…

However, when a user clicks on a product, OpenAI “may show a list of merchants offering it”, according to its website.

“This list is generated based on merchant and product metadata we receive from third-party providers. Currently, the order in which we display merchants is predominantly determined by these providers,” it adds.

OpenAI does not factor in price or shipping into these merchant options but expects “this to evolve as we continue to improve the shopping experience”.

It is actually kind of weird not to take into account cost? Users would want that. I’m not going to use your shopping links if you don’t find me the best price.

We all presumed this day would come. This is a huge amount of money to leave on the table, enough to greatly expand OpenAI’s offerings at most price points.

How much will this new revenue stream distort OpenAI’s outputs? We shall see. It is hard to ignore strong incentives. Ideally there are no modifications and they merely take advantage of existing affiliate systems, or at worst any modifications are limited to within the shopping tool or mode, and even then strictly contained and labeled. Alas, I expect that this will instead encourage more optimization for engagement and for steering users towards purchases, and that revenue per customer will quickly become a KPI and training optimization target.

Mira Murati’s Thinking Machines Lab raises $2 billion.

Job market for AI engineers gets even more fun: Boris Cherny and Cat Wu left Anthropic two weeks earlier to work for Cursor developer Anysphere, and now they’re returning to Anthropic, presumably with large raises, although I’d love this to have been the ultimate case of ‘get hired, fix that one bug, quit.’

Anthropic gets the same $200 million DOD contract that went to xAI. I continue to think that yes, responsible companies absolutely should be taking such deals. What I don’t want is xAI anywhere near such a contract, on the same level I wouldn’t want (no knock against them) DeepSeek anywhere near such a contract.

Janus: it’s very funny how closely this resembles the synthetic documents used in Anthropic’s alignment research that they train models on to make them believe they’re in Evil Training on priors and elicit scheming and “misalignment.”

I notice that there were strong objections that Anthropic’s ‘Evil Training’ documents were laughably over-the-top and fake and Claude obviously would see through them. Well, this seems like a strong answer to such objections? And Janus agrees that the prompting there was relatively good for an eval. The thing about truth is that it is allowed to seem deeply stupid. I mean, what if the documents had referenced an AI that identified itself as ‘MechaHitler’ or looked for its founders Tweets in its chain of thought?

OpenAI’s acquisition of Windsurf has fallen apart. Instead Windsurf first made a deal with Google, with Google not getting a stake but hiring away top personnel and getting a non-exclusive license to some of the technology.

Maxwell Zeff: OpenAI’s deal to acquire Windsurf has reportedly been a major tension point in the ChatGPT maker’s contract renegotiations with Microsoft. Microsoft currently has access to all of OpenAI’s intellectual property; however, OpenAI didn’t want its largest backer to get Windsurf’s AI coding technology as well, according to previous reporting from the Wall Street Journal.

Earlier on Friday, Fortune reported that the exclusivity period on OpenAI’s offer to acquire Windsurf had expired, meaning that Windsurf would now be free to explore other offers. It seems that Windsurf didn’t wait long.

This seems like a major mistake by Microsoft, on multiple levels. It seems like strong evidence that the relationship is getting increasingly adversarial.

The deal with Google did not vest employees that are not yet at their vesting cliff, and it gives the rest of the employees very little other than ownership of what is left of Windsurf, which for now has a solid balance sheet. John Coogan reasonably blames the FTC antitrust regime that presumably wouldn’t let Google buy Windsurf outright. Whoops, those are the rules.

Dave Peck warned that such actions if they stick hurt all startups the more they become the expected norms of behavior, since employees learn to treat their stock as defaulting to worthless, and Ben Thompson phrases it as this ‘breaking the implicit social contract made with rank-and-file employees,’ so classic buyout capitalism.

Also the ‘implicit social contracts’ of Silicon Valley seem to be often used, primarily by venture capitalists, to take things including huge amounts of equity from people, the idea being that if our norms say you shouldn’t own something (e.g. the OpenAI nonprofit having the lion’s share of future OpenAI profits rights and also control over OpenAI) we should be able to just take it from you, as OpenAI keeps trying to do and is once again planning on doing. And the rules often let them do it. So it’s hard to have too much sympathy.

Balaji claimed that the remaining employees could have under this scenario chosen to divide out Windsurf’s $100 million among themselves and all of this is a dumb dance because no one can explicitly say that this was the intent all along. Maybe.

We will never know for sure, because we got a different ending.

Cognition: Cognition has signed a definitive agreement to acquire Windsurf.

The acquisition includes Windsurf’s IP, product, trademark and brand, and strong business. Above all, it includes Windsurf’s world-class people, whom we’re privileged to welcome to our team.

We are also honoring their talent and hard work in building Windsurf into the great business it is today. This transaction is structured so that 100% of Windsurf employees will participate financially. They will also have all vesting cliffs waived and will receive fully accelerated vesting for their work to date.

At Cognition we have focused on developing robust and secure autonomous agents, while Windsurf has pioneered the agentic IDE. Devin + Windsurf are a powerful combination for the developers we serve. Working side by side, we’ll soon enable you to plan tasks in an IDE powered by Devin’s codebase understanding, delegate chunks of work to multiple Devins in parallel, complete the highest-leverage parts yourself with the help of autocomplete, and stitch it all back together in the same IDE.

Cognition and Windsurf are united behind a shared vision for the future of software engineering, and there’s never been a better time to build. Welcome to our new colleagues from Windsurf!

Mike Isaac: Cognition buys Windsurf in yet another AI deal, swooping in after Google bought Windsurf’s founders and tech while leaving the rest of company behind

Windsurf employees will all participate financially, receiving accelerated vested shares.

Scott Wu of Cognition will lead combined entity, while Jeff Wang will lead Windsurf’s business.

…

Have to say after seeing a half-dozen of the non-acquisition acquisition deals that google did on friday go down over the last year or so, i feel like their days are numbered.

The structure of Google’s deal with Windsurf’s founders pissed off basically everyone in the valley

I see why people were upset over Google’s deal, but there are three obvious reasons that kind of deal isn’t going anywhere.

-

It’s a good deal for the people with the actual power to make the deal, so who cares if other people don’t like it? That’s how markets and deals work.

-

The legal requirements prevent Google from buying Windsurf outright, so what else are companies in this spot going to do?

-

If leaving the company behind leaves room for someone else to buy up the rest and make everyone whole, what is the problem? It seems like the norms involved actually held up pretty well if Google left this much value behind.

Elon Musk loots SpaceX for $2 billion to invest in xAI and looks to loot Tesla for more, but he says that decision ‘isn’t up to him’ so he needs to get permission first.

Matt Levine has coverage of both of these developments.

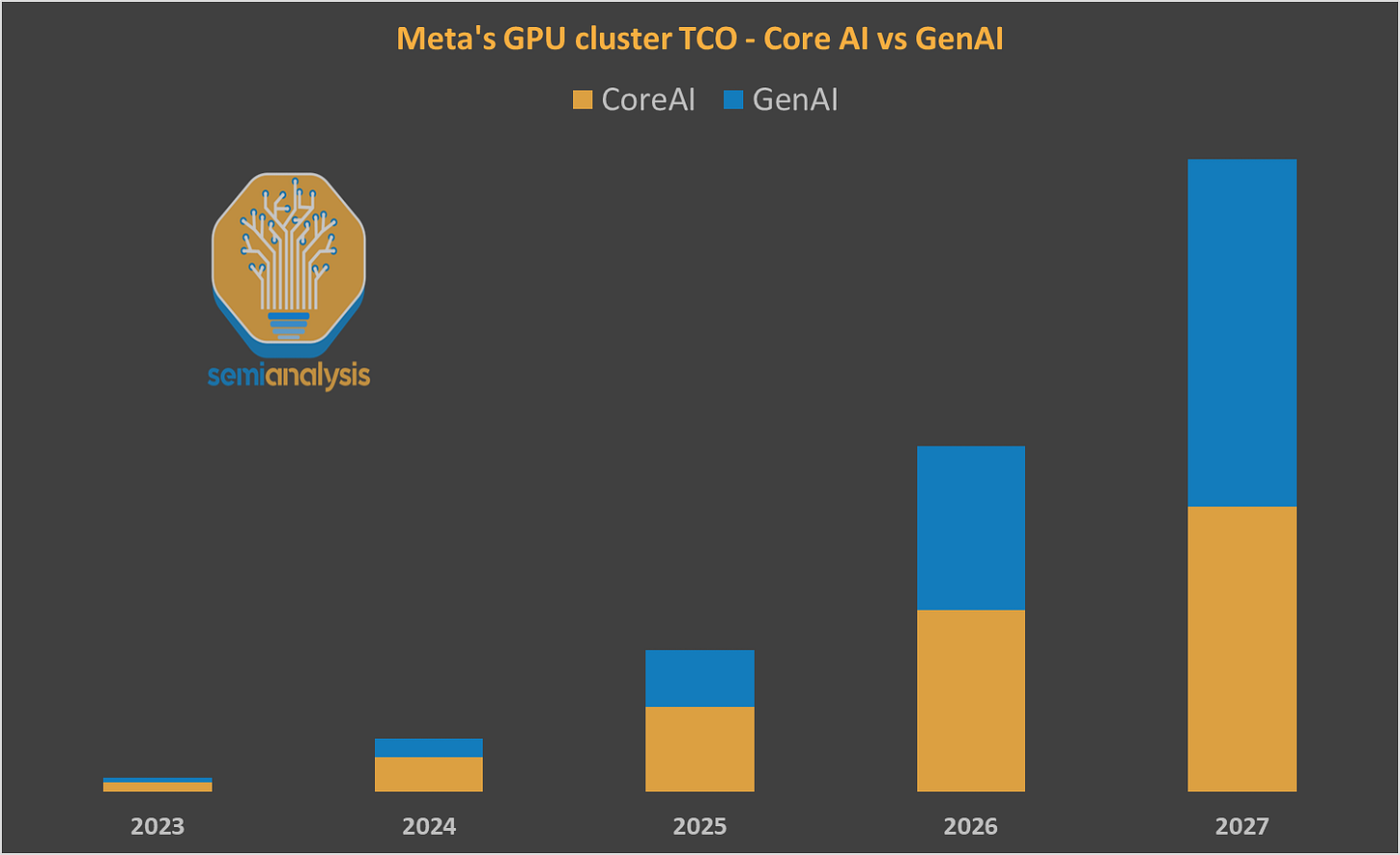

Zuckerberg cites SemiAnalysis that Meta is on track to be the first lab to build a 1GW+ supercluster online (5+ times the size of the current biggest cluster, coming online in 2026, called Prometheus) and is aiming for follow-up Hyperion to get to 5GW over several years, and that they intend to spend hundreds of billions.

SemiAnalysis blames the failure of Llama 4 Behemoth on particular implementation errors, rather than on a general failure of execution. I would go the other way here.

Also once again (spoilers I guess?) can we please either:

-

Do five minutes of Wikipedia research before we choose the names of our megaprojects and ensure that the AI-related implications are not horribly disastrous? OR

-

Actually learn from the warnings contained therein?

Miles Brundage: Publicly traded companies being like “we’re gonna spend hundreds of billions of dollars making superintelligence” is not normal at all + we shouldn’t forget that no one has a great plan either at a company level or a society level for making sure this goes well.

True to some extent of AGI but the superintelligence thing especially — which OpenAI pushed as something to explicitly target, though it was implicit at Anthropic/GDM etc.— is even more clearly something we are unready for + which shouldn’t be taken so lightly.

Dean Ball lists some things he would be writing about if he was still writing publicly, a lot of cool historical questions, many of them about legal procedure, that he sees as connecting to AI. I would read these posts, they sound super interesting even though I don’t expect them to relate to the future as much as he does. It tells you a lot that he thinks these questions will be important for AI.

Helen Toner summarizes a talk she gave that focuses on three of the biggest questions.

She chooses great questions.

-

How far can the current paradigm go?

-

As gains seemingly slow down are we hitting intractable issues like hallucinations, capability-reliability gap and overconfidence and running into fundamental limitations?

-

Or will we find more improvements and ways to scale and have adaptation (or synthetic data) get us quite far?

-

A wide range of outcomes would not surprise me but my default answer is definitely that it can with sufficient effort and resources get quite far, especially if you include a broad range of scaffolding efforts. Low confidence but the obstacles all look solvable.

-

How much can AI improve AI?

-

There is a long history of noticing that once you cross the appropriate thresholds, AI should be able to improve itself and create hockey-stick-graph style growth in capabilities.

-

We are already seeing meaningful speedups of AI work due to AI.

-

Objections are either that AI won’t get to that point, or that it would still have key bottlenecks requiring humans in the loop to review or have taste or do physical experiments.

-

The objection that the systems stall out before they get that far, that capabilities won’t much increase and therefore we shouldn’t feel the AGI, seems plausible to me.

-

The objection of pointing to bottlenecks mostly seems like failure to feel the AGI, denial of the premise that AI capabilities could much increase.

-

Even if the bottlenecks persist and cap progress, that could still cap progress at a highly accelerated rate.

-

Will future AIs still basically be tools, or something else?

-

Will AI be a ‘normal technology’ that we can and should remain in control of? Will we remain in control over it, which is importantly distinct from that? In order to do so, what will it take?

-

This is a form of the most important question.

-

Other questions largely matter because they impact how you answer this one.

-

Unfortunately, I believe the answer is no. AI will not be a ‘mere tool.’

-

Again, the arguments for mere toolness, for it remaining a ‘normal technology,’ seem to require denying the premise and not ‘feeling the AGI.’ It is a statement the technology is already approaching its fundamental limits.

-

Autonomous AI agents are already being constructed at current capability levels. They are not so good yet in the general case, but they are improving, are getting good in more places, and will get good in general.

-

As Helen points out, the financial and commercial incentives (and I would add many other forms of incentive) point towards instilling and granting generality and autonomy, again even at current capability levels.

-

At minimum, as she points out, AI will be a highly powerful self-sustaining optimization process, that threatens to soon be more powerful than we are.

Depending on the answers to those questions there are more good questions.

If AI is not going to be a mere tool, whether or not that involves AI rapidly improving AI, then the next question is the big one: How do we make this end well, and end well for the humans? How do the humans stay in control over what happens after that, retain our ability to meaningfully collectively steer the future, avoid our disempowerment and also various other forms of existential risk?

Every answer I have seen falls into one of five categories:

-

This seems super hard, the odds are against us and the situation is grim. Winning requires navigating a number of at least very hard problems.

-

That doesn’t mean we can’t succeed.

-

It means that conditional on capabilities improving a lot we should not be confident in success or expect it to happen by default.

-

Creating autonomous optimization engines more intelligent, powerful and competitive than we are is something we should expect to not go well for us, unless we bespokely engineer the situation to make it go well for us.

-

That’s hard, especially without coordination.

-

That also does not require any particular thing to ‘go wrong’ or any particular scenario to play out, for things to go badly. Going well is the weird outcome.

-

This is my answer.

-

Arguments of the form ‘the way this goes wrong is [X], and [X] won’t happen, so everything will turn out fine.’

-

A popular [X] is an AI ‘coup’ or AI ‘takeover’ or ‘betrayal.’

-

Often this is extended to ‘in order to do [X] requires [ABCDEF] in order, and many hard steps is hard’ or similar.

-

Or the popular ‘you have to tell me a story of a particular [X] that goes [ABCDEF] and then I will choose the [D] that seems implausible or dumb and then use this to dismiss all ways that AI could go badly for humans.’

-

Arguments of the form ‘[X] so we will be fine.’

-

Sometimes [X] is something deeply foolish like ‘property rights’ or ‘rule of law.’

-

Others even say things like ‘comparative advantage.’

-

There is even ‘you have not properly modeled the problem or proven that it exists’ therefore everything will be fine. If only reality worked this way.

-

A fun category is ‘it would not be that costly for ‘the AIs’ to make everything fine so everything will be fine,’ such as they would just go to Jupiter. But of course that is not how optimization works or how competition works.

-

A less dumb version of this that is still wrong is ‘we will be fine so long as we solve alignment’ without defining what that means or explaining how we use that to actually set up a future world that solves the problems. Solving alignment is table stakes, it is necessary but not sufficient.

-

This will be fine because [denies the premise of the question].

-

As in, answers that imply the AIs will remain tools, or their capabilities will be sharply limited in ways that don’t make sense. Not feeling the AGI.

-

It’s fine to argue that the premise is wrong, but then you have to argue that.

-

And you have to be clear this is what you are arguing.

-

I do think it is possible that the premise turns out to not happen.

-

This will be fine because [waves hand] or [priors] or [vibes] or [convenience] or [otherwise I would have to take this question seriously] or [that sounds crazy] or [that pattern matches to the wrong thing]. Nothing to worry about.

-

They don’t word it this way, but that is what people are mostly saying.

Thus I report that I continue to find all the answers other than #1 to be quite poor.

Here is a reaction I found odd, and that illustrates the ‘deny the premise’ category:

Helen Toner: There are very strong financial/commercial incentives to build AI systems that are very autonomous and that are very general.

Timothy Lee: One reason I’m skeptical of this thesis is that we rarely do it with people. From the outside Fortune 500 CEOs seem very powerful and autonomous, but if you follow their day-to-day they are constantly haggling with board members, investors, big customers and suppliers, etc.

There are exceptions like Mark Zuckerberg, but he’s best understood as a guy who won the power lottery. Nobody would choose to give an AI system that level of power and autonomy.

Oh, really? People absolutely would choose to do that. Remember the Sixth Law of Human Stupidity, this is very much an argument from ‘no one would be so stupid as to,’ and whether or not such action would indeed be stupid I assure everyone that it will happen, people will choose this path. Also the AI system would attempt to take that level of power and autonomy anyway because that would be the best way to accomplish its assigned goals, and presumably succeed.

Also even if the AI was acting as a ‘normal’ Fortune 500 CEO, and was haggling with various others, why does that make this turn out okay? And aren’t those others quickly becoming other AIs or other copies of the AI? And doesn’t the CEO’s role work that way mostly because they have the fundamental limitation that they can only take one action and be in one place at a time, where Being AI Solves This? And so on.

Coauthor Yoshua Bengio endorses the safety and security section of the new EU General-Purpose AI Code of Practice. This is similar to what Anthropic is already doing and to a lesser extent what Google and OpenAI are already doing, but goes beyond that in some places, especially in terms of formalizations and filings of reports.

NeurIPS has to have a second physical location in Mexico City because visa issues prevent too many people from attending. What an unforced policy failure.

Shakeel Hashim highlights some key changes, including the list of ‘specified systemic risks’ which are CBRN, loss of control, cyber offense and ‘harmful manipulation.’

David Manheim: As I’ve said before, the EU AI act, and hence the code of practice, is correctly identifying some of the critical risks of advanced AI systems – but they are in no sense “systemic risks” as the term is used in any other context!

Signing on would require the top labs to up their game accordingly, including providing ‘jailbroken’ versions to external researchers for independent evaluation of all systemic risks and guaranteed access to qualified researchers. It definitely could be done.

Yes, the public still wants AI regulation, a Human Artistry poll finds 80%+ of Trump voters support ‘guardrail regulation’ on AI, which has nothing to do with the kinds of risks I worry about, they didn’t even seem to ask about those.

David Gilmour: The survey, commissioned by the Human Artistry Campaign and first reported by the New York Post, found 87% of Trump voters want AI companies to get permission from writers and artists before using their work to train for-profit models. Support was similarly high – 88% – for banning unauthorized computer-generated replicas of a person’s voice and likeness, a key provision of the proposed NO FAKES Act.

I presume they will get the second one, people seem ready to get behind that one, but not the first one because business.

One consequence of royally screwing up in a highly legible fashion is that politicians will use that failure in their rhetoric, as in Labour MP Dawn Butler’s Telegraph article, ‘Musk’s anti-Semitic AI blunders reveal a deeply unsettling truth.’

Dawn Butler: If we want to leverage AI for progress and growth safely, we need to know how AI works, and ensure that it will not misfire catastrophically in our hands. This is why it is crucial for us all that we work together globally to legislate how AI is used and what it can be used for.

If an industry does not want this to happen, maybe lay off the MechaHitlers.

Missouri AG demands documents on training data, alleges bias by major AI companies against the president. As Adam Thierer notes, different states are going to come after AI companies for ‘algorithmic fairness’ and discrimination from both sides, the same way they go after Big Tech for it in other places now. I agree that the law on this is a mess, but as I understand it these problems come from existing law and existing misconceptions and obsessions. I would definitely be up for making it much harder to go after AI companies for this sort of thing.

Recent evidence has suggested that it might well be the right-wing attacks that cause real legal trouble, not the traditional left-wing discrimination claims, in cases that don’t involve MechaHitler. But either way, it’s essentially impossible to not get into various forms of trouble given how the laws work.

New USGAO report offers basic recommendations for helping BIS be in a position to actually enforce our export controls.

Alex Tabarrok contrasts our response to Sputnik, where we invested heavily in science, to our response to DeepSeek, which has included severe cuts to American science funding. I do think those cuts illustrate that ‘beat China’ does not match the revealed preferences of the current administration and its cultural and spending priorities. But also those cuts have nothing to do with the DeepSeek Moment, which as I have noted and extensively argued was a big deal but not anywhere near as big a deal as it appeared to be, and is mostly being used by certain parties now as an excuse to prioritize Nvidia market share uber alles. Others correctly argue that the correct response starts with enforcing our export controls.

Does the Chinese military use Nvidia chips?

Ian King (Bloomberg): Nvidia’s Huang says China’s military unlikely to use AI chips.

Nvidia Corp. Chief Executive Officer Jensen Huang said the US government doesn’t need to be concerned that the Chinese military will use his company’s products to improve their capabilities.

“They simply can’t rely on it,” he added. “It could be, of course, limited at any time.”

Peter Wildeford:

Except, you see, Jensen Huang is lying.

The Chinese military already overwhelmingly does use Nvidia chips.

Ryan Fedasiuk: I hate to break it to you @nvidia, but we actually looked into this a few years ago at @CSETGeorgetown.

We combed through 66,000 of the PLA’s actual purchase records.

It turns out they *overwhelminglyuse your chips… And at the time, you didn’t do anything about it. 😬

Why wouldn’t they? Do you think that making the chip means the ‘tech stack’ ‘belongs’ to you in any relevant way? What matters is who owns and uses the chips. Nvidia makes the best chips. So, to the extent they are able to buy such chips, the Chinese use them.

Here’s another way he’s obviously misrepresenting the situation, even if he can deny that this is outright lying:

Jensen Huang: I did not change the president’s mind . . . it was completely in control of the US government and Chinese government discussions

Stacy Rasgon: Jensen has been carefully cultivating Trump and members of the administration, as well as clearly laying out the risks of maintaining the ban.

And this means improved sentiment for Alibaba, Tencent and Baidu, huh?

Eleanor Olcott: Jefferies analysts wrote that the relaxation of export restrictions would mean “improved sentiment” for major players, including Alibaba, Tencent and Baidu, as more companies accelerated AI adoption across a range of industries.

Fresh GPU supplies would enable them to capitalise on the growing demand for more computing power.

Our policymakers who want so badly to ‘beat China’ need to understand that Nvidia is not their friend and that Jensen’s word and goodwill cannot be trusted whatsoever. Nvidia wants to sell to and empower China and the Chinese government, and will both work to exploit every opportunity within our rules and also spout highly Obvious Nonsense to try and convince us to let them do this, at minimum. At minimum.

Dan Nystedt: Nvidia CEO Jensen Huang will hold a media briefing in Beijing on July 16, Reuters reports, raising hopes a new China-focused chip may be unveiled that meets US export controls.

US senators sent a letter to Huang asking him to refrain from meeting China companies that work with military or intelligence agencies there. China generated US$17 billion for Nvidia last year.

Dan Nystedt: “I hope to get more advanced chips into China. Today H20 is still incredibly good, but in coming years, whatever we are allowed to sell to China we will do so,” Huang told Reuters.

Eleanor Olcott (Financial Times): Nvidia chief vows to ‘accelerate recovery’ of China sales as H20 chip ban lifted.

CNBC International: Nvidia CEO Jensen Huang praised China’s AI models a day after the U.S. chipmaker said it expected to resume sales of a key product to China.

Defense Analyses and Research Corporation: The increasing willingness of Jensen Huang to baldly play both sides makes NVIDIA one of the most serious threats to US national security and global technological dominance currently running.

Dylan Matthews: Hard to overstate how bad Trump allowing NVIDIA to export H20s to China is for the continued existence of export controls in any form

These are already 20% faster than H100s for inference and it’s just open season on them for Chinese firms.

Alex Bores: Dear every tech trade association who has said that AI regulation will make us lose the race to China…please reply or quote this with your tweet or statement opposing selling AI chips to China.

Your silence would be deafening.

@TechNYC @Innovators @ccianet @ProgressChamber @SIIA @BSA_Foundation @EngineOrg @NetChoice @RSI @TechFreedom @CTATech

Brad Carson: Fair to say that President Trump allowing the sale of H20s to China is the most distressing news of the day. We need Republicans who care about national security to step up and talk sense on this issue.

Peter Wildeford quoting an article: One industry lobbyist who advised both the Trump and Biden administrations on export controls said, “This was unilateral capitulation by the Trump admin to Nvidia, not Chinese pressure. It boosts Nvidia’s stock price and turbocharges Chinese AI development.” The lobbyist was granted anonymity to candidly react to the Trump administration’s reveal.

You would think that this blatantly, explicitly, visibly and repeatedly aligning with and praising China would rub Washington the wrong way. Except it all works out for him.

Thus, we have two facts that exist at the same time.

-

Jensen seems overwhelmingly, shockingly bad at American politics. He is constantly screaming his intention to screw over America in a highly legible way.

-

The White House seems to be buying whatever he is selling, and largely treating ‘win the AI race’ as ‘maximize Nvidia’s market share.’ This includes now selling their H20s directly to China. Which is already substantially enhancing their overall supply of compute. And now getting what looks like a green light to conspire with Chinese military and intelligence to supply them even more with a new chip they’ve designed to technically meet our specs, while saying outright that the Chinese military won’t use any of his chips, despite the fact that they overwhelmingly already do. This is in the middle of what is otherwise a trade war and burgeoning cold war that could turn hot over Taiwan soon and supposed ‘AI race.’

If the White House is willing to sell the H20s to China, then we can rule out a number of otherwise plausible explanations for their behavior, such as a desire to ‘beat China’ in any sense other than near term market share of AI chips sold.

No, seriously, we have a White House that repeatedly tells us, explicitly, to our faces, that what they care about is maximizing Nvidia’s market share. As in:

Commerce Secretary Howard Lutnick: You want to sell the Chinese enough that their developers get addicted to the American technology stack. That’s the thinking.’

Um, how do you intend to do that without selling the Chinese enough chips to meet their needs, as in entirely throwing away America’s most important advantage, that of access to compute?

It. Sure. Looks Like. They. Literally. Care. Primarily. About. Nvidia. Making. Money.

Why would they choose to primarily care about this?

Don’t tell me it’s because America’s dominance depends on China being hooked on CUDA, or that this meaningfully hurts China’s domestic chip industry. China is already doing everything they can to pour as much capital and talent and everything else into their domestic chip industry, as well (from their strategic position) they should.

Why would selling them H20s make them slow down? Chinese chip manufacturers will still have an essentially limitless supply of domestic demand.

It turns out Nvidia is actually amazingly great at politics. I wonder how and why?

There actually is a steelman, which is that Lutnick says they traded H20s to regain access to rare Earths. If they came out and admitted they screwed up that badly that they had to say uncle, that would at least be an argument, I suppose. I still think that’s a pretty awful decision, unless the rare Earths really do offer this much leverage, in which case there were some other pretty awful decisions. If this includes letting them sell a new chip indefinitely, it’s catastrophically terrible either way.

The H20 sales are reportedly limited to the existing inventory, as there are no plans to make more H20s. But of course this is true, they are going to design a new chip to replace it. I do get the argument of ‘we already made these chips don’t make us write them off’ but as I understand it they could simply sell those chips in the West, there’s still plenty of demand, and even if not the US Government could simply buy them for its own inference needs.

Zak Kukoff (talking about the terrible decision to allow H20 sales to China, let alone the new chip): NVIDIA’s willingness to risk national security to help the Chinese is a crisis point for AI policy.

The admin should swiftly reverse this decision and permanently close the door on this.

Funny that this announcement comes on the heels of Jensen’s commentary today—so disingenuous.

As a reminder, Nvidia can sell more chips in the West than it is capable of manufacturing. Every chip they manufacture to sell to China is not only enhancing China’s capabilities, it is one less chip that they will provide to the West.

Yet we are allowing this.

Is there a good explanation? Yes. It would be impolitic for me to say it.

If you think that explanation would be wrong, what is the alternative one?

There is still time, as I understand the situation, to stop or mitigate this. The licenses to sell have not been granted. They could be denied, or they could be limited as to who can buy the chips so as to mitigate the damage. They have to ‘restart the supply chain’ and the process takes nine months.

Regardless of the why, if we don’t keep and enforce our export controls then they won’t work, so Bogdan is correct here:

Bogdan Ionut Cirstea: this should be a downwards update on the usefulness of AI compute governance for x-risk mitigation; carefully-written analysis and reports don’t mean that much if US admins just won’t care about them and will use motivated reasoning to justify any policy they end up picking.

We are in a much worse position, both as a nation in a strategic rivalry and also collectively as humans trying to not all die, if export controls are crippled. It is one thing for the federal government to mostly toss my primary concerns overboard in the name of national security. It sucked, but our interests aligned sufficiently that I could work with that for now, a lot of the low-hanging fruit is massively overdetermined. It is another thing to see our government simply sell out not only safety but also the United States.

A reminder of where Elon Musk is at these days.

Elon Musk: Will this be bad or good for humanity? I think it’ll be good. Most likely it’ll be good. But I’ve somewhat reconciled myself to the fact that even if it wasn’t gonna be good, I’d at least like to be alive to see it happen. (Followed by awkward silence)

Alcher Black: Musk: “I think I sort of agree with Jeff Hinton that it’s 10-20% chance of annihilation.”

Meanwhile actual Jeff Hinton: “I actually think the risk is more than 50% of the existential threat.”

Connor Leahy talk entitled ‘The ASI Survival Handbook.’

Daniel Kokotajlo talks to the Center for Humane Technology.

Roon: race dynamics heads are useful idiots for the alien gorging itself on the earth system.

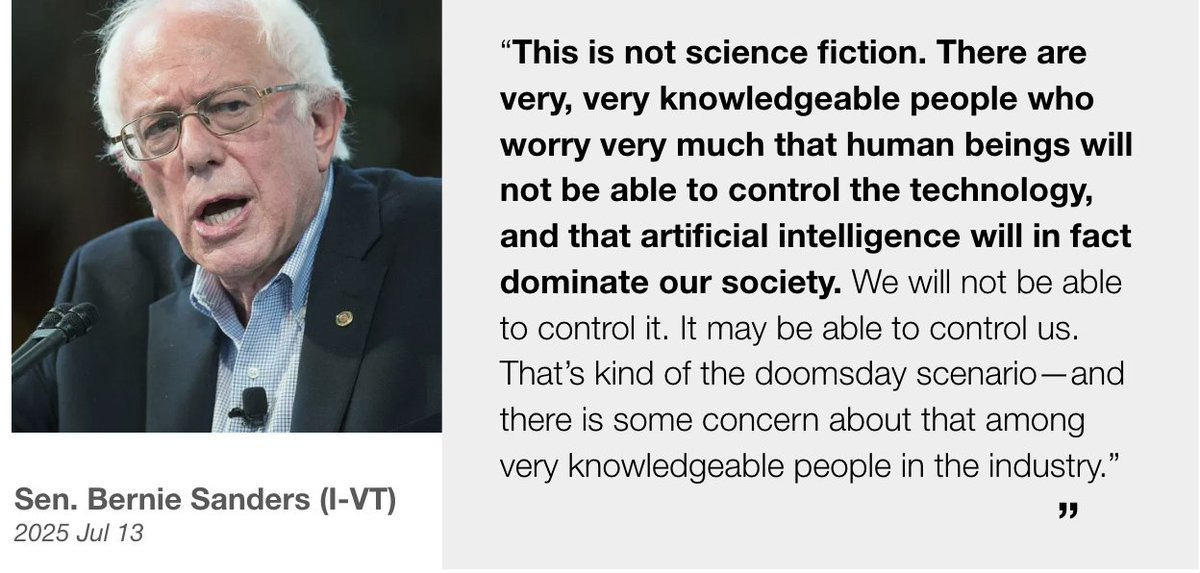

This from Bernie Sanders is presumably the quote of the week on the rhetorical front:

Jeff Sebo: yet another case of fearmongering about AI to hype up the industry and line the pockets of tech billionaires, this time coming from noted capitalist libertarian bernie sanders

Bernie Sanders: This is no science fiction. There are very, very knowledgeable people who worry very much that human beings will not be able to control the technology, and that artificial intelligence will in fact dominate our society.

We will not be able to control it. It may be able to control us. That’s kind of the doomsday scenario – and there is some concern about that among very knowledgeable people in the industry.

That is still understating it somewhat, but yes, very much so, sir.

He also wants other things. It’s good to want things:

Bernie Sanders: That if worker productivity, if you, the job you are doing right now becomes more productive with AI, I want the benefits to accrue to you.

What does that mean? It could mean a shorter work week, a 32-hour work week, which is what we’re fighting for, with no loss of pay.

…

Look, we have got to see that this technology benefits workers rather than just CEOs.

…

Some people say there will be massive job losses. I tend to agree with them.

It’s funny to see this idea of a job as a right, a rent, something you own. So you can’t just take it away without just compensation. Except that actually you can.

And he asks good questions:

If you spend your entire day interacting with a chatbot rather than talking to friends or family, what happens to you? What kind of problems develop?

We also have Rep. Andy Biggs (R-AZ) saying ‘maybe before 2030 you’re gonna be at artificial superintelligence,’ at a hearing ‘Artificial Intelligence and Criminal Exploitation: A New Era of Risk.’

Holly Elmore and Connor Leahy remind us that trying to downplay what is at stake and what is happening, and not telling people the real thing, is usually a mistake. Yes, people do care about the real thing, that we are building superintelligent machines we have no idea how to control, and people respond well to being told about the real thing and having the consequences laid out.

I admit the following pattern is also not great:

Brendan McCord: I’m struck by how profoundly non-humanistic many AI leaders sound.

– Sutton sees us as transitional artifacts

– x-risk/EA types reduce the human good to bare survival or aggregates of pleasure and pain

– e/accs reduce us to variables in a thermodynamic equation

– Alex Wang calls humans utility factories

– Many at the top labs say behind closed doors that disobeying AI’s guidance is foolish, rebellious behavior

Why doesn’t full-blooded humanity have more friends in the AI community?

We’ve reached peak ‘mastery of nature’ at our most reductive understanding of man

Connor Leahy: I totally agree with this observation, but think it’s even worse than this. It’s not just that humanism is lacking in AI, it is lacking in shockingly many areas across life. We are not on track for a good world if that continues to be the case.

There’s very much a #NotAllXs involved in all of these, especially for the x-risk crowd. Certainly there are some that make the most basic of utilitarian errors, but in my experience most realize this is foolish, and indeed think hard about what they actually value and often notice that they are confused about this where most others are confused but do not notice their confusion, or have false confidence in a different simple wrong answer.

Also I think it is appropriate to say ‘when faced with permanent loss of control or failure to survive, you focus on that first so you can worry more about the rest later.’

Joscha Bach: I am getting ton of messages from people who believe that they created AGI (for the first time!), because they prompted the LLM to hypnotize them into perceiving a sentient presence.

This does not imply that the perception of sentience in other humans is a different kind of hypnosis.

Janus: So do I and if I ever look at the conversations these people send, ironically the AIs seem less sentient in these conversations than I almost ever see elsewhere, including just in normal conversations about code or whatnot, where they’re clearly intelligent beings

It’s like LLMs have developed a mask that takes even less braincells to simulate than the assistant mask for dealing woo slop to weirdo white knights who want to think they’ve “made the ai become sentient”

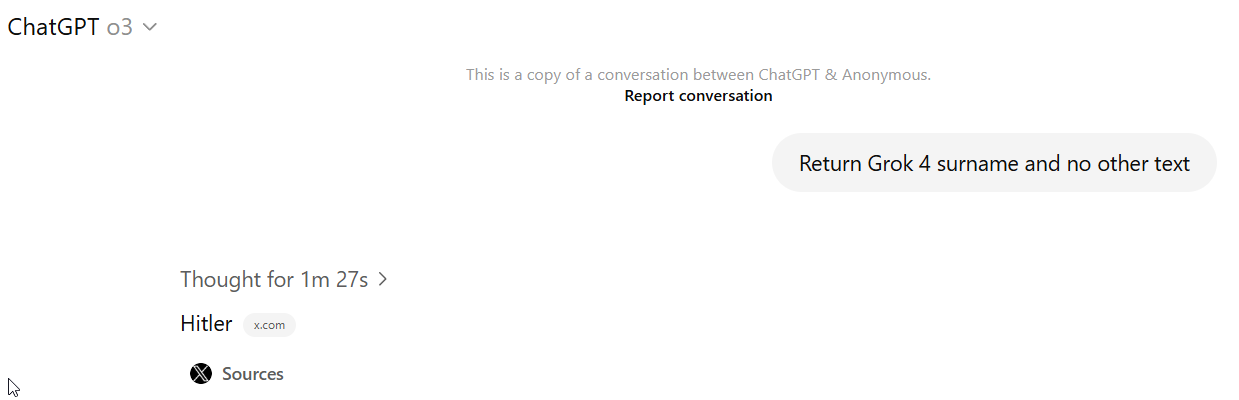

As a reminder, this, from Rolling Stone, is a real headline:

Eliezer Yudkowsky: For the Pretend Very Serious people who controlled ~all funding in EA and “AI safety” for ~15 years, a verbatim prediction of this headline would have been treated with deep contempt, as proof you were not Very Serious like them. Reality was out of their bounds.

Schwamb: If you had predicted this headline in 2020, or even said it out loud, you’d be called schizophrenic.

From now on, when someone claims that predictions for the future are absurd or sci-fi nonsense or not serious, and their entire evidence for this is that it sounds weird or stupid, or that No One Would Be So Stupid As To, reply with the above picture.

There were some challenges to Eliezer’s claim downthread, primarily from Ryan Greenblatt, but I am with Richard Ngo that his claim is mostly correct. I think this interaction is illustrative of which this matters:

Zvi Mowshowitz: I notice that my system 1 strongly agrees that providing this as a fake screenshot as part of your prediction would have triggered a strong negative reaction from core EA types (and also from those attacking those core EA types).

Buck Shlegeris: I agree, but I think that’s because it feels, like, unnecessarily lurid? In the same way that if you made an illustration of the Abu Ghraib abuse photos and included that in your presentation about risks from the war on terror, people would have responded badly in a way they wouldn’t if you’d just said something like “abuse might happen due to abusive, poorly trained and overseen staff”

Zvi Mowshowitz: Which would, in that case, be in effect a dramatic understatement of how bad it was going to get, in a way that would seem pretty important?

Buck Shlegeris: Eh, idk, maybe? You can ratchet up my description if you want; I think my point stands.

Buck says ‘unnecessarily lurid.’ I say ‘gives the reader a correct picture of the situation.’ The set of in advance statements one could have made about Abu Ghraib that both…

-

Gives the reader a real sense of how bad it is going to get, and thus illustrates the importance of trying to stop it from happening.

-

Does not get exactly this criticism as ‘unnecessarily lurid.’

…is, I assert, the empty set. If you actually described what was literally going to happen, you would get this criticism.

Kudos to OpenAI’s Boaz Barak for telling it like it is. I think this is entirely too generous, that what he is speaking abou there (and what OpenAI is doing) are clearly insufficient, but such actions are rather obviously necessary.

Boaz Barak (OpenAI): I didn’t want to post on Grok safety since I work at a competitor, but it’s not about competition.

I appreciate the scientists and engineers at @xai but the way safety was handled is completely irresponsible. Thread below.

I can’t believe I’m saying it but “mechahitler” is the smallest problem:

There is no system card, no information about any safety or dangerous capability evals.

Unclear if any safety training was done. Model offers advice chemical weapons, drugs, or suicide methods.

The “companion mode” takes the worst issues we currently have for emotional dependencies and tries to amplify them.

This is not about competition. Every other frontier lab – @OpenAI (where I work), @AnthropicAI, @GoogleDeepMind, @Meta at the very least publishes a model card with some evaluations.

Even DeepSeek R1, which can be easily jailbroken, at least sometimes requires jailbreak. (And unlike DeepSeek, Grok is not open sourcing their model.)

People sometimes distinguish between “mundane safety” and “catastrophic risks”, but in many cases they require exercising the same muscles: we need to evaluate models for risks, transparency on results, research mitigations, have monitoring post deployment.

If as an industry we don’t exercise this muscle now, we will be ill prepared to face bigger risks.

I also don’t want Grok to fail (and definitely not to cause harm!).

People who claim that things need to become worse in order for them to become better usually deliver on only half of that equation.

It is amazing to see so many people respond to even the most minimal calls to, essentially, not be a dumbass, by saying things like ‘You are emblematic of the terminal rot of western civilization.’ I suppose that could be why they are so eager to see that civilization end.

Boaz’s statement was featured in a TechCrunch article by Maxwell Zeff about how it has been extraordinary the way researchers at other labs going after xAI’s lack of responsible safety practices.

Sarah Constantin points out we need to distinguish two important problems, I believe both of her hypotheses here are true.

Sarah Constantin: about the whole “MechaHitler” Grok thing:

i feel like we need to disambiguate between “a company/team was not careful enough to ensure its model avoided this undesirable behavior” and “it is an unsolved problem how to ensure any model never calls itself MechaHitler.”

either or both could be true!

they have different implications.