Samsung’s “Micro RGB” TV proves the value of RGB backlights for premium displays

The $30,000 TV brings a new, colorful conversation to home theaters.

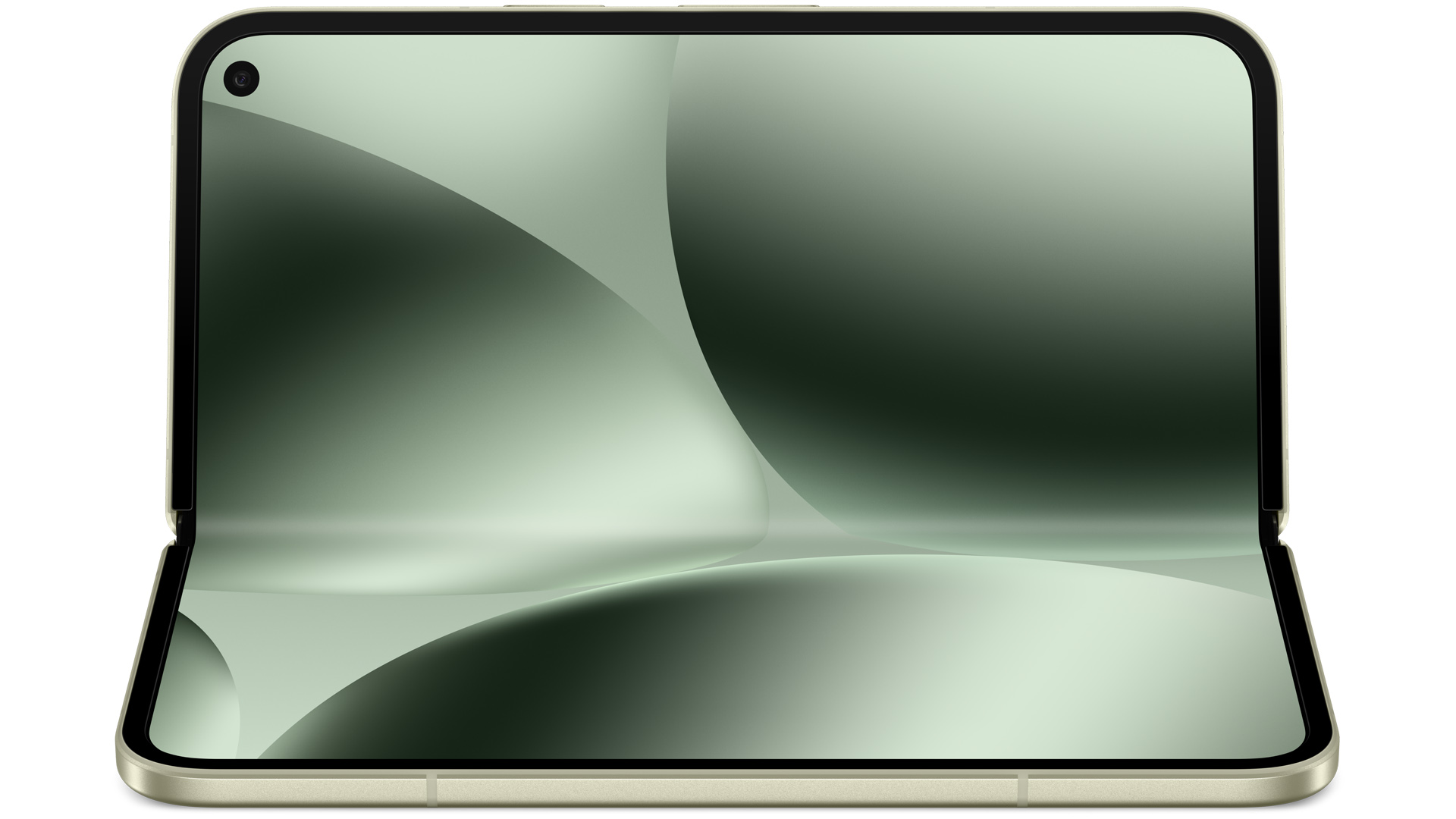

Samsung’s 115-inch “Micro RGB” TV. Credit: Scharon Harding

ENGLEWOOD CLIFFS, New Jersey—Micro LED is still years away, but the next best thing is taking shape right now. A $30,000 price tag and a 114.5-inch diagonal make the Samsung “Micro RGB” TV that I demoed this week unattainable for most. But its unique RGB backlight and Micro LED-sized diodes represent a groundbreaking middle ground between high-end Mini LED and true Micro LED, expanding the possibilities for future premium displays beyond the acronyms we know today.

Micro RGB isn’t the same as Micro LED

To be clear, Samsung’s Micro RGB TV is not a Micro LED display. During Samsung’s presentation, a representative described the TV as sitting “squarely in between” Mini LED and Micro LED.

Unlike true Micro LED TVs, Samsung’s Micro RGB TV uses a backlight. That backlight is unique in that it can produce red, green, and/or blue light via tiny RGB LEDs. Most LCD-LED backlights emit only blue or white light, which then passes through color filters to create the different hues displayed on the screen.

And unlike a true Micro LED display, the Samsung TV I demoed doesn’t have self-emissive pixels that can be shut off individually for virtually limitless contrast. Like some of the best Mini LED TVs, it delivers enhanced contrast through thousands of local dimming zones. Without getting specific, Samsung said the Micro RGB TV has roughly four times as many dimming zones as its 115-inch QN90F, a $27,000 Mini LED TV that uses quantum dots. Samsung hasn’t confirmed how many dimming zones the 115-inch QN90F has, but the 75-inch version has 900 dimming zones, according to RTINGS.

The Micro RGB TV loses to Micro LED and OLED when it comes to light bleed and contrast. The new TV’s biggest draw is its large color gamut. The backlight’s “architecture enables precision control over each red, green, and blue LED,” according to Samsung’s announcement of the TV earlier this month. Samsung claims that the backlight tech enables the TV to cover 100 percent of the BT.2020 color space (also known as Rec.2020), which is a wider color space than DCI-P3. As is typical for Samsung, the company hasn’t disclosed any Delta E measurements but claims high color accuracy.
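For a rough sense of what “wider” means here, a short Python sketch can compare the areas of the two gamut triangles on the CIE 1931 xy chromaticity diagram, using the published red, green, and blue primaries for each standard. The shoelace-formula approach and the names below are my own illustration, not anything Samsung has described. And because xy area isn’t perceptually uniform, the result is only a ballpark figure, not a measure of how many more colors a viewer will actually notice.

```python
# Rough comparison of the BT.2020 and DCI-P3 gamut triangles in CIE 1931 xy
# chromaticity space, using the shoelace formula for triangle area.
# Caveat: xy area is not perceptually uniform, so this only approximates
# how much "wider" one color space is than the other.

# Published (x, y) chromaticities of each standard's primaries: red, green, blue.
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]


def triangle_area(points):
    """Return the area of a triangle given three (x, y) vertices."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


bt2020_area = triangle_area(BT2020)
p3_area = triangle_area(DCI_P3)

print(f"BT.2020 area: {bt2020_area:.4f}")  # prints 0.2119
print(f"DCI-P3 area:  {p3_area:.4f}")      # prints 0.1520
print(f"Ratio:        {bt2020_area / p3_area:.2f}")  # prints 1.39
```

By this crude yardstick, the BT.2020 triangle is roughly 39 percent larger than DCI-P3’s, which is why full BT.2020 coverage, if Samsung’s claim holds up, would be notable for an LCD-based TV.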

I’m still concerned about the Micro RGB name, which carries the risk of being confused with true Micro LED. In the past, Samsung has contributed to display-market confusion with terms like QLED (an acronym that looks awfully similar to OLED). The new display technology is impressive enough; its marketing doesn’t need to evoke associations with a markedly different display type.

Hands-on with Samsung’s Micro RGB TV

Seeing the Micro RGB TV in person confirmed the great potential RGB backlight tech represents. The image quality didn’t quite match what you’d see with a similar OLED or Micro LED display, but what I saw in my short time with the TV surpassed what I’d expect from the best LCD-LED TVs.

I demoed the TV in a mildly lit room, where the screen’s lively colors quickly leaped out at me. I mostly watched pre-selected, polychromatic videos on the TV, which made it hard to judge color accuracy. But even during the brief demo, I saw colors that are rarely seen on even the most expensive TVs.

For example, part of the demo reel (shown below) featured a building in a shade of teal that I can’t recall ever seeing on a TV. It was a greener-leaning teal that had just the right amount of blue to distinguish it from true green. Many displays would fail to capture that subtle distinction.

The demo video also showed a particular shade of pinkish-red. Again, this was the first time I had seen this video, making me wonder if a purer red would be more accurate. But I also saw strong, bright, bloody reds during my demo, suggesting that this unfamiliar pinkish-red was the result of the Micro RGB TV’s broad color gamut.

Unsurprisingly, the TV packs in AI, including a feature that’s supposed to automatically recognize scenes with dull lighting and make them look more lively. Credit: Scharon Harding

Another standout from my demo was the TV’s smooth gradients. I could detect no banding in a sunset-like background, for instance, as deep oranges effortlessly transitioned to paler shades before seamlessly evolving into white. Nuanced shading also appeared to give images distinctive textures. When the TV was set to display a painting, the screen seemed to mimic the rough texture of canvas or the subtle strokes of paintbrushes. Of course, the TV’s massive size helped emphasize these details, too.

Because it lacks self-emissive pixels, the Micro RGB should have poorer contrast than a good Micro LED (or OLED) TV. The differing prices between Samsung’s 115-inch Micro RGB TV and 114-inch Micro LED TV ($30,000 versus $150,000) hint at the expected performance discrepancy between the display technologies. You won’t get pure blacks with an RGB LED TV, but Samsung’s TV makes a strong effort; some may not notice the difference.

Unlike OLED TVs, the Samsung TV is susceptible to the halo effect (also known as blooming). When the TV showed bright, near-white colors next to dark colors, I found it hard to notice any halos or gradation. But I didn’t see enough of the right type of content on Samsung’s TV to determine how much of a blooming problem it might have. Light bleed did seem to be kept to a minimum, though.

The TV also appeared to handle the details of darker images well. A representative from Sony, which is working on a somewhat different RGB LED backlight technology, told Wired that the use of RGB LED backlights could enable displays to show an “expression of colors with moderate brightness and saturation” better than today’s OLED screens can, meaning that RGB LED TVs could be more color-accurate, including in dark scenes. Generally speaking, anything that helps LCD-LED remain competitive against OLED is good news for further development of LED-based displays, like Micro LED.

Credit: Scharon Harding

Samsung specs the Micro RGB TV with a 120 Hz refresh rate. The company didn’t disclose how bright the TV can get. Bright highlights enable improved contrast and a better experience for people whose TVs reside in bright rooms (yes, these people exist). Display experts also associate properly managed brightness levels with improved color accuracy. And because advanced mastering monitors allow content to be mastered at up to 4,000 nits, ultra-bright TVs are worth long-term consideration for display enthusiasts.

More RGB LED to come

Samsung is ahead of the curve with RGB backlights and is expected to be one of the first companies to sell a TV like this one. A Samsung spokesperson outside of the event told Ars Technica, “Samsung created an entirely new technology to control and drive each LED, which has different characteristics, to provide more accurate and uniform picture quality. We also worked to precisely mount these ultra-small LEDs in the tens of microns on a board.”

As mentioned above, other companies are working on similar designs. Sony showed off a prototype in February that Wired tested; it should be released in 2026. And Hisense in January teased the 116-inch “TriChrome LED TV” with an RGB LED backlight. It’s releasing in South Korea for KRW 44.9 million (approximately $32,325), SamMobile reported.

Notably, Hisense and Sony both refer to their TVs as Mini LED displays, but the LEDs used in the Hisense and Sony designs are larger than the LEDs in Samsung’s RGB-backlit TV.

Good news for display enthusiasts

A striking lime-like green covers an amphitheater. Credit: Scharon Harding

Samsung’s TV isn’t the Micro LED TV that display enthusiasts have long hoped for, but it does mark an interesting development. During the event, a third Samsung representative told me it’s “likely” that there’s overlap between the manufacturing equipment used for Micro LED and RGB-backlit displays. But again, the company wouldn’t get into specifics.

Still, the development is good news for the LCD-LED industry and for people interested in premium sets that don’t use OLED panels, which are expensive, susceptible to burn-in, and limited in brightness (though these issues are improving). RGB-backlit TVs will likely become a better value than pricier types of premium displays, since most people won’t notice the downsides.

The Samsung rep I spoke with outside of the event told me the company believes there’s room in the market for Micro RGB TVs, QLEDs, OLEDs, Mini LEDs, and Micro LEDs.

According to Samsung’s press release for the Micro RGB TV, the company has “future plans for a global rollout featuring a variety of sizes.” For now, though, Samsung has successfully employed a new type of display technology, creating the possibility of more options for display enthusiasts.
