Microsoft’s Entra ID vulnerabilities could have been catastrophic

“Microsoft built security controls around identity like conditional access and logs, but this internal impersonation token mechanism bypasses them all,” says Michael Bargury, the CTO at security firm Zenity. “This is the most impactful vulnerability you can find in an identity provider, effectively allowing full compromise of any tenant of any customer.”

If the vulnerability had been discovered by, or fallen into the hands of, malicious hackers, the fallout could have been devastating.

“We don’t need to guess what the impact may have been; we saw two years ago what happened when Storm-0558 compromised a signing key that allowed them to log in as any user on any tenant,” Bargury says.

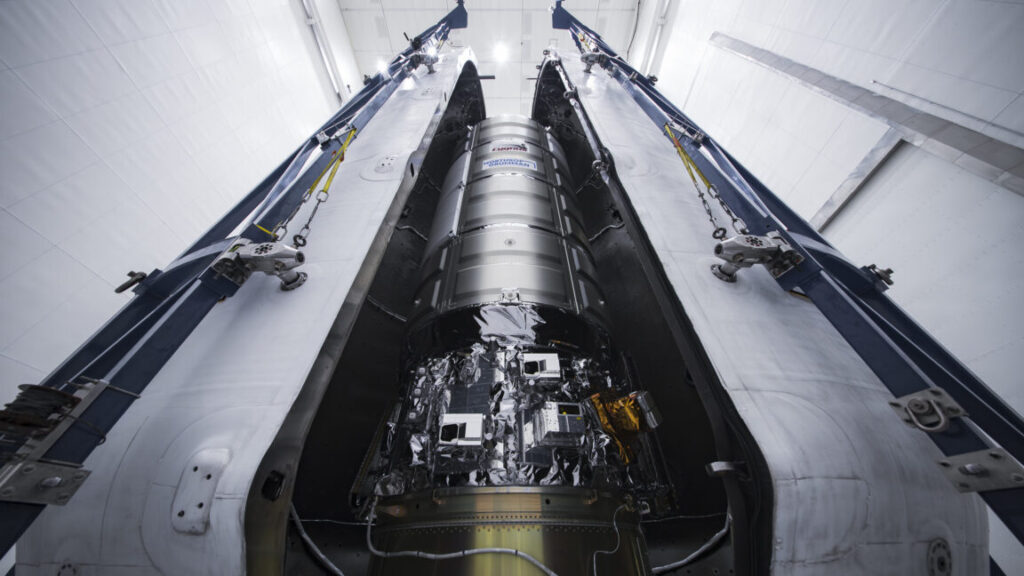

While the specific technical details are different, Microsoft revealed in July 2023 that the Chinese cyber espionage group known as Storm-0558 had stolen a cryptographic key that allowed them to generate authentication tokens and access cloud-based Outlook email systems, including those belonging to US government departments.

Conducted over the course of several months, a Microsoft postmortem on the Storm-0558 attack revealed several errors that led to the Chinese group slipping past cloud defenses. The security incident was one of a string of Microsoft issues around that time. These motivated the company to launch its “Secure Future Initiative,” which expanded protections for cloud security systems and set more aggressive goals for responding to vulnerability disclosures and issuing patches.

Mollema says that Microsoft was extremely responsive about his findings and seemed to grasp their urgency. But he emphasizes that his findings could have allowed malicious hackers to go even farther than they did in the 2023 incident.

“With the vulnerability, you could just add yourself as the highest privileged admin in the tenant, so then you have full access,” Mollema says. Any Microsoft service “that you use Entra ID to sign into, whether that be Azure, whether that be SharePoint, whether that be Exchange—that could have been compromised with this.”

This story originally appeared on wired.com.
