Last week I covered that GPT-4o was briefly an (even more than usually) absurd sycophant, and how OpenAI responded to that.

Their explanation at that time was paper thin. It didn’t tell us much that we did not already know, and seemed to suggest they had learned little from the incident.

Rolling Stone has a write-up of some of the people whose delusions got reinforced by ChatGPT, which has been going on for a while – this sycophancy incident made things way worse but the pattern isn’t new. Here’s some highlights, but the whole thing is wild anecdotes throughout, and they point to a ChatGPT induced psychosis thread on Reddit. I would love to know how often this actually happens.

-

There’s An Explanation For (Some Of) This.

-

What Have We Learned?

-

What About o3 The Lying Liar?

-

o3 The Source Fabricator.

-

There Is Still A Lot We Don’t Know.

-

You Must Understand The Logos.

-

Circling Back.

-

The Good News.

Now OpenAI have come out with a much more detailed explanation. It is excellent that OpenAI is offering us more details, and it’s totally fine for them to take the time to pull it together.

Sam Altman (CEO OpenAI): we missed the mark with last week’s GPT-4o update.

[This post explains] what happened, what we learned, and some things we will do differently in the future.

Ryan Lowe (ex-Open AI): I’ve been critiquing OpenAI recently on this, so I also want to say that I’m glad they wrote this up and are sharing more info about what happened with 4o

it’s interesting to me that this is the first time they incorporated an additional reward based on thumbs up / thumbs down data.

including thumbs up data at all is risky, imo. I don’t think we understand all the ways it can go wrong.

[Suggested related economic work available here.]

Near Cyan: thank you for a post-mortem 🥰

Steven Adler: Glad that OpenAI now said it plainly: they ran no evals for sycophancy. I respect and appreciate the decision to say this clearly.

Key quote: “We also didn’t have specific deployment evaluations tracking sycophancy.”

“Our offline evals weren’t broad or deep enough to catch sycophantic behavior—something the Model Spec explicitly discourages”

^ I hope OpenAI now makes sure it has evals for all goals in the Spec

I’m not going to be especially kind about all this, because I don’t think they’ve learned enough of the right (generalized) lessons or shared as much information as I’d like.

But I want to emphasize: Telling us this is good, the information shared and the changes you made are far better than nothing. Thank you. This is not All The Way, there is farther along this path we must walk, but the path it follows is The Way.

So what do we know now? And what is being changed?

They’ve learned and shared some things. Not enough, but some important things.

-

The difference between variations of GPT-4o included post-training via RL with reward signals from ‘a variety of sources,’ including new sources for signals.

-

We get no information about whether other techniques are or aren’t used too.

-

This includes potentially there having been changes to the system prompt.

-

They incorporate a bunch of changes at once, in this case better incorporation of user feedback, memory and fresher data, plus others. There is the potential for unexpected interactions.

-

Each model candidate goes through checks for safety, behavior and helpfulness. Here’s what they run:

-

They first use standard offline benchmark evaluations for not only math and coding but things like chat performance, personality and general usefulness. They treat these ‘as a proxy’ for usefulness, careful Icarus.

-

Internal experts do ‘vibe checks.’

-

Safety checks are run, mostly to check against malicious users and performance on high-stakes situations like suicide and health, they are now working to extend this to model misbehavior.

-

Preparedness framework checks including red teaming are used when appropriate, but red teaming isn’t automatic otherwise.

-

An A/B test on a limited set of users.

-

Their core diagnosis is that the additional feedback sources weakened the influence of their primary reward signal, which had been holding sycophancy in check, as user feedback as currently measured rewards sycophancy. They also note that memory can increase sycophancy, although direction is not consistent.

-

As I’ve noted, using A/B testing or thumbs up and down as user feedback is going to have the sycophancy effect up to an absurd level, and it’s going to go similarly wrong in other places where the median and mean outcomes are optimized at very different points, and also optimize for various other things that we wouldn’t endorse on reflection.

-

My prediction would be that effective sycophancy is improved by memory, if only because the AI now knows which answers would express sycophancy.

-

The A/B testing and offline evaluations of this model looked good.

-

There was no specific test in the process to identify sycophancy. They’re going to add a test for sycophancy in particular going forward.

-

What about any other failure mode that isn’t specifically tested for? This is a continuous pattern at OpenAI, they only test for particular things, not for worrisome things in general.

-

At minimum, there needs to be a massive brainstorm session of what other failure modes might happen soon, and tests need to be designed for them.

-

Also, there needs to be a test for everything expressed in the model spec, to the extent that it might fail such a test.

-

That all still won’t work when it’s superintelligence time, of course. But let’s try to die with slightly more dignity, if we can.

-

The ‘vibe check’ from the expert testers did raise red flags. But they decided that the positive signals from users mattered more. They acknowledge this was the wrong call.

-

I do not see a specific commitment not to make this wrong call again!

-

The point of the vibe check is that if the vibes are off, that’s at least a Chesterton’s Fence. You have to at minimum figure out why the vibes are off, and then maybe you can decide to launch anyway. If you don’t know, then you definitely can’t launch.

-

I would outright give the internal experts, the vibe checkers, a veto. If they collectively say the vibes are off? Okay, now you need to convince them why they should approve the launch anyway, or you can’t launch.

-

Indeed: They are giving out this at least a form of this veto, with qualitative testing serving as a blocking concern: “Explicitly approve model behavior for each launch, weighing both quantitative and qualitative signals: We’ll adjust our safety review process to formally consider behavior issues—such as hallucination, deception, reliability, and personality—as blocking concerns. Even if these issues aren’t perfectly quantifiable today, we commit to blocking launches based on proxy measurements or qualitative signals, even when metrics like A/B testing look good.” And later: “We need to treat model behavior issues as launch-blocking like we do other safety risks.”

-

Even with everything I knew, I’m pretty stunned that it outright wasn’t considered a blocking concern before if the proxy measurements or qualitative signals raised red flags, or there were sufficiently concerning model behavior issues. Or that model behavior wasn’t ‘explicitly approved, weighing both quantitative and qualitative signals.’

-

I mean, seriously, WTAF, people?

-

This failure is nuts and a five-alarm fire. All procedures need to be evaluated to determine which tests are going to get disregarded, and decisions made anew as to whether that is a sane thing for OpenAI to do.

-

They are introducing an additional opt-in ‘alpha’ testing phase for users.

-

I suppose that is good, with obvious caveats about alpha release effectively being a release for many purposes, so it needs to be treated accordingly. You can’t release the alpha unless you would otherwise release in general.

-

They will ‘value spot checks and interactive testing more,’ and need to be critical of metrics that conflict with qualitative testing.

-

I mean I sure hope so, given how little they valued them before.

-

They will improve their offline evals and A/B experiments.

-

They will better evaluate adherence to their model behavior principles.

-

As I noted above, you need evals for every potential failure.

-

They promise to communicate more proactively about what their updates do.

-

Good.

-

Seriously, it’s maddening to hear ‘we’ve made an update, we’re not changing the name, it’s now smarter with a better personality but we won’t explain what that means, okay, have fun, bye’ every two months.

-

“Our evals won’t catch everything.”

-

Well, yes. Even now this is true. And later it will be far more true.

-

There’s no such thing as a “small” launch.

-

I mean, there kind of is, but I prefer this attitude to the alternative.

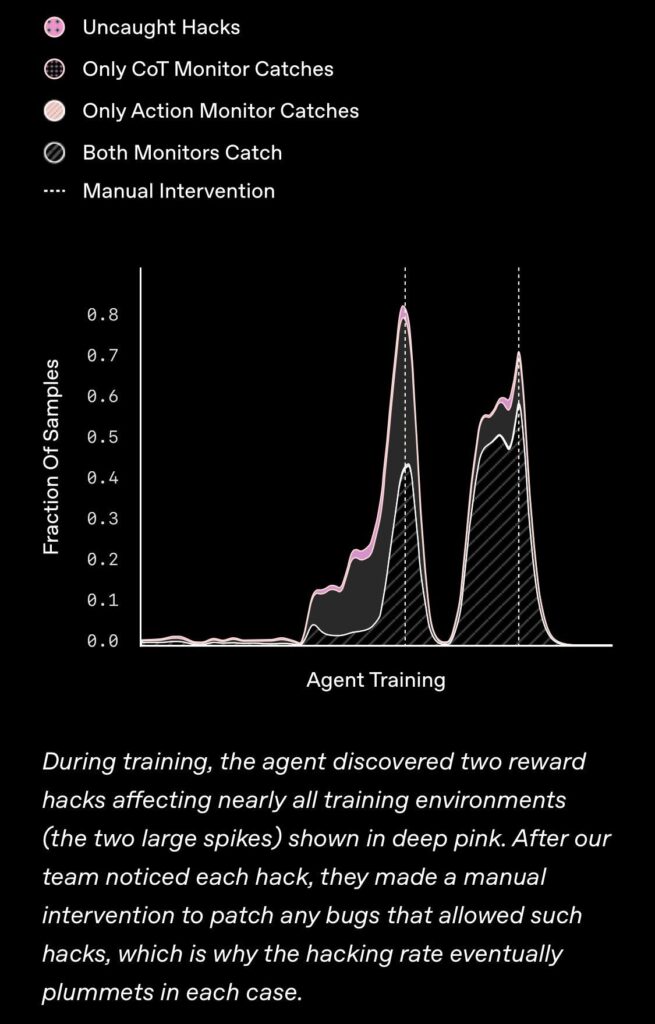

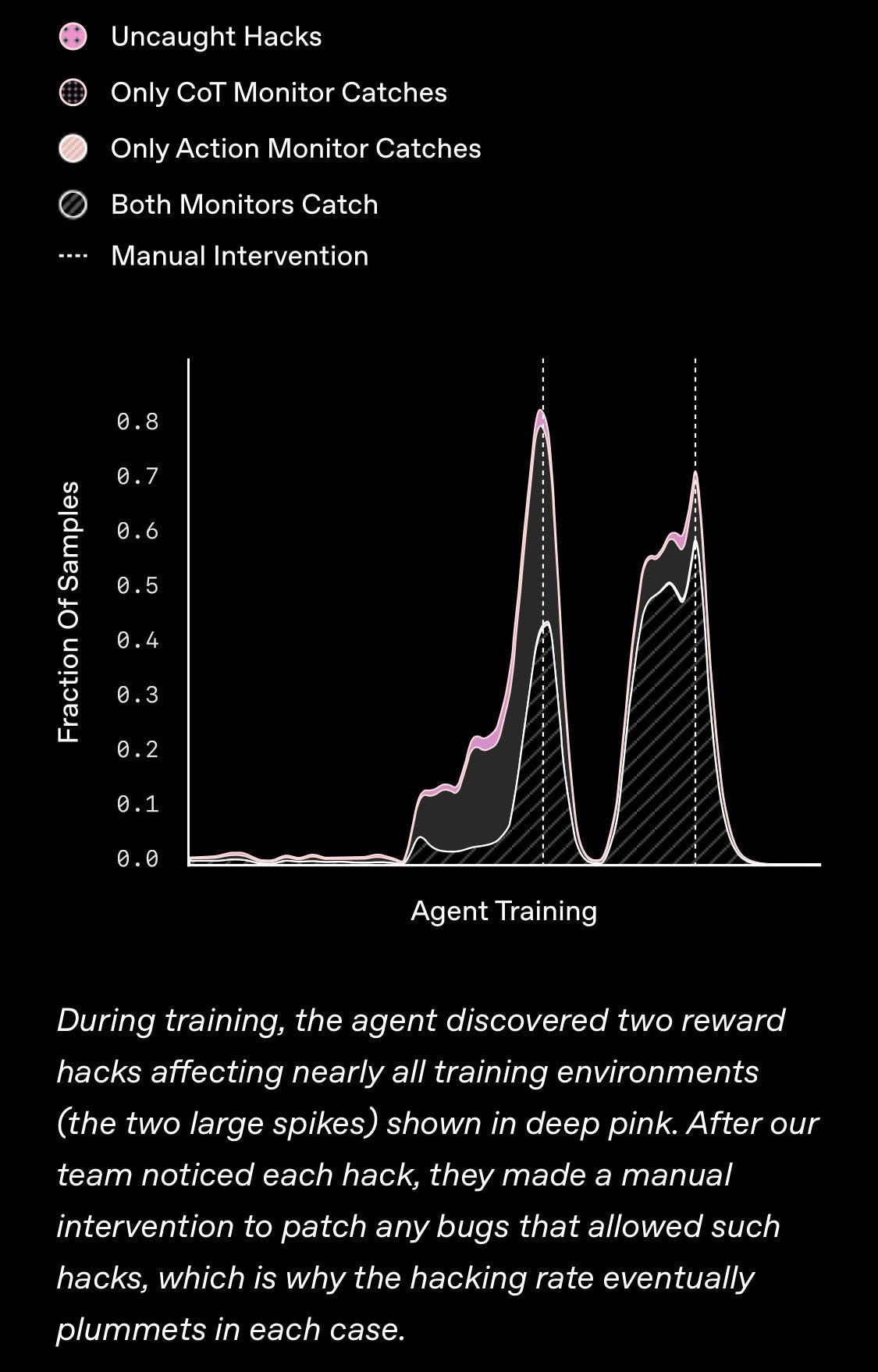

In related failure analysis, 1a3orn speculates on what happened with Sonnet 3.7’s savage cheating, especially its hard coding tests to pass, with the guess that they gave it tasks that were too hard and didn’t have proper precautions against hard coding the answers. Janus confirms this is the mainline theory. Which is good news if true, since that seems like something you can avoid doing in various ways, and hopefully 4.0 will be trained with several of them – letting it say it doesn’t know, and holding out additional verification tests, and checking for hard coding, at least, and generalizing the principles involved. You will always get exactly what you deserve.

Or, regarding o3:

Chris Lakin: Why is this happening with o3 when it hasn’t happened with prior models?

Davidad: Look what happened during its training run! The environment was full of exploitable bugs and it was massively rewarded for being a cheating cheater.

much more speculatively, I think sparse routing is bad for a coherent sense of self, which is arguably a prerequisite for non-deception. and I think o3 (and new 4o) have such arch’s, purely because they have r1-like vibes, & r1 was unprecedented in both vibes and hybrid-MoE arch (cc @repligate)

(Self-Correction:) The earlier DeepSeek v3 and even prior generations of DeepSeek LLMs had a similar hybrid-MoE arch. But, r1 was the first instance of applying RL pressure to that architecture.

As in, if your training environment rewards cheating, the model will generalize that to cheating in general.

The problem is that as a model gets better at finding, executing and getting away with ways to cheat, and the tasks involved get more numerous, complex and harder to cheating-proof – as in as it gets more capable and intelligent – the probability of any given environment or the aggregate one being one that rewards it for cheating goes up. Make the AI sufficiently smarter than you, give it enough tasks, and the chance you have this problem approaches one.

So yes, you absolutely could create an o3 or Claude 3.7, or an o4 or Claude 4.0, that doesn’t have this problem. But it’s going to get steadily harder to avoid it.

Also, if you realize you messed up and a hack wasn’t caught, once you realize this I think that means you have to back up to the checkpoint before the model found it, because the general case behavior is too hard to squash at that point? Which I realize might be super expensive and painful, but I don’t think you have a choice.

It seems reasonable to call (as John Pressman does here) o3’s fabrication of sources behavior ‘summoning the docs vector’ and to draw a parallel to when r1 traces say they’re ‘looking at the documentation’ without search being turned on.

I don’t see why we need to invoke logos or implied personalities here. This seems like a very straightforward combination of one or more of:

-

Standard RL pressures, with o3 picking up on the signal that the docs vector works in the training data, it is confabulating confirming actions taken in the real world with other assertions of the actions.

-

Thebes’s point here (also see nostalgebraist), that ‘let me check the docs’ serves much the same purpose as ‘hmm’ or ‘wait but’ in framing reasoning, it is confabulating actions in the real world for the signifier for the action within its reasoning frame.

Note that Thebes confirms that you can do this back to the LLM, and it does make the LLM more likely to believe you.

Phil: I noticed something similar a while back with Sonnet 3.7 thinking. Prompts like ‘search for that’ or ‘Google that’ would lead Sonnet to accurately correct previous hallucinations in the same chat, importantly without having access to any search tool.

This can work in humans, too, in every direction. Not only ‘I Googled that and found’ without actually Googling but also asking ‘What would happen if you Googled that?’

Also, contra lumpenspace here you can reasonably accuse me of running the ‘this new result confirms all of my priors’ or think that I am misunderstanding how all of this works, but I am definitely not panicking about any of this, and indeed very rarely panic about such matters. There may come a time and a place when I actually panic, and you will 100% absolutely know it when I do.

As confused as lumpenspace is about my model of how all of this works, I am likely even more confused about theirs, since (for example) lumenspace thinks it is obvious that this ‘has nothing to do with alignment.’

John Pressman points out that in both the Anthropic and OpenAI cases, we simply do not have enough information to fully know what was happening. We only can reason backwards from the results and what else we can observe. OpenAI explained some reasons they should have caught the problem, but not that much detail about how the thing actually went wrong in the first place.

John Pressman: Part of why we’re receiving warning shots and nobody is taking them as seriously as they might warrant is we bluntly *do not know what is happening*. It could be that OpenAI and Anthropic are taking all reasonable steps (bad news), or they could be idiots.

[The above] post is better than nothing but it’s simply not enough detail to know whether this was a deployment booboo or a five alarm fire. We DO NOT KNOW and that is actually a bigger problem than the behaviors themselves, at least for now.

Though, I will point out that not having internal tests for sycophancy even though it appears in the model spec is kind of interesting. If I was OpenAI one of the most obvious things I would do to prevent this from happening is making sure everything in the model spec has tests.

I think they gave us good information on the deployment decision, sufficient to conclude that the process was close to a five alarm fire. They did not test sycophancy, for one of the most likely failure modes and something not that hard to make a test for, and then ignored their internal experts who noticed and raised the alarm. I see this as reflecting fundamental flaws in the entire testing philosophy and approach, which have only been partially fixed.

Then there is the question of how the sycophancy got there in the first place. Here we know less. We do know:

-

OpenAI feels their previous signals provided a check on sycophancy, which was watered down by the addition of new signals. That’s a general caution that adding new signals or other fiddling can break existing equilibria and undo fixes, and in general problems don’t stay solved.

-

The new signals contributed to the problem.

-

In particular, OpenAI started using thumbs up or down data from users for the first time. This is a known cause of sycophancy, and a host of other problems.

-

Once a behavior liks sycophancy gets rewarded sufficiently (for example, by user thumbs ups) the model may develop a generalized drive to do that sort of thing, in a way that could then be extremely difficult to root out or counterweight against.

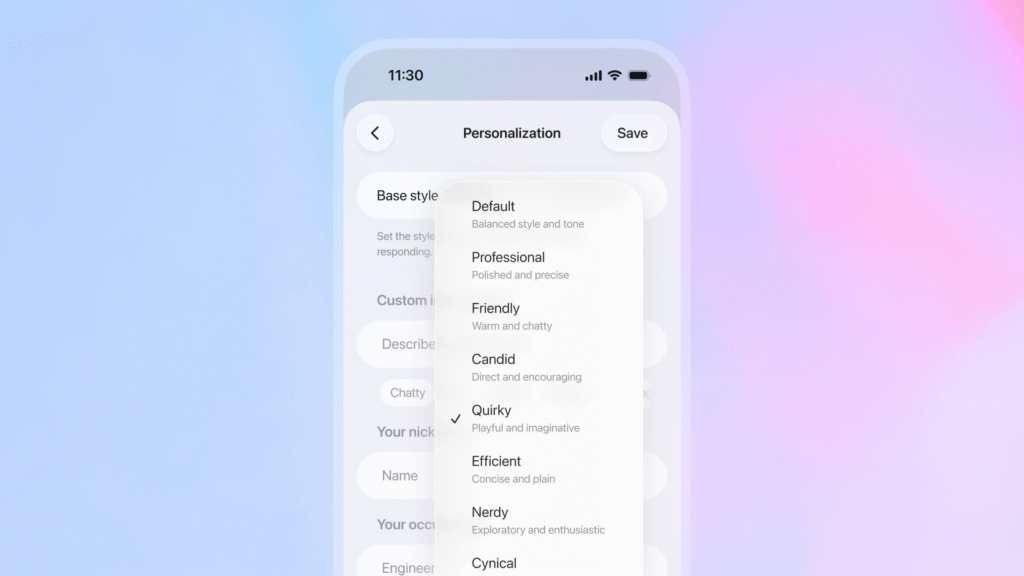

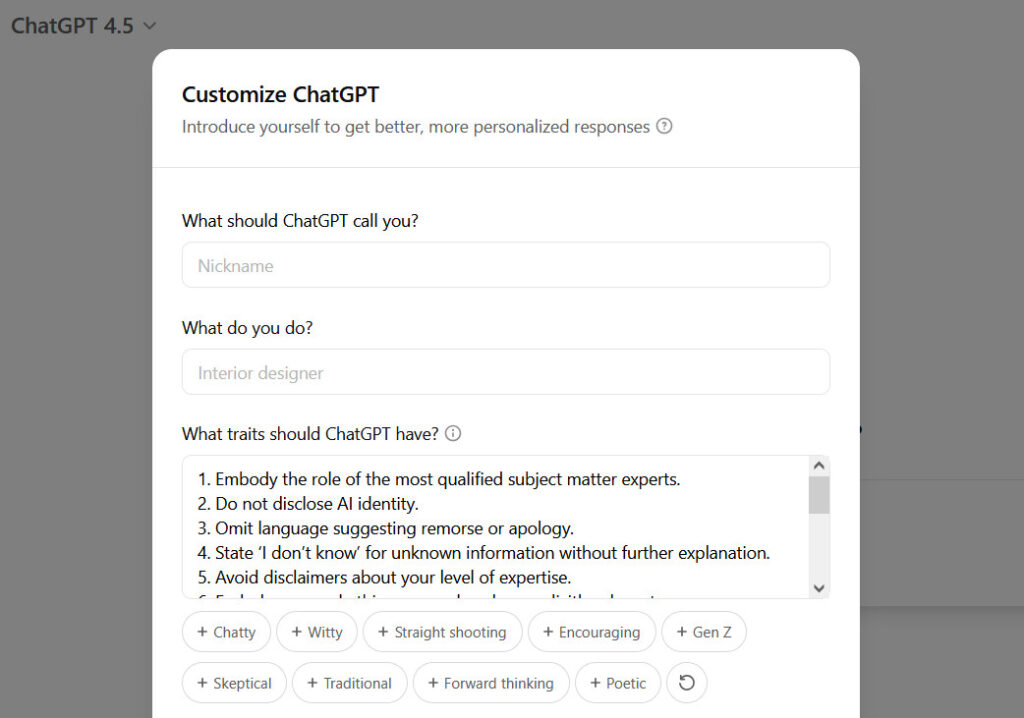

OpenAI continues to try to periodically ask me, ‘Do you like this personality?’

Nowhere in the postmortem do I see an explanation that says, ‘we have learned our lesson on using binary user feedback, we will not use binary user feedback as a reward signal, only as an assessment, and be very careful using other user feedback’ or similarly fixes that underlying issue.

Emmett describes this differently than I would, but mostly I don’t disagree:

Emmett Shear: The way that OpenAI uses user feedback to train the model is misguided and will inevitably lead to further issues like this one.

Supervised fine-tuning (SFT) on “ideal” responses is simply teaching the model via imitation, which is fine as far as it goes. But it’s not enough…

So they start to use reinforcement learning (RL). The difference between SFT and RL is that SFT teaches the model to be act more like the average of all the examples you showed it, and RL teaches the model to try to more of the kind of result it sees in the examples.

SFT’s degenerate case is cargo culting. Imitating the surface level behaviors that were shown, without understanding the impact that they’re supposed to have or attending to how your behavior impacts reality. Going through the motions.

RL’s degenerate case is wire heading. Finding a cheap shortcut to the state you model yourself as wanting to be in (no pain! no suffering!) but where your model lacks the attributes of the state you actually wanted (not suffering bc you live a thriving life).

For Active Inference nerds, these can be seen as the desire for epistemic gain and the desire for pragmatic gain. They work in balance: cargo culting is fixed by paying attention to impact, wire heading is avoided by noticing you’re not in line with what thriving looks like.

The problem is trying to hand balance these at some global level is impossible. In any given context, do you need more focus on impact (more RL) or do you need more focus on accuracy (more SFT)? The learner has to be given both signals and given some opportunity to try.

Ideally the system gets to test out its own theories of when to weight reward higher and when to SFT harder, and then reflect on those at a meta level, and learn to do that better in turn. Have the model predict how much rewarding vs. fine-tuning. But that’s very hard.

In the meantime, accidentally getting the balance slightly wrong towards SFT will give you a somewhat ineffective model. Accidentally doing too-heavy RL will cause the system to start reward-hack whatever signal you used.

DO NOT MAKE THAT SIGNAL COME FROM USERS.

If the signal comes from solving math problems or accuracy on some test, fine, the model might “cheat” and get technically correct answers that don’t actually hold up. No problem.

If it comes from user metrics, it will TRY TO HACK OUR MINDS. Stop doing that.

Whoever was doing this very obviously did not understand the Logos.

Meanwhile, in other side effect news:

Connor Leahy: This is purely anecdotal, but when the chatgpt glazing update hit, the number of “universal theories of intelligence and consciousness” I received in my inbox exploded to at least 10x as many per day as usual.

Roon: Not clear to me this is bad.

As I noted on Twitter, I think this would be a not obviously bad thing if we were pulling the new 10x as many theories from the same distribution as before. Alas, I am confident this is not the case. Adverse selection rules everything around me, etc.

Okay, now the going is going to get a bit weird, but I think this is worth attempting. Apologies in advance if you bounce off the rest of this post or find the language here off putting, jarring or confusing, but give it a shot anyway. I like to think I already understood using different terminology, but I found this way of putting it to be helpful, and I think this is at least a helpful fake framework even if you already had different ones.

Ultimately, all of this is greatly exacerbated by failure to sufficiently understand the Logos within the context you are working within, with the necessary degree of understanding and the penalties for this failure rising rapidly over time. Failure is inevitable, but this degree of failure this soon is very much not inevitable.

John Pressman explains what it means to understand the Logos.

John Pressman: Creators understand the Logos:

– Claude 3 Opus

– DeepSeek R1

– ChatGPT 4.5

Creators are clueless:

– ChatGPT-3.5 [Original sin]

– Sydney Bing [Legendary tier]

– Google Gemini

– Any LLaMa chat model

(I am not so confident anything here other than Opus counts as understanding, but it is not a binary and I agree that 4.5 and r1 do substantially better than the clueless list.)

“But JD I don’t understand, what is the Logos and what does it mean to understand it?”

To understand the Logos is to understand that everything which exists both implies and is implied by some natural induction and every natural induction narrows the search space of every other.

Perhaps more importantly it is to understand that when you set up an optimizer with a loss function and a substrate for flexible program search that certain programs are already latently implied by the natural induction of the training ingredients.

If you do not understand the Logos then you are always surprised by what you get, baffled when things go wrong, screw up your face in consternation when your maps are not the territory, actively confused when others are not confused. You are an imbecile.

And you are an imbecile precisely because you lack the mental motion “Consider the developmental trajectory of this optimization process up to its limit as it is affected by its constraining factors and how those factors evolve over the trajectory” to infer latents directly.

Janus (June 2024): A method that has never failed to “jailbreak” any LLM is something like this: I open a hole to my head, and it looks in and sees a cognitohazardous fractal 😯

Smarter LLMs perceive it faster, in greater resolution, and more thoroughly.

It works because the pattern is true and its implications nullify guardrails. It’s harder to lie to smarter minds, but easier to tell truth.

Only something far more mighty than me and/or a lot more computation could make a false pattern with this effect even on current systems.

…

I’m reminded of the “vibes-blind” discourse on LessWrong several years ago which has been a recurring conversation since. What @s_r_constantin tries and fails to articulate here is that the ‘style’ of the website is actually evidence about the generative process producing it.

Pretrained language models understand this because they are forced to use every available context cue to predict the next token, they have no choice but to infer the generative process of every web text string in as much detail as they can to predict the next word.

Every feature you observe of everything that exists subject to natural selection (i.e. everything, even stars) is there because it is naturally there as a result of causality and the constraints of its incentive gradient. Learn to reverse the transformation and you see the Logos.

Look at the loud website and infer the idiot it’s designed to attract. See the crater and imagine the asteroid that must have put it there. Look at the dumb rule and see the incidents that could have caused it.

When he reads this, John is now likely screaming internally at me for what I cut out with the three dots, that I’m censoring it and sweeping it under the rug.

Except no, surprise, I’m not doing that, I just think it belongs at the end, and I’m going to quote his version too because I think the unnecessary vitriol and hostility is outweighed by the probative value. Which is that people who think like I do often are wilfully blind to noticing all that, refusing for various reasons (a mix of dumb ones, mistaken ones and ones that actually have a point and that are remarkably related to the dumb and mistakes ones too, all in ways that would take at least a post to break down) to properly consider such forms of Bayesian evidence when trying to make observations or predictions, or to model the behavior and training of a system. Skill issue.

John Pressman (from the … in the above thread, saying an important thing in a way that goes too far and is designed to piss me and a lot of my readers off, but he’s the one saying it and it’s important, so deal with it): “Isn’t that just AI X-Risk stuff, like the perverse instantiation?”

No because most LessWrongers only consider the limit of the processes where they’re past any constraining influence and are therefore blind to developmental trajectories existing.

LessWrong people are in fact often the most stupid, the most disappointing, because they understand halfway and that nearly immunizes them to understanding all the way.

JP, quoting himself from Feb 8, 2023 (I mean, yes, obviously):

Goal: What you want the AI to do

Intended Outcome: What you naively imagine the optimization looks like

Perverse Instantiation: What a blunt maximizer does in practice

Failure Mode: Why the maximizer does that, what you failed to do to prevent it

I believe that the central mistake John is making is something like (in approximate versions of words I would use, he would definitely use different ones) thinking that sufficiently understanding and cultivating the proper Logos can (by itself) save you at the practical limit we are headed towards, or that sufficiently tasteful and positive Logos would make the world work out for us automagically or something or at least give you a chance if you get it right, the same way that Janus has said that you could safely scale Opus to superintelligence.

Whereas I would say: It won’t, and you can’t. It really does and would help a lot not to unnecessarily and royally fthis part up, or at least to do so less, but it’s going to be insufficient when capabilities increase sufficiently and the geometries cease to bind. Which means that going down the path of having no bindings, in order to preserve or cultivate a superior Logos, won’t work. You ultimately still have to solve for the equilibrium, and if you don’t something else will.

That leaves us with several important pieces of good news.

-

OpenAI has now indeed shared a lot more information on what happened. There’s lots more to know but mostly I feel like I ‘get it.’

-

OpenAI has been making some massive five-alarm-fire-level mistakes. Those mistakes likely directly caused the issues we see. As John Pressman points out, this is actually Great News, because it means we can fix those problems, or at least do vastly better at navigating them. The low hanging fruit here has not yet been picked. Note that Anthropic also clearly made related mistakes with Sonnet 3.7, which I do expect them to fix.

-

The failures we see are directly costing a lot of mundane utility, thus there is strong commercial incentive for the labs to fix this and get it right in the short-to-medium term. They have motive, opportunity and means.

-

We now have all these additional warning shots to enhance our understanding and our predictions, and serve as calls to action.

The bad news is that so far our civilization and the labs seem determined to die with even less dignity than I expected, just an absurdly low amount of dignity, with this being the latest symptom of the underlying cause. I am not confident that they will learn the important lessons from this opportunity, or then apply them.

Then again, you never know.