D-Wave quantum annealers solve problems classical algorithms struggle with

The latest claim of clear quantum supremacy solves a useful problem.

Right now, quantum computers are small and error-prone compared to where they’ll likely be in a few years. Even within those limitations, however, there have been regular claims that the hardware can perform in ways that are impossible to match with classical computation (one of the more recent examples coming just last year). In most cases to date, however, those claims were quickly followed by some tuning and optimization of classical algorithms that boosted their performance, making them competitive once again.

Today, we have a new entry into the claims department—or rather a new claim by an old entry. D-Wave is a company that makes quantum annealers, specialized hardware that is most effective when applied to a class of optimization problems. The new work shows that the hardware can track the behavior of a quantum system called an Ising model far more efficiently than any of the current state-of-the-art classical algorithms.

Knowing what will likely come next, however, the team behind the work writes, “We hope and expect that our results will inspire novel numerical techniques for quantum simulation.”

Real physics vs. simulation

Most of the claims regarding quantum computing superiority have come from general-purpose quantum hardware, like that of IBM and Google. These can run a wide range of algorithms, but have been limited by the frequency of errors in their qubits. Those errors also turned out to be the reason classical algorithms have often been able to catch up with the claims from the quantum side. They limit how many qubits can be entangled at once, allowing classical algorithms that focus on interactions among neighboring qubits to simulate the hardware’s behavior reasonably well.

In any case, most of these claims have involved quantum computers that weren’t solving any particular problem, but rather simply behaving like a quantum computer. Google’s claims, for example, are based around what are called “random quantum circuits,” which is exactly what it sounds like.

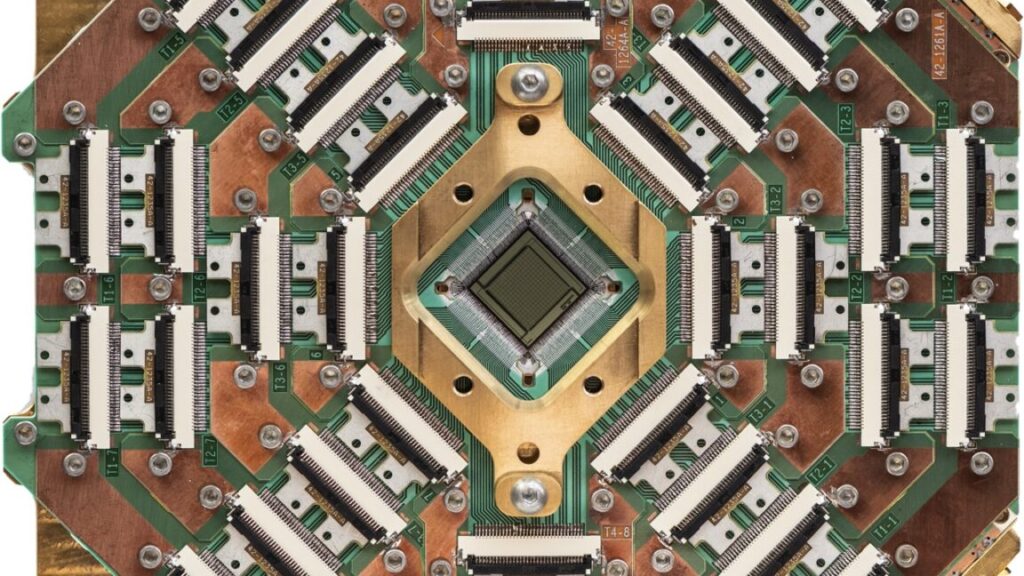

Off in its own corner is a company called D-Wave, which makes hardware that relies on quantum effects to perform calculations, but isn’t a general-purpose quantum computer. Instead, its collections of qubits, once configured and initialized, are left to find their way to a ground energy state, which will correspond to a solution to a problem. This approach, called quantum annealing, is best suited to optimization problems, such as finding the best arrangement for a complex schedule.
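To make the “ground state equals answer” idea concrete, here is a minimal Python sketch using number partitioning, a textbook example of mapping a problem onto an Ising-style energy. It is our choice of illustration, not something taken from D-Wave’s paper or software, and the function names are hypothetical. Each number gets a spin of +1 or -1, the energy is the square of the signed sum, and a zero-energy ground state splits the numbers into two groups with equal totals. The sketch finds that ground state by brute force over all 2^n configurations, which is exactly the kind of exhaustive search an annealer is meant to sidestep by physically relaxing toward low energy.

```python
from itertools import product


def partition_energy(numbers, spins):
    """Ising-style energy (sum_i a_i * s_i)^2 for spins s_i in {-1, +1}.

    Zero energy means the +1 group and the -1 group have equal sums.
    """
    return sum(a * s for a, s in zip(numbers, spins)) ** 2


def ground_state(numbers):
    """Exhaustively check all 2^n spin configurations for the lowest energy.

    Only feasible for tiny inputs; the cost doubles with every added number.
    """
    return min(
        product((-1, 1), repeat=len(numbers)),
        key=lambda spins: partition_energy(numbers, spins),
    )


numbers = [4, 5, 6, 7, 8]
best = ground_state(numbers)
group_a = [a for a, s in zip(numbers, best) if s == 1]
group_b = [a for a, s in zip(numbers, best) if s == -1]
print(partition_energy(numbers, best), group_a, group_b)
```

Here the lowest-energy configuration puts 7 and 8 on one side and 4, 5, and 6 on the other, both summing to 15.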

D-Wave was likely the first company to experience a “we can outperform classical” claim followed by an “oh no you can’t” from algorithm developers, and since then it has generally been far more circumspect. In the meantime, a number of companies have put D-Wave’s computers to use on problems that align with where the hardware is most effective.

But on Thursday, D-Wave will release a paper that will once again claim, as its title indicates, “beyond classical computation.” And it will be doing it on a problem that doesn’t involve random circuits.

You sing, Ising

The new paper describes using D-Wave’s hardware to compute the evolution over time of something called an Ising model. A simple version of this model is a two-dimensional grid of objects, each of which can be in two possible states. The state that any one of these objects occupies is influenced by the state of its neighbors. So, it’s easy to put an Ising model into an unstable state, after which values of the objects within it will flip until it reaches a low-energy, stable state. Since this is also a quantum system, however, random noise can sometimes flip individual objects, so the system will continue to evolve over time. You can also connect the objects into geometries that are far more complicated than a grid, allowing more complex behaviors.
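A rough classical sketch of that relaxation is below, assuming a plain ferromagnetic 8×8 grid in which every neighboring pair prefers to align (the coupling `J`, the temperature, and the sweep count are hypothetical choices). It uses Metropolis dynamics: pick an object at random, flip it if that lowers the energy, and otherwise flip it only with a probability that shrinks as the energy cost grows relative to a temperature parameter, a stand-in for the random noise described above. This is a classical model; the quantum Ising dynamics D-Wave’s hardware follows involve effects this code leaves out.

```python
import math
import random


def grid_energy(spins, J=1.0):
    """Energy of a ferromagnetic Ising grid: -J * (sum of s_i * s_j over neighbor pairs).

    Uses open boundaries, so edge objects have fewer neighbors.
    """
    n = len(spins)
    energy = 0.0
    for r in range(n):
        for c in range(n):
            if r + 1 < n:
                energy -= J * spins[r][c] * spins[r + 1][c]
            if c + 1 < n:
                energy -= J * spins[r][c] * spins[r][c + 1]
    return energy


def metropolis_sweeps(spins, temperature, sweeps, rng, J=1.0):
    """Propose random single-object flips; accept downhill moves always and
    uphill moves with probability exp(-delta / temperature)."""
    n = len(spins)
    for _ in range(sweeps * n * n):
        r, c = rng.randrange(n), rng.randrange(n)
        neighbor_sum = 0
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < n and 0 <= cc < n:
                neighbor_sum += spins[rr][cc]
        delta = 2 * J * spins[r][c] * neighbor_sum  # energy change if this object flips
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            spins[r][c] *= -1
    return spins


rng = random.Random(0)
size = 8
spins = [[rng.choice((-1, 1)) for _ in range(size)] for _ in range(size)]
start = grid_energy(spins)
metropolis_sweeps(spins, temperature=0.5, sweeps=200, rng=rng)
end = grid_energy(spins)
print(f"energy before: {start}, after: {end}")
```

Starting from a random configuration, the energy should fall toward the fully aligned minimum of -112 (one unit for each of the grid’s 112 neighboring pairs), although leftover boundaries between aligned regions can keep it somewhat above that.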

Someone took great notes from a physics lecture on Ising models; they explain the models’ behavior and role in physics in more detail. But there are two things you need to know to understand this news. One is that Ising models don’t involve a quantum computer merely acting like an array of qubits—it’s a problem that people have actually tried to find solutions to. The second is that D-Wave’s hardware, which provides a well-connected collection of quantum devices that can flip between two values, is a great match for Ising models.

Back in 2023, D-Wave used its 5,000-qubit annealer to demonstrate that its output when performing Ising model evolution was best described using Schrödinger’s equation, a central way of describing the behavior of quantum systems. And, as quantum systems become increasingly complex, Schrödinger’s equation gets much, much harder to solve using classical hardware—the implication being that modeling the behavior of 5,000 of these qubits could quite possibly be beyond the capacity of classical algorithms.
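Why does the quantum version get so hard so fast? A back-of-the-envelope sketch shows the wall, assuming a brute-force approach that stores the full quantum state as one complex number (16 bytes, two 64-bit floats) for every possible configuration of n qubits, of which there are 2^n. This is not the method used in the new work, which tested approximate techniques precisely because exact storage like this is hopeless; the function name is hypothetical.

```python
def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory to store every one of the 2**n complex amplitudes of an n-qubit state.

    Assumes double-precision complex numbers (16 bytes each).
    """
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (10, 30, 50, 100):
    print(n, "qubits:", state_vector_bytes(n), "bytes")
```

By this count, 30 qubits already need 16 GiB, and 56 qubits need more than 700 petabytes. Five thousand qubits is far beyond anything that could physically be built.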

Still, having been burned before by improvements to classical algorithms, the D-Wave team was very cautious about voicing that implication. As they write in their latest paper, “It remains important to establish that within the parametric range studied, despite the limited correlation length and finite experimental precision, approximate classical methods cannot match the solution quality of the [D-Wave hardware] in a reasonable amount of time.”

So it’s important that they now have a new paper that indicates that classical methods in fact cannot do that in a reasonable amount of time.

Testing alternatives

The team, which is primarily based at D-Wave but includes researchers from a handful of high-level physics institutions from around the world, focused on three different methods of simulating quantum systems on classical hardware. These were put up against a smaller version of what will be D-Wave’s Advantage 2 system, which is designed to have higher qubit connectivity and longer coherence times than the company’s current Advantage system. The work essentially involved finding where the classical simulators bogged down as either the simulated time grew too long or the complexity of the Ising model’s geometry got too high (all while showing that D-Wave’s hardware could perform the same calculation).

Three different classical approaches were tested. Two of them involved tensor networks: one called MPS, for matrix product states, and the second called projected entangled-pair states (PEPS). They also tried a neural network, as a number of these have been trained successfully to predict the output of Schrödinger’s equation for different systems.
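Matrix product states store a many-qubit state as a chain of small tensors, one per object, linked by “bond” indices. Capping the size of those bonds (the bond dimension) is what keeps the method tractable, at the cost of limiting how much entanglement the chain can capture. A minimal sketch, assuming an open chain of two-state objects and a hypothetical cap called `max_bond`, counts how many numbers such an MPS stores; it is a generic illustration of the data structure, not the researchers’ implementation.

```python
def mps_bond_dims(num_sites, max_bond, local_dim=2):
    """Bond dimensions of an open-chain MPS, capped at max_bond.

    The bond after site k never needs to exceed local_dim**k or
    local_dim**(num_sites - k), the state-space sizes of the two halves.
    """
    return [
        min(max_bond, local_dim ** k, local_dim ** (num_sites - k))
        for k in range(1, num_sites)
    ]


def mps_parameter_count(num_sites, max_bond, local_dim=2):
    """Count stored complex numbers: site k holds a (left bond, local_dim, right bond) tensor."""
    bonds = [1] + mps_bond_dims(num_sites, max_bond, local_dim) + [1]
    return sum(bonds[k] * local_dim * bonds[k + 1] for k in range(num_sites))


# 64 two-state objects, as in an 8x8 grid, with a hypothetical bond cap of 64.
print(mps_parameter_count(64, 64), "numbers vs", 2 ** 64, "for the full state")
```

For 64 objects and a cap of 64, the MPS holds roughly 437,000 numbers instead of 2^64. The catch is that any state needing larger bonds gets approximated, and the approximation degrades as entanglement grows.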

These approaches were first tested on a simple 8×8 grid of objects rolled up into a cylinder, which increases the connectivity by eliminating two of the edges. And, for this simple system that evolved over a short period, the classical methods and the quantum hardware produced answers that were effectively indistinguishable.
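The cylinder geometry is easy to write down as a list of couplings. A minimal sketch, assuming that “rolled up” means each row of the 8×8 grid wraps around so its last object couples back to its first (the paper’s exact layout may differ), shows where the extra connectivity comes from. An open 8×8 grid has 112 neighboring pairs; wrapping the rows adds 8 more, leaving only the top and bottom rows with three neighbors instead of four. The function name is hypothetical.

```python
def cylinder_edges(rows, cols):
    """Nearest-neighbor couplings for a rows x cols grid rolled into a cylinder.

    Each row wraps around (last column couples to the first), removing the
    left and right edges; the top and bottom rows stay open. Assumes cols > 2,
    otherwise the wraparound would duplicate existing couplings.
    """
    def site(r, c):
        return r * cols + c

    edges = []
    for r in range(rows):
        for c in range(cols):
            edges.append((site(r, c), site(r, (c + 1) % cols)))  # around the cylinder
            if r + 1 < rows:
                edges.append((site(r, c), site(r + 1, c)))  # along the cylinder
    return edges


edges = cylinder_edges(8, 8)
degree = [0] * 64
for a, b in edges:
    degree[a] += 1
    degree[b] += 1
print(len(edges), min(degree), max(degree))
```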

Two of the classical algorithms, however, were relatively easy to eliminate from serious consideration. The neural network provided good results for short simulations but began to diverge rapidly once the system was allowed to evolve for longer times. And PEPS, which focuses on local entanglement, failed as entanglement spread across ever-larger portions of the system. That left MPS as the sole classical contender as more complex geometries were run for longer times.

By identifying where MPS started to fail, the researchers could estimate the amount of classical hardware that would be needed to allow the algorithm to keep pace with the Advantage 2 hardware on the most complex systems. And, well, it’s not going to be realistic any time soon. “On the largest problems, MPS would take millions of years on the Frontier supercomputer per input to match [quantum hardware] quality,” they conclude. “Memory requirements would exceed its 700PB storage, and electricity requirements would exceed annual global consumption.” By contrast, it took a few minutes on D-Wave’s hardware.
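One way to see why MPS eventually runs out of road: a bond of dimension χ can carry at most log2(χ) bits of entanglement between the two parts of the chain it connects, so a state with S bits of entanglement across a cut needs χ of at least 2^S, and each tensor’s memory grows with χ². Entanglement typically grows as a quantum system evolves, which is roughly why longer simulations hurt. The sketch below turns that into arithmetic; the chain length and entanglement values are hypothetical illustrations, not the paper’s estimates, and the memory figure is a crude upper bound that stores every site at the full bond size.

```python
import math


def min_bond_dimension(entropy_bits):
    """Smallest bond dimension able to hold `entropy_bits` of entanglement
    across one cut (a bond of size chi carries at most log2(chi) bits)."""
    return 2 ** math.ceil(entropy_bits)


def mps_bytes(num_sites, bond_dim, local_dim=2, bytes_per_entry=16):
    """Crude memory estimate: every site stored as a full
    bond_dim x local_dim x bond_dim tensor of 16-byte complex numbers."""
    return num_sites * bond_dim * local_dim * bond_dim * bytes_per_entry


# Hypothetical 500-object chain at increasing levels of entanglement.
for bits in (10, 20, 30):
    chi = min_bond_dimension(bits)
    print(f"{bits} bits -> bond dimension {chi}, ~{mps_bytes(500, chi) / 1e15:.3g} PB")
```

Under these assumptions, 20 bits of entanglement already implies roughly 18 petabytes, and 30 bits pushes the estimate far past Frontier’s 700PB.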

Again, in the paper, the researchers acknowledge that this may lead to another round of optimizations that bring classical algorithms back into competition. And apparently those began as soon as a draft of this paper was placed on the arXiv. At a press conference held as this report was being prepared, one of D-Wave’s scientists, Andrew King, noted that two preprints describing improvements to classical algorithms have already appeared on the arXiv.

While these allow classical simulations to reproduce more of the results demonstrated in the new paper, they don’t tackle the most complicated geometries, and they are limited to shorter times and fewer total qubits. Nature talked to one of the people behind these algorithm improvements, who was optimistic that they could eventually replicate all of D-Wave’s results using non-quantum algorithms. D-Wave, obviously, is skeptical. And King said that a new, larger Advantage 2 test chip with over 4,000 qubits available had recently been calibrated, and that he had already tested even larger versions of these same Ising models on it, ones that would be considerably harder for classical methods to catch up to.

In any case, the company is acting like things are settled. During the press conference describing the new results, people frequently referred to D-Wave having achieved quantum supremacy, and its CEO, Alan Baratz, in responding to skepticism sparked by the two draft manuscripts, said, “Our work should be celebrated as a significant milestone.”

Science, 2025. DOI: 10.1126/science.ado6285

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
