Meta pirated and seeded porn for years to train AI, lawsuit says

Evidence may prove Meta seeded more content

Seeking evidence to back its own copyright infringement claims, Strike 3 Holdings searched “its archive of recorded infringement captured by its VXN Scan and Cross Reference tools” and found 47 “IP addresses identified as owned by Facebook infringing its copyright protected Works.”

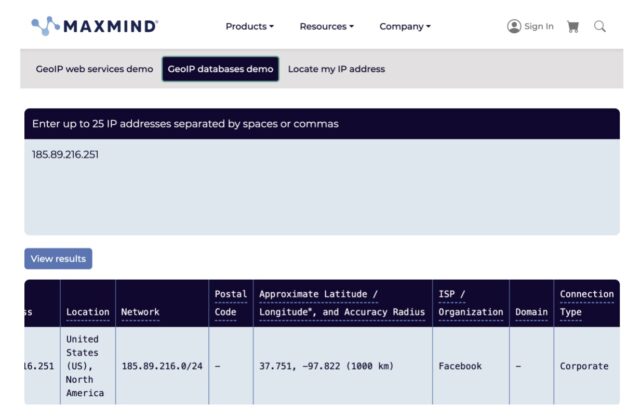

The data allegedly demonstrates a “continued unauthorized distribution” over “several years.” And Meta allegedly did not stop its seeding after Strike 3 Holdings confronted the tech giant with this evidence, despite the IP data supposedly being verified through an industry-leading provider called MaxMind.

Strike 3 Holdings shared a screenshot of MaxMind’s findings. Credit: via Strike 3 Holdings’ complaint

Meta also attempted to “conceal its BitTorrent activities” through “six Virtual Private Clouds” that formed a “stealth network” of “hidden IP addresses,” the lawsuit alleged, seemingly implicating a “major third-party data center provider” as a partner in Meta’s piracy.

An analysis of these IP addresses allegedly found “data patterns that matched infringement patterns seen on Meta’s corporate IP Addresses” and included “evidence of other activity on the BitTorrent network including ebooks, movies, television shows, music, and software.” The seemingly non-human patterns documented on both sets of IP addresses suggest the data was for AI training and not for personal use, Strike 3 Holdings alleged.

Perhaps most shockingly, considering that a Meta employee joked “torrenting from a corporate laptop doesn’t feel right,” Strike 3 Holdings further alleged that it found “at least one residential IP address of a Meta employee” infringing its copyrighted works. That suggests Meta may have directed an employee to torrent pirated data outside the office to obscure the data trail.

The adult site operator did not identify the employee or the major data center discussed in its complaint, noting in a subsequent filing that it recognized the risks to Meta’s business and its employees’ privacy of sharing sensitive information.

In total, the company alleged that evidence shows “well over 100,000 unauthorized distribution transactions” linked to Meta’s corporate IPs. Strike 3 Holdings is hoping the evidence will lead a jury to find Meta liable for direct copyright infringement or, if the jury finds that Meta successfully distanced itself by using the third-party data center or an employee’s home IP address, for secondary and vicarious copyright infringement.

“Meta has the right and ability to supervise and/or control its own corporate IP addresses, as well as the IP addresses hosted in off-infra data centers, and the acts of its employees and agents infringing Plaintiffs’ Works through their residential IPs by using Meta’s AI script to obtain content through BitTorrent,” the complaint said.
