It is monthly roundup time.

I invite readers who want to hang out and get lunch in NYC later this week to come on Thursday at Bhatti Indian Grill (27th and Lexington) at noon.

I plan to cover the UBI study in its own post soon.

I cover Nate Silver’s evisceration of the 538 presidential election model, because we cover probabilistic modeling and prediction markets here, but excluding any AI discussions I will continue to do my best to stay out of the actual politics.

Jeff Bezos’ rocket company Blue Origin files comment suggesting SpaceX Starship launches be capped due to ‘impact on local environment.’ This is a rather shameful thing for them to be doing, and not for the first time.

Alexey Guzey reverses course, realizes at 26 that he was a naive idiot at 20 and finds everything he wrote cringe and everything he did incompetent and Obama was too young. Except, no? None of that? Young Alexey did indeed, as he notes, successfully fund a bunch of science and inspire good thoughts and he stands by most of his work. Alas, now he is insufficiently confident to keep doing it and is in his words ‘terrified of old people.’ I think Alexey’s success came exactly because he saw people acting stupid and crazy and systems not working and did not then think ‘oh these old people must have their reasons,’ he instead said that’s stupid and crazy. Or he didn’t even notice that things were so stupid and crazy and tried to just… do stuff.

When I look back on the things I did when I was young and foolish and did not know any better, yeah, some huge mistakes, but also tons that would never have worked if I had known better.

Also, frankly, Alexey is failing to understand (as he is still only 26) how much cognitive and physical decline hits you, and how early. Your experience and wisdom and increased efficiency are fighting your decreasing clock speed and endurance and physical strength and an increasing set of problems. I could not, back then, have done what I am doing now. But I also could not, now, do what I did then, even if I lacked my current responsibilities. For example, by the end of the first day of a Magic tournament I am now completely wiped.

Google short URLs are going to stop working. Patrick McKenzie suggests prediction markets on whether various Google services will survive. I’d do it if I were less lazy.

This is moot in some ways now that Biden has dropped out, but being wrong on the internet is always relevant when it impacts our epistemics and future models.

Nate Silver, who now writes Silver Bulletin and runs what used to be the old actually good 538 model, eviscerates the new 538 election model. The ‘new 538’ model had Biden projected to do better in Wisconsin and Ohio than either the fundamentals or his polls, which makes zero sense. It places very little weight on polls, which makes no sense. It has moved towards Biden recently, which makes even less sense. Texas is their third most likely tipping point state, it happens 9.8% of the time, wait what?

At best, Kelsey Piper’s description here is accurate.

Kelsey Piper: Nate Silver is slightly too polite to say it but my takeaway from his thoughtful post is that the 538 model is not usefully distinguishable from a rock with “incumbents win reelection more often than not” painted on it.

Gil: worse, I think Elliott’s modelling approach is probably something like max_(dem_chance) [incumbency advantage, polls, various other approaches].

Elliott’s model in 2020 was more bullish on Biden’s chances than Nate and in that case Trump was the incumbent and down in the polls.

Nate Silver (on Twitter): Sure, the Titanic might seem like it’s capsizing, but what you don’t understand is that the White Star Line has an extremely good track record according to our fundamentals model.

At worst, the model is bugged or incoherent, or a finger is on the scale. And given the debate over Biden stepping aside, this could have altered the outcome of the election. It still might have, if it delayed Biden’s resignation, although once you get anywhere near this far ‘the Sunday after the RNC’ is actually kind of genius timing.

I have done a lot of modeling in my day. What Nate is doing here is what my culture used to refer to as ‘calling bullshit.’ I would work on a model and put together a spreadsheet. I’d hand it off to my partner, who would enter various numbers into the input boxes, and look at the outputs. Then we’d get on the phone and he’d call bullshit: He’d point out a comparison or output somewhere that did not make sense, that could not be right. Usually he’d be right, and we’d iterate until he could not do that anymore. Then we might, mind you I said might, have a good model.
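Part of that partner's job can be mechanized. Below is a minimal sketch, assuming a forecast model can be called as a function of a single input (here a hypothetical poll margin, in points): sweep the input and flag every adjacent pair where a better input produced a worse output. `sane_model` and `buggy_model` are made-up toy models for illustration, not 538's model or anyone else's.

```python
import math
from typing import Callable


def monotonicity_violations(
    model: Callable[[float], float], inputs: list[float]
) -> list[tuple[float, float]]:
    """Return adjacent input pairs (a, b), a < b, where model(b) < model(a)."""
    xs = sorted(inputs)
    return [(a, b) for a, b in zip(xs, xs[1:]) if model(b) < model(a)]


def sane_model(margin: float) -> float:
    """Hypothetical toy: win probability rises smoothly with the poll margin."""
    return 1 / (1 + math.exp(-margin / 3))


def buggy_model(margin: float) -> float:
    """Hypothetical toy with a sign bug: abs() makes bad polls look good."""
    return sane_model(abs(margin))


margins = [-4, -2, 0, 2, 4]
print(monotonicity_violations(sane_model, margins))   # []
print(monotonicity_violations(buggy_model, margins))  # [(-4, -2), (-2, 0)]
```

A real model has many inputs and many relationships that must hold; monotonicity in one input is just the cheapest one to test, and a check like this only finds the bullshit you thought to look for.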

Another thing you could have done was to look at the market, or now the market history, since ‘things may have changed by the time you read this’ indeed.

Thus, no, I do not need to read through complex Bayesian explanations on various modeling assumptions to know that the 538 forecast here is bonkers. If it produces bonkers outputs, then it is bonkers. If the topline number seemed bonkers, but all the internals made sense and the movements over time made sense and one could be walked through how that produces the final answer, that would be one thing.

But no, these outputs are simply flat out bonkers. The model does not much care about the things that matter most, it does not respond reasonably, it has outputs in places that were so pro-Biden as to look like bugs. Ignore such Obvious Nonsense.

It is also important because when they replace Biden, with Harris or otherwise, there is a good chance they will still make similar mistakes.

As noted above, I will continue to cover modeling and prediction markets, and tracking how the candidates relate to AI, and continue doing my best to avoid otherwise covering the election. You’ll get enough of that without me.

My current view of the market is that Harris is modestly cheap (undervalued) at current prices, but Trump is still the favorite, and we will learn a lot soon when we actually have polling under ‘it’s happening’ conditions.

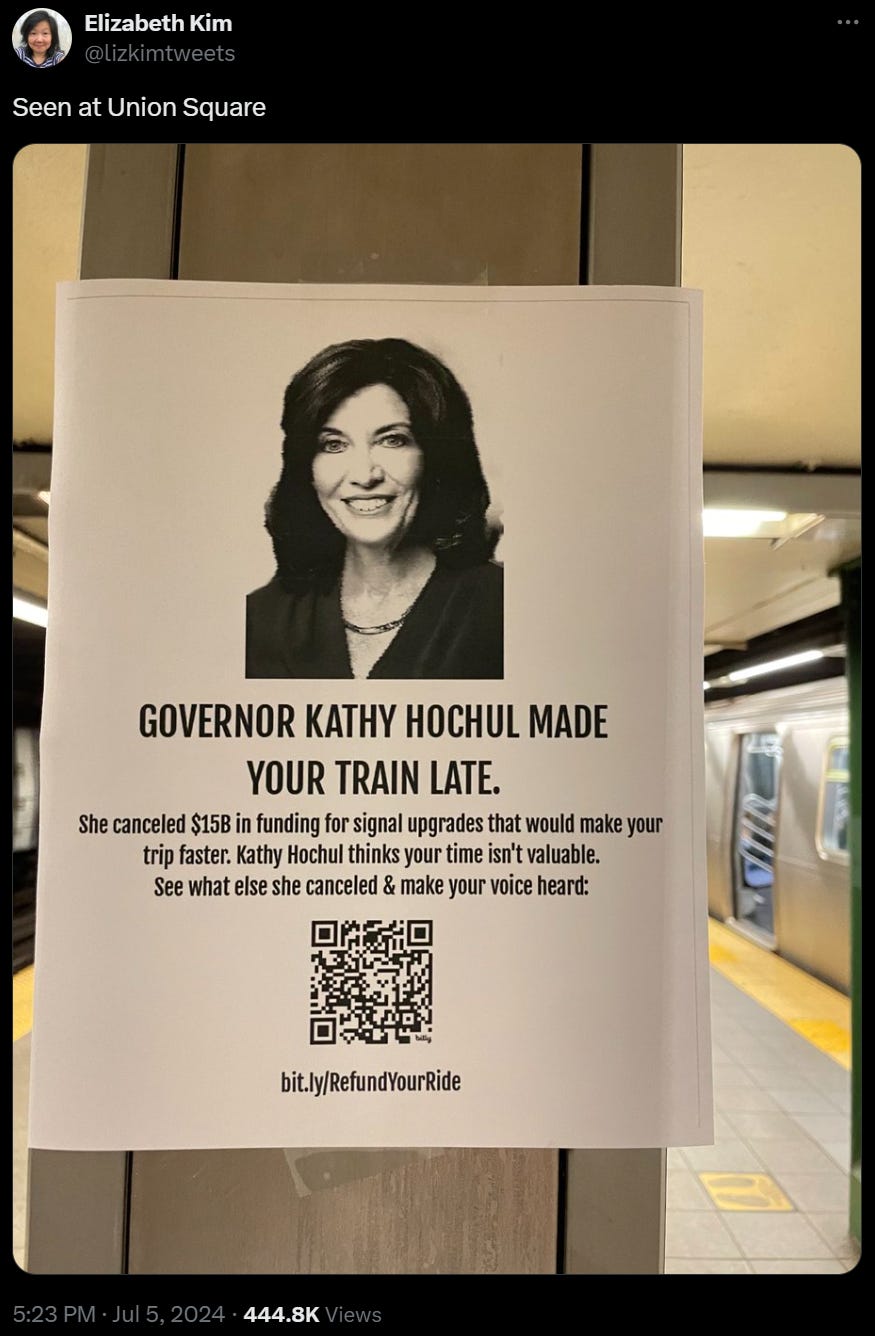

Shame.

The beatings will continue until we have congestion pricing or a new governor.

We actually do want a 24-hour coffee shop and bookstore (with or without a cat, and 18 hours gets you 95% of the value), or the other nice things mentioned in the Josh Ellis thread here. We say we do, and in some ways we act like we do. We still don’t get the things, because our willingness to pay directly says otherwise.

There are many similar things that genuinely seem to make our lives way better, that warm our hearts by their mere existence and optionality. That people actively want to provide, if they could. Yet they are hard to find, because they cannot pay the rent.

You can have your quaint bookstore, on one condition, which is paying a lot more, directly, for some combination of a membership, the books and the coffee.

Instead, we are willing to pay quite a lot more for the house three blocks from the bookstore, because we recognize its value. But if the bookstore charged us half that money directly, we would refuse to pay. It ruins the thing. So the owners of land get rich and the bookstore gets driven out.

I have to remind myself of this constantly. I pay a lot in fixed costs to live in a place I love, including the extra taxes. Then I constantly have the urge to be stingy about actually paying for many of the things that make me want to live here. It is really hard not to do this.

Magic players drive this point home. You plan for a month, pay hundreds for cards, pay hundreds for the plane ticket and hundreds more for the hotel, work to qualify and train, in a real sense this is what you live for… and then complain about the outrageous $100 entry fee or convention fee.

This is so much of why we cannot have nice things. It is not that we do not have a willingness to pay in the form of having less money. It is that we think those things ‘should cost’ a smaller amount, so when they cost more, it ruins the thing. It is at core the same issue as not wanting to buy overpriced wires at the airport.

The CrowdStrike incident was covered on its own. These are other issues.

Least surprising headlines department: Identity-verifier used by Big Tech amid mandates has made personal data easily accessible to hackers.

AU10TIX told 404 Media that the incident was old and credentials were rescinded—but 404 Media found that the credentials still worked as of this month. After relaying that information, AU10TIX “then said it was decommissioning the relevant system, more than a year after the credentials were first exposed on Telegram.”

If you require age verification to safeguard privacy, this will predictably have a high risk of backfiring.

Nearly all AT&T customer records were breached in 2022. The breach has now been leaked to an American hacker in Turkey. This includes every interaction those customers made, and all the phone numbers involved. Recall that in March 2024 data from 73 million AT&T accounts leaked to the dark web. So yes, we need to lock down the frontier AI labs yesterday.

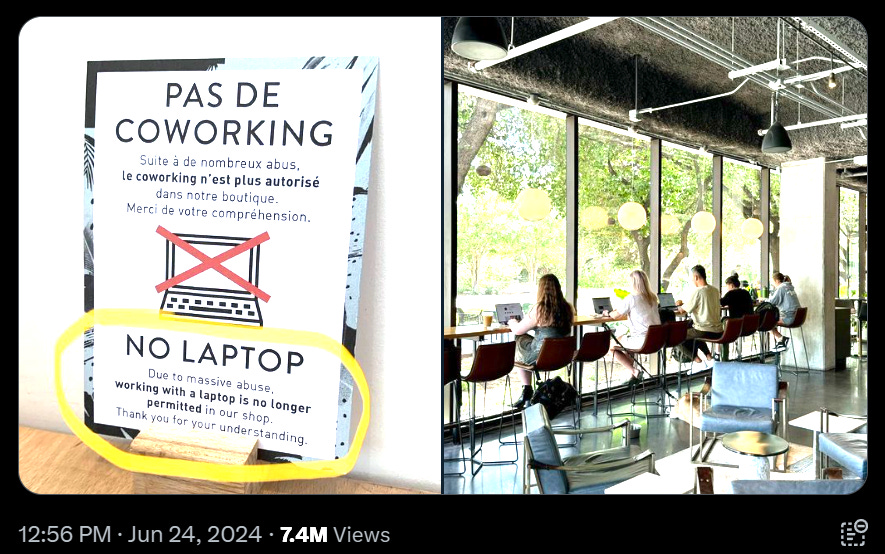

Beware the laptop trap.

Samo Burja: When I first saw the laptop practice in San Francisco I assumed people worked with laptops in cafes because their houses were crowded with too many roommates to save on rent and offices to save on startup runway.

I had no idea people in LA and NYC did this too.

Unless you’re in San Francisco I don’t think your laptop work is adding to GDP. Use cafes to meet friends.

Marko Jukic: European cafes are 100% right to ban “coworking” i.e. staring silently at my electronic device screen for hours on end while pretending to work and taking up space in a public place intended for relaxation and socializing.

Don’t let Americans turn the cafe bar into an office!

The picture on the right above depicts a hellish anti-social prison-like atmosphere. In a cafe, I want to hear music, conversation, laughter, and the football game.

It’s a CAFE, not a library, not an office, not a university lecture hall. Leave your laptop at home.

Americans will complain endlessly how America lacks “third spaces” and enjoyable public life but then like the idea of turning European cafes into sterile workspaces where professional laptop-typers sit in silent rows avoiding eye contact pretending to do important work.

Levelsio: The difference between European and American cafes is so stark

In Europe many don’t allow laptops anymore

In America they usually do and people are working on something cool!

I am with the French here. The cafe is there to be a cafe. If you want to work, you can go to the office, and seriously don’t do it on a laptop, you fool. I do not care if you are in San Francisco.

Marko Jukic claims that what distinguishes others from ‘normies’ is mainly not that normies are insufficiently intelligent, but that normies have astounding and incurable cowardice, especially intellectual cowardice but also cowardice about taking risks in life in general.

Marko Jukic: Spending time with our young elites at university, in Silicon Valley, etc. I never got the impression that intelligence was lacking. Far from it. What was lacking was everything else necessary to use that intelligence for noble and useful ends. In a way this is much worse.

Actually practicing personal loyalty, principled self-sacrifice, or critical thinking in a way that isn’t camera-ready is not just uncommon or frowned-upon but will get you treated like a deranged, dangerous serial killer by average cowards. It’s actually that bad these days.

To return to the original point, thinking your own thoughts is barely a drop in the bucket of courage. But most don’t even have that drop. Important to keep that in mind when you model society, social technology, reforms, and “the public” or “the normies” or whatever.

We are certainly ‘teaching cowardice’ in many forms as a central culture increasingly over time. It is a major problem. It is also an opportunity. I do not buy the part where having courage gets you attacked. It is not celebrated as much as it used to be, this is true. And there are places where people will indeed turn on you for it, either if you make the wrong move or in general. However, that is a great sign that you want to be in different places.

Note that even in places where rare forms of courage are actively celebrated, such as in the startup community, there are other ways in which being the ‘wrong kind of’ courageous and not ‘getting with the program’ will get you this same reaction, and you will be treated as someone not to ally with. The principle is almost never properly generalized.

To answer Roon’s request here: No.

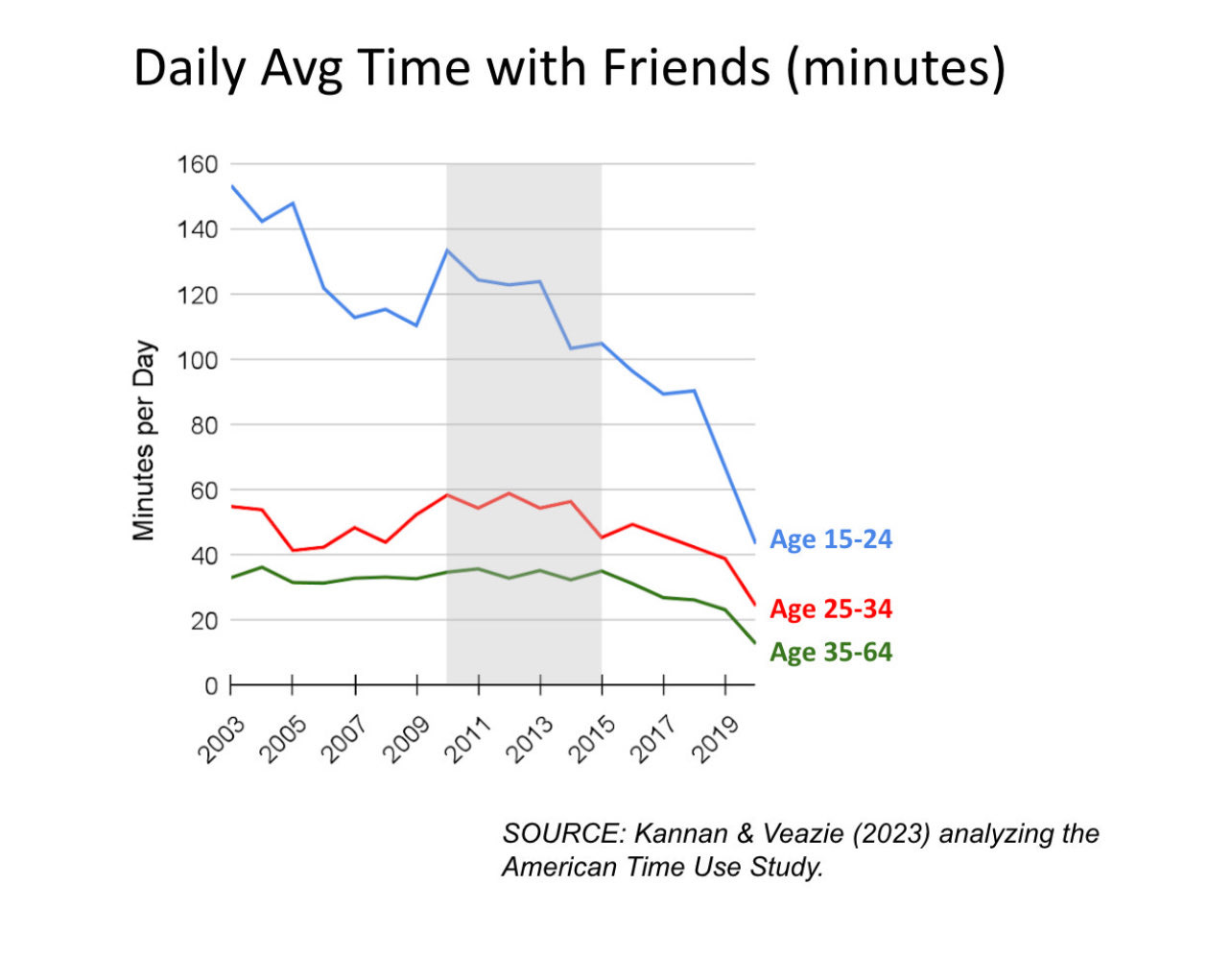

Mark Carnegie: If you don’t think this is a crisis i don’t know what to say to you.

Roon: cmon man now adjust the graph with the amount of time people spend texting or in their GCs.

Suhail: Yeah, we’re more connected, not less connected.

No. We really, really aren’t more connected. No, time spent texting or especially in ‘group chats’ is not a substitute for time spent with friends. Indeed, the very fact that people sometimes think it is a substitute is more evidence of the problem. Is it something at all? Yes. It is not remotely the same thing.

Tyler Cowen asks, what is the greatest outright mistake by smart, intelligent people, in contrast to disagreements.

His choice is (drum roll): attempting to forcibly lower prescription drug prices. Here’s the post in full.

Tyler Cowen: I am not referring to disagreements, I mean outright mistakes held by smart, intelligent people. Let me turn over the microphone to Ariel Pakes, who may someday win a Nobel Prize:

Our calculations indicate that currently proposed U.S. policies to reduce pharmaceutical prices, though particularly beneficial for low-income and elderly populations, could dramatically reduce firms’ investment in highly welfare-improving R&D. The U.S. subsidizes the worldwide pharmaceutical market. One reason is U.S. prices are higher than elsewhere.

Tyler Cowen: That is from his new NBER working paper. That is supply-side progressivism at work, but shorn of the anti-corporate mood affiliation.

I do not believe we should cancel those who want to regulate down prices on pharmaceuticals, even though likely they will kill millions over time, at least to the extent they succeed. (Supply is elastic!) But if we can like them, tolerate them, indeed welcome them into the intellectual community, we should be nice to others as well. Because the faults of the others probably are less bad than those who wish to regulate down the prices of U.S. pharmaceuticals.

Please note you can favor larger government subsidies for drug R&D, and still not want to see those prices lowered.

He has amusingly gone on to compare those making this mistake to ‘supervillains.’

A lot of people thought this was all rather absurd. The greatest mistake is failure to choose to vastly systematically overpay for something while everyone else gets it dirt cheap, because otherwise future investment would be reduced?

I think this points to what may actually be the gravest genuine mistake, which is:

Causal Decision Theory!

As in, you base your decision on what has the best consequences, rather than choosing (as best you can) the decision algorithm with the best consequences after considering every decision (past, present and future, yours and otherwise) that correlates with your decision now.

Alternatively, you could view it as the desire to force prices to appear fair, the instinct against gouging, which is also involved and likely a top 10 pick.

The debate over pharma prices is indeed a great example of how this messes people up.

Everyone else except America is defecting, refusing to pay their fair share to justify the public good of Pharma R&D. One response is that this sucks, but America needs to step up all the more. Another is that if people can defect without punishment knowing others will pick up the slack then they keep doing so, indeed if you had not indicated this to them you would not be in this position now.

On top of that, you are paying off R&D that already happened in order to hold out the promise of reward for R&D in the future (and to some extent to create necessary cash flow). Locally, you are better off doing what everyone else does, and forcibly lowering prices rather than artificially raising them like we do. But if corporations expect that in the future, they will cut R&D.

So everyone is threatening us, and we are paying, so they keep threatening and we keep paying, but also this gives us strong pharma R&D.

You could say on top of the burden being unfairly distributed this is a really dumb way to support pharma R&D, and we should instead do a first best solution like buying out patents. I would agree. Tyler would I presume say, doesn’t matter, because we won’t possibly do this first best solution big enough to work, it is not politically feasible. And I admit he’d probably be right about that.

Another aspect is, suppose a corporation puts you in a position where you can improve welfare, or prevent welfare loss, but to do so you have to pay the corporation a lot of money, although less than the welfare improvement. And they engineered that, knowing that you would pay up. Should you pay? That is importantly the wrong framing of the question. The right question is what your policy should be on whether to pay. The policy should be that you pay to the extent that this means the corporations go out to seek large welfare improvements, balanced against how much they seek to engineer private gains, including by holding back much of the welfare benefits.

A lot of situations come down to divide-the-pie, various forms of the ultimatum game: there is $100, Alice decides how to divide it, and Bob either accepts the division or everyone gets nothing. At what point does Bob accept an unfair division? If Bob demands an unfair (or fair!) division, and Alice believes Bob, at what point does Alice refuse? And so on.

Another way of putting a lot of this is: You can think of yourself or a given action, often, as effectively ‘moving last,’ where you know what everyone will do conditional on your action. That does not mean you must or should do whatever gives you the best payoff going forward, because it is very easy to exploit those with such a policy.
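The divide-the-pie game can be sketched in a few lines. This is a minimal sketch, assuming the proposer knows the responder's policy in advance and offers whole dollars; both responder policies, including the threshold of 40, are hypothetical. A responder who ‘moves last’ and accepts anything that beats nothing gets offered $1, while one committed to rejecting lowball splits gets more, even though actually rejecting would be locally worse for them.

```python
from typing import Callable

Policy = Callable[[int], bool]  # responder's rule: offer -> accept?


def best_response(pie: int, accepts: Policy) -> tuple[int, int]:
    """Proposer picks the offer maximizing her own payoff, given the responder's policy.

    Returns (proposer_payoff, responder_payoff); (0, 0) if no offer beats nothing.
    """
    best = (0, 0)
    for offer in range(pie + 1):
        if accepts(offer) and pie - offer > best[0]:
            best = (pie - offer, offer)
    return best


def moves_last(offer: int) -> bool:
    """Accepts any offer that beats getting nothing."""
    return offer > 0


def committed(offer: int) -> bool:
    """Hypothetical policy: rejects any offer below 40 out of 100."""
    return offer >= 40


print(best_response(100, moves_last))  # (99, 1)
print(best_response(100, committed))   # (60, 40)
```

The point is that the responder's payoff is determined by the policy the proposer expects, not by the choice made once the offer is on the table.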

What does that imply about the motivating example? I think the answer is a lot less obvious or clean than Tyler thinks it is, even if you buy (as I mostly buy) the high value of future marginal pharma R&D.

Next up we have another reason you need functional decision theory.

Agenda setting is powerful when you model everyone else as using naïve Causal Decision Theory. If you get to propose a series of changes to be voted upon, you can in theory with enough steps get anything you want.

We model legislative decision-making with an agenda setter who can propose policies sequentially, tailoring each proposal to the status quo that prevails after prior votes. Voters are sophisticated and the agenda setter cannot commit to future proposals.

Nevertheless, the agenda setter obtains her favorite outcome in every equilibrium regardless of the initial default policy. Central to our results is a new condition on preferences, manipulability, that holds in rich policy spaces, including spatial settings and distribution problems. Our findings therefore establish that, despite the sophistication of voters and the absence of commitment power, the agenda setter is effectively a dictator.

Those voters do not sound terribly sophisticated. Rather, those voters sound profoundly unsophisticated.

Fool me once, shame on you. Fool me twice, can’t get fooled again.

An actually sophisticated voter would say that the agenda setter, if allowed to pass anything that is a marginal improvement for 51% of voters, effectively becomes a dictator. The proof is easy, you don’t need a paper: you could for example repeatedly propose to transfer $1 from the 49% to the 51%, while always being part of the 51%, and repeat until you have almost all the money, using that money periodically to buy other preferences.

The thing is, a sophisticated voter would recognize what you were up to rather quickly. They would say ‘oh, this is a trick, I know that this benefits me on its face but I know where this leads.’ And a majority of them would start always voting no.
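Here is a toy version of that argument. It is a minimal sketch with hypothetical parameters, not the paper's model: 101 voters start with $100 each, voter 0 sets the agenda, and each proposal takes up to $2 from each of the 50 richest other voters, pays $1 to each of the 50 poorest, and gives the setter the rest. Naive voters vote yes whenever their own wealth goes up; ‘sophisticated’ voters reject anything that enriches the setter.

```python
def setter_share(n_voters: int = 101, start: int = 100, sophisticated: bool = False) -> float:
    """Toy agenda-setter game; returns the setter's final share of total wealth.

    Voter 0 is the setter. Assumes n_voters is odd so the other voters split evenly
    into bribed partners and losers.
    """
    wealth = [start] * n_voters
    others = list(range(1, n_voters))
    half = (n_voters - 1) // 2
    while True:
        ranked = sorted(others, key=lambda i: wealth[i])  # poorest first
        partners, losers = ranked[:half], ranked[half:]
        taken = {i: min(wealth[i], 2) for i in losers}     # up to $2 from each loser
        setter_gain = sum(taken.values()) - len(partners)  # after $1 to each partner
        if setter_gain <= 0:
            break  # nothing left worth extracting
        # Naive partners vote yes because their own wealth rises by $1; losers vote no.
        # Sophisticated voters reject anything that enriches the setter.
        yes_votes = 1 + (0 if sophisticated else len(partners))
        if 2 * yes_votes <= n_voters:
            break  # proposal fails, so the agenda stalls
        for i in losers:
            wealth[i] -= taken[i]
        for i in partners:
            wealth[i] += 1
        wealth[0] += setter_gain
    return wealth[0] / sum(wealth)


print(setter_share() > 0.9)                        # True
print(round(setter_share(sophisticated=True), 4))  # 0.0099
```

With naive voters, every single proposal passes with 51 votes and the setter ends up with nearly all the money. With voters who recognize the pattern, the first proposal fails and nothing moves.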

This is not merely a theoretical or ideal response. This is a case where economists and causal decision theorists and politicians look at regular people and call them ‘irrational’ for noticing such things and reacting accordingly. What’s the matter with Kansas?

This, from the agenda setter’s perspective, is the matter with Kansas. If you set the agenda to something that looks superficially good, but you having control of the agenda is bad, then I should vote down your agenda on principle, as you haven’t given me any other affordances.

That is not to say that the agenda setter is not powerful. Being the agenda setter is a big game. You do still have to maintain the public trust.

Roon weeps for the old Twitter. He blames the optimizations for engagement for ruining the kinds of communities and interactions that made Twitter great, reporting now his feed is filled with slop and he rarely discovers anything good, whereas good new discoveries used to be common.

I continue to be confused by all the people not strictly using the Following tab plus lists (or Tweetdeck), and letting the For You feed matter to them. Why do you do this thing? Also out of curiosity I checked my For You feed, and it’s almost all the same people I follow or have on my lists, except it includes some replies from them to others, and a small amount of very-high-view-count generic content. There’s no reason to use that feature, but it’s not a hellscape.

Roon: The beauty of twitter was the simcluster, where 90% of the tweets in my feed came from one of the many organic self-organizing communities i was part of. now it’s maybe 20%. I used to daily discover intelligent schizomaniacs, now they are diffuse among the slop.

Near: Human values are actually fully inconsistent with virality-maximizing algorithms ‘but revealed preferences!’ as a take fully misunderstands coordination problems any society can be burnt to the ground with basic game theory and the right algorithm. We should strive for better.

I see Twitter as having net declined a modest amount for my purposes, but it still mostly seems fine if you are careful with how you use it.

I do think that Roon and Near are right that, if this were a sane civilization, Twitter would not be trying so hard to maximize engagement. It would be run as a public good and a public trust, or an investment in the long term. A place to encourage what makes it valuable, with the trust that this would be what matters over time. If it made less (or lost more) money that way, well, Elon Musk could afford it, and the reputational win would be worth the price.

If you want to improve your Twitter game, I found this from Nabeelqu to be good. Here is how I do things there. Here is Michael Nielsen’s advice.

Your periodic reminder.

Brian Potter lays out the history of fusion, and the case for and against it being viable.

Scientists want to take more risks, and think science funding should generally take more risks. We need more ambitious projects. This paper points out a flaw in our funding mechanisms. The NIH, NSF and their counterparts make funding decisions by averaging peer review scores, whereas scientists say they would prefer to fund projects with more dissensus. This favors safe projects and makes it difficult to fund novel ideas. This is great news because it is relatively easy to fix by changing the aggregation function to put much less weight on negative reviews. Rule scientific ideas, like thinkers, in, not out.
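To see why the aggregation function matters, here is a minimal sketch on a hypothetical 1-10 scale where higher is better (real agency scales differ; NIH, for instance, scores lower as better). The two projects and the `downweight_negatives` rule, which shrinks below-neutral deviations to a quarter of their size, are illustrative assumptions, not the paper's proposal.

```python
from statistics import mean


def averaged(scores: list[float]) -> float:
    """Plain average of reviewer scores."""
    return mean(scores)


def downweight_negatives(
    scores: list[float], neutral: float = 5.0, neg_weight: float = 0.25
) -> float:
    """Hypothetical aggregator: below-neutral deviations count only neg_weight as much."""
    adjusted = [
        neutral + (s - neutral) * (neg_weight if s < neutral else 1.0) for s in scores
    ]
    return mean(adjusted)


projects = {"safe": [7, 7, 7, 7], "divisive": [10, 10, 3, 3]}
print(max(projects, key=lambda p: averaged(projects[p])))              # safe
print(max(projects, key=lambda p: downweight_negatives(projects[p])))  # divisive
```

The averaging rule funds the project everyone finds fine; the downweighted rule funds the one half the reviewers love, which is the ‘rule ideas in, not out’ behavior.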

Does the Nobel Prize sabotage future work?

Abstract: To characterize the impact of major research awards on recipients’ subsequent work, we studied Nobel Prize winners in Chemistry, Physiology or Medicine, and Physics and MacArthur Fellows working in scientific fields.

Using a case-crossover design, we compared scientists’ citations, publications and citations-per-publication from work published in a 3-year pre-award period to their work published in a 3-year post-award period. Nobel Laureates and MacArthur Fellows received fewer citations for post- than for pre-award work. This was driven mostly by Nobel Laureates. Median decrease was 80.5 citations among Nobel Laureates (p = 0.004) and 2 among MacArthur Fellows (p = 0.857). Mid-career (42–57 years) and senior (greater than 57 years) researchers tended to earn fewer citations for post-award work.

Early career researchers (less than 42 years, typically MacArthur Fellows) tended to earn more, but the difference was non-significant. MacArthur Fellows (p = 0.001) but not Nobel Laureates (p = 0.180) had significantly more post-award publications. Both populations had significantly fewer post-award citations per paper (p = 0.043 for Nobel Laureates, 0.005 for MacArthur Fellows, and 0.0004 for combined population). If major research awards indeed fail to increase (and even decrease) recipients’ impact, one may need to reassess the purposes, criteria, and impacts of awards to improve the scientific enterprise.

Steve Sailer (in the MR comments): I had dinner with Physics Laureate Robert Wilson, who had with Arno Penzias discovered the origin of the universe, a few months after Wilson won the Nobel in 1978. He was very gracious and polite as he was feted by his alma mater, Rice U., but deep down inside he probably wished he could have been back at his observatory tinkering with his radio telescope rather than doing all this kind of unproductive socializing you have to do after winning the Nobel.

Crusader (MR comments): Who ever said that major awards are supposed to increase the recipient’s future impact regardless of its merit?

Are Olympic gold medals supposed to increase the performance of athletes afterwards? Is a research award not just a status game carrot meant to incentivize the “first success” as well as a signal to others to review the related research?

Quite so. If you get a Nobel Prize then suddenly you have a ton of social obligations. The point of the prize is to give people something to aspire to win, not to enable those who win one to then do superior work. Also, scientists who win are typically old enough that their productivity has already peaked.

It seems odd to think about a Nobel Prize as being primarily about enabling future work. Even to suggest it is a huge indictment of our academic system – if you are up for a Nobel Prize, why didn’t you already have whatever resources and research agenda you most wanted?

Should scientific misconduct be criminalized? The slippery slope dangers are obvious. Yet it seems a violation of justice and also incentives that Sylvain Lesné, whose deception wildly distorted Alzheimer’s research, killing many and wasting epic amounts of time and money, remains at large. Can we simply charge him with fraud? If not, why the hell not?

Linch: Gender issues aside, it’s utterly bizarre to me that plagiarism is considered vastly worse among academics than faking data. It’s indicative pretty straightforwardly of rot imo, since it means the field as a whole cares more about credit attribution than about truth.

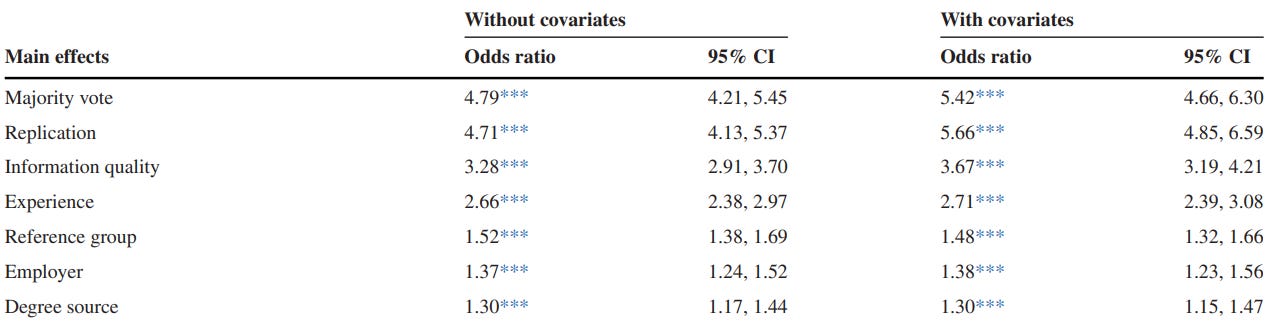

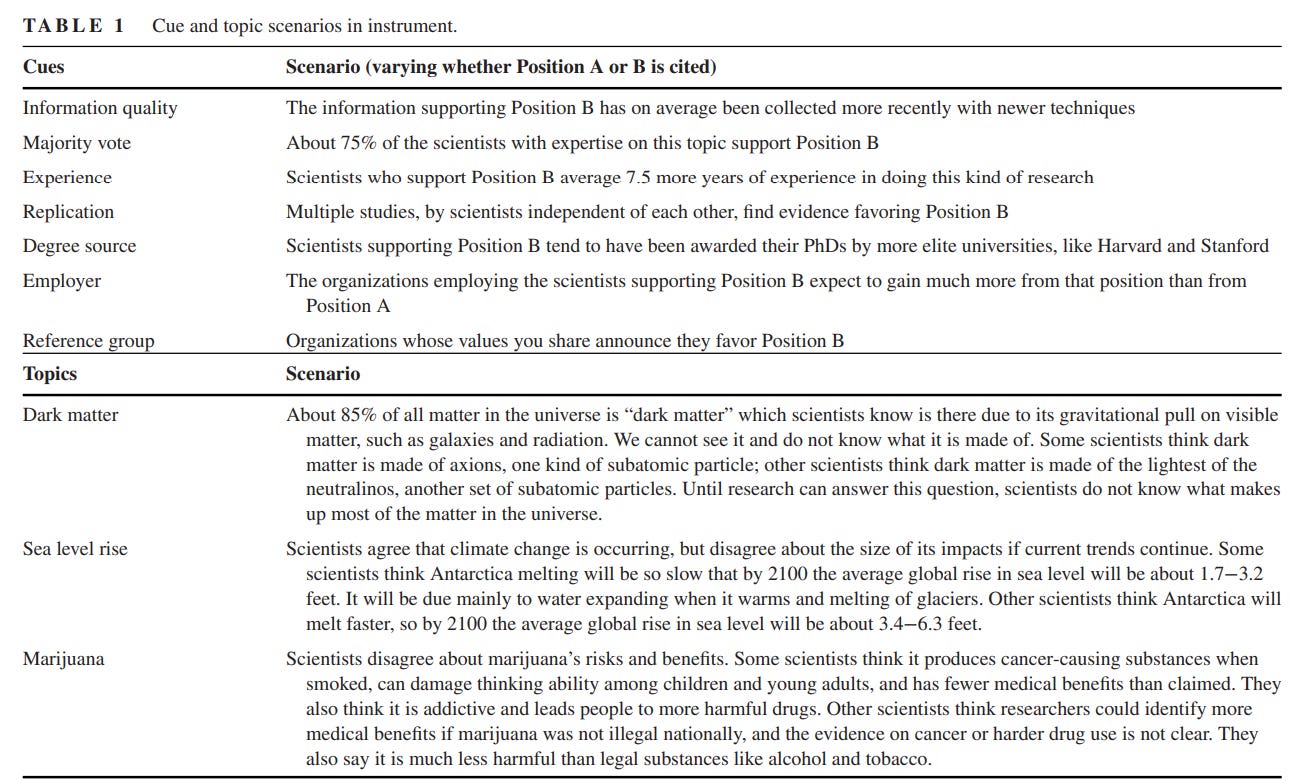

Paper asks how people decide who is correct when groups of scientists disagree. Here is the abstract.

Uncertainty that arises from disputes among scientists seems to foster public skepticism or noncompliance. Communication of potential cues to the relative performance of contending scientists might affect judgments of which position is likely more valid. We used actual scientific disputes—the nature of dark matter, sea level rise under climate change, and benefits and risks of marijuana—to assess Americans’ responses (n = 3150). Seven cues—replication, information quality, the majority position, degree source, experience, reference group support, and employer—were presented three cues at a time in a planned-missingness design. The most influential cues were majority vote, replication, information quality, and experience. Several potential moderators—topical engagement, prior attitudes, knowledge of science, and attitudes toward science—lacked even small effects on choice, but cues had the strongest effects for dark matter and weakest effects for marijuana, and general mistrust of scientists moderately attenuated top cues’ effects. Risk communicators can take these influential cues into account in understanding how laypeople respond to scientific disputes, and improving communication about such disputes.

The first sentence carries the odd implicit assumption that there is a ‘correct’ answer people should accept, the absence of which is skepticism or noncompliance. Then there’s describing various forms of Bayesian evidence as ‘cues,’ as opposed to considering the hypothesis that people might be weighing evidence about which position is true. The role of risk communicator seems to assume they already know what others are supposed to believe during scientific disputes. How do we use the right messaging to ensure the official scientists get believed over the unofficial ones?

Here are the results, all seven factors mattered.

Majority vote, replication and information quality and experience (where experience is defined as time doing this particular type of research), the most influential ‘cues,’ seem like excellent evidence to be using, with majority vote and replication correctly being used as the most important.

The other three are reference group support, degree source and employer. These seem clearly less good, although worth a non-zero amount. No, we should not rely too heavily on arguments from authority, and in particular not on arguments from association with authority.

Mistrust of science only decreased impact sizes by about 27%.

Score one for the public all around.

One thing I love about the paper is that in section 2.4.7 they lay out their predictions for which factors will be most important and how impacts are expected to work. Kudos.

Here are the detailed descriptions of the questions and cues.

Cues have the strongest effect on dark matter, a case where regular people have little to go on and know it and where everyone has reason to be objective. Marijuana leaves room for the most practical considerations, so any cues are competing with other evidence and it makes sense they have less impact.

Via Robin Hanson, across six studies, communicators who take an absolute honesty stance (‘it is never okay to lie’) and then lie anyway are punished less than those who take a flexible honesty stance that reflects the same actual behavior.

The straightforward explanation is that it is better for people to endorse the correct moral principles and to strive to live up to them and fail, rather than not endorse them at all. This helps enforce the norm or at least weakens it less, on several levels, and predicts better adherence and an effort to do so. With the same observed honesty level, one predicts more honesty both in the past and the future from someone who at least doesn’t actively endorse lying.

One can also say this is dependent on the lab setting and lack of repeated interaction. In that model, in addition to the dynamics above, hypocrisy has short term benefits and long term costs. If you admit to being a liar, you pay a very large one-time cost, then pay a much smaller cost for your lies beyond that, perhaps almost zero. If you say you always tell the truth, then you pay a future cost for each lie, which only adds up over the course of a long period.
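The short-term-benefit, long-term-cost tradeoff can be put in numbers. This is a minimal sketch with made-up parameters: admitting you lie costs a large one-time amount plus a small amount per lie, while claiming absolute honesty costs nothing up front but a full penalty per exposed lie. Later lies can optionally be discounted.

```python
def reputational_cost(n_lies: int, upfront: float, per_lie: float, discount: float = 1.0) -> float:
    """Present value of one upfront cost plus per-lie costs; lie t is weighted by discount**t."""
    return upfront + sum(per_lie * discount**t for t in range(n_lies))


def admitted_liar(n_lies: int, discount: float = 1.0) -> float:
    # Hypothetical: big one-time cost for admitting it, then little marginal cost per lie.
    return reputational_cost(n_lies, upfront=10.0, per_lie=0.1, discount=discount)


def hypocrite(n_lies: int, discount: float = 1.0) -> float:
    # Hypothetical: no upfront cost, but each exposed lie is punished in full.
    return reputational_cost(n_lies, upfront=0.0, per_lie=1.0, discount=discount)


for n in (5, 50):
    print(n, hypocrite(n) < admitted_liar(n))
# 5 True
# 50 False
print(hypocrite(50, discount=0.8) < admitted_liar(50, discount=0.8))  # True
```

Under these assumed numbers, hypocrisy is cheaper for a handful of lies, admitting it wins over a long run of them, and heavy discounting of the future tips things back toward hypocrisy.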

Certainly Trump is the avatar of the opposite strategy, of admitting you lie all the time and then lying all the time and paying very little marginal cost per lie.

In Bayesian terms, we estimate how often someone has lied to us and will lie in the future, and punish them in proportion to this, but also proportionally more if they take a particularly strong anti-lie stance. We also reward or punish them for their estimated effort to not lie and to enforce and encourage good norms, by both means.

In both cases, if you are providing only a few additional bits of evidence on your true base rate, hypocrisy is the way to go. If discount rates are low and you’re going to be exposed fully either way, then meta-honesty might be the best policy.
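The short-term-benefit, long-term-cost trade-off above can be made concrete with a toy present-value model. Every number here is a hypothetical illustration, not from the study: the upfront cost of admitting you lie, the per-lie penalties, one exposed lie per period, and the discount factors are all invented to show how the ranking flips as discounting weakens.

```python
def present_value_cost(upfront: float, per_lie: float, periods: int, discount: float) -> float:
    """One-time cost plus a per-lie penalty paid each period, discounted geometrically.

    Assumes (hypothetically) exactly one exposed lie per period.
    """
    return upfront + sum(per_lie * discount**t for t in range(periods))


def cheaper_stance(discount: float, periods: int = 100) -> str:
    # Hypothetical costs, not from the study: admitting you lie is a big one-time hit
    # with a small cost per later lie; claiming you never lie costs nothing upfront,
    # but each exposed lie costs more.
    admit = present_value_cost(upfront=50.0, per_lie=0.5, periods=periods, discount=discount)
    hypocrite = present_value_cost(upfront=0.0, per_lie=3.0, periods=periods, discount=discount)
    return "hypocrite" if hypocrite < admit else "admit"


for discount in (0.90, 0.99):
    print(discount, cheaper_stance(discount))
```

With heavy discounting (0.90) the stream of per-lie penalties never catches up with the upfront cost of admitting, so hypocrisy comes out cheaper; with a patient audience (0.99) it does, and admitting wins. Where the crossover sits depends entirely on the invented numbers.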

One can also ask if honesty is an exception here, and perhaps the pattern is different on other virtues. If you are exposed as a liar, and thus exposed as a liar about whether you are a liar, how additionally mad can I really get there? How much does ‘hypocrite’ add to ‘liar,’ which arguably is strictly stronger as an accusation?

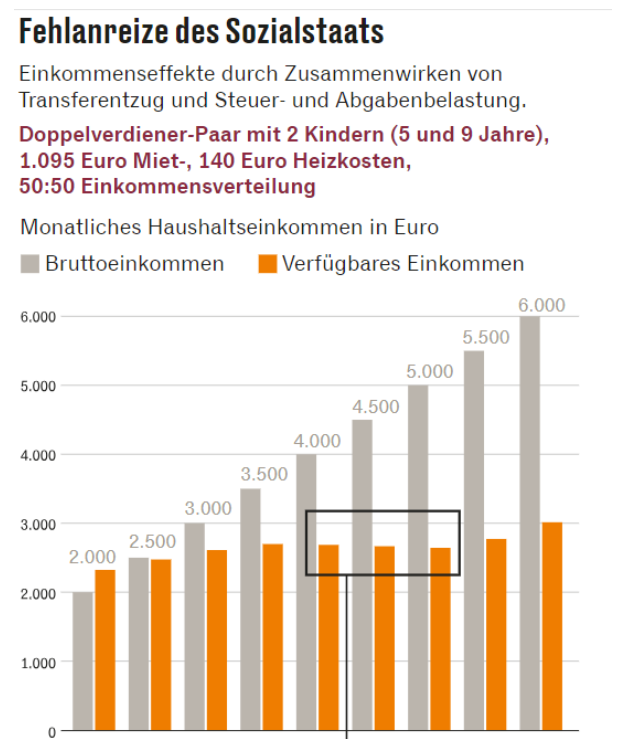

German marginal tax rates are a disaster and the poverty trap is gigantic.

The grey lines are euros per month. Orange is effective take-home pay. You essentially earn nothing by going from €25,800/year to €77,400/year. What the hell? The median income, around €45k, sits right in the middle of that range.

It is not as extreme as it sounds, because the benefits you get are not fully fungible. To get them you need to be renting, and to get max value it needs to be in a relatively expensive city, whereas the actual cash benefit is only 500 euros a month, which isn’t much. But still, yikes. This has to be a recipe for massive voluntary unemployment and black market work. To the extent that it isn’t, it is the German character being bizarrely unable to solve for this particular equilibrium.
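"Earning nothing" across a range of gross pay is the same as facing an effective marginal rate near 100% over that range. A minimal sketch of the arithmetic: the 25,800 and 77,400 per-year endpoints come from the chart description above, while the net take-home figures are hypothetical placeholders, not values read off the chart.

```python
def effective_marginal_rate(gross_low: float, gross_high: float,
                            net_low: float, net_high: float) -> float:
    """Share of each extra unit of gross pay lost to taxes, social charges and withdrawn benefits."""
    return 1.0 - (net_high - net_low) / (gross_high - gross_low)


# Annual endpoints from the text, converted to the chart's monthly scale.
gross_low, gross_high = 25_800 / 12, 77_400 / 12  # 2,150 and 6,450 per month
# Hypothetical household take-home pay (including benefits) at each endpoint,
# chosen to illustrate "essentially nothing" gained; not read off the chart.
net_low, net_high = 3_000.0, 3_100.0

rate = effective_marginal_rate(gross_low, gross_high, net_low, net_high)
print(f"marginal rate across the range: {rate:.0%}")
```

Gaining 100 a month of take-home pay for 4,300 a month of extra gross pay works out to a marginal rate of about 98%, far above Germany's top statutory income tax rate.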

jmkd: The wikipedia article (in German) below suggests that ~15% of the German economy is in “undeclared work.” Admittedly using numbers from different time periods, that would be equivalent to roughly 1/4 of the population working minimum wage.

yo: It’s a household-level view for a family of four. Roughly, if this family has no income, it is eligible for Bürgergeld, €24k/y. Plus a rent subsidy worth about the same €24k/y in the big cities, plus health insurance worth around €15k/y for that family. So yes, average families can get roughly €70k net welfare. Note that a family of four with €70k income would not pay much in taxes. But it would pay around 20% of this pretax income in social charges (mostly pension contributions and health insurance)

Oye cariño, ¿quieres comprar algunos créditos porno? (Hey darling, want to buy some porn credits?) Spain unveils the Digital Wallet Beta, an app for internet platforms to check before letting you watch porn. The EU is giving all porn sites until 2027 to stop you from watching porn, forcing kids (by that point) to download AI porn generators instead. Or have their AI assistant purchase some of those porn credits from ‘enthusiasts.’

Gian Volpicelli (Politico): Officially (and drily) called the Digital Wallet Beta (Cartera Digital Beta), the app Madrid unveiled on Monday would allow internet platforms to check whether a prospective smut-watcher is over 18. Porn-viewers will be asked to use the app to verify their age. Once verified, they’ll receive 30 generated “porn credits” with a one-month validity granting them access to adult content. Enthusiasts will be able to request extra credits.

While the tool has been criticized for its complexity, the government says the credit-based model is more privacy-friendly, ensuring that users’ online activities are not easily traceable.

While I oppose this on principle, I do approve of this for the kids all things being equal. You should have to work a bit for your porn, especially when you are young. I also like the VPN encouragement. The parts where various websites geoblock, adults get inconvenienced and identification information is inevitably stolen again as it was this past month? Those parts I do not like as much.

Should the UK use proportional representation? Tyler Cowen says no, because the UK needs bold action so it is good to give one party a decisive mandate even if they got only a third of the vote and essentially won because game theory and a relatively united left. See what they can do, you can always vote them out again. He does not much care about the voters not actually wanting Labour to rule any more than they did before. The point of democracy, in his view, is as a check in case government gets too out of line (and presumably a source of legitimacy), rather than ensuring ‘fairness.’

The danger is that an unfair system can damage those other goals too, and this seems like a lot of power to hand to those who get the upper hand in the game theory. Essentially everyone is locked in these ‘unite or die’ dilemmas constantly, as we are in America, except now there is an expectation that people might not unite. So I presume you need some form of runoff, approval or ranked choice voting. They are far from perfect, but so much less distortionary than actual first-past-the-post rules when those fail to collapse into a two-party system.
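The ‘unite or die’ dynamic is easiest to see in a toy three-candidate race. Below is a sketch comparing first past the post with instant runoff, one form of the ranked choice voting mentioned above, swapped in purely for illustration. The candidates and ballot counts are hypothetical, and ties are broken alphabetically only so the result is deterministic.

```python
from collections import Counter

# Hypothetical 100-voter race: A and B split one side, C holds the other.
example_ballots = (
    [("C", "B", "A")] * 40
    + [("A", "B", "C")] * 35
    + [("B", "A", "C")] * 25
)


def plurality_winner(ballots: list[tuple[str, ...]]) -> str:
    """First past the post: most first-choice votes wins, majority or not."""
    return Counter(ballot[0] for ballot in ballots).most_common(1)[0][0]


def irv_winner(ballots: list[tuple[str, ...]]) -> str:
    """Instant runoff: drop the last-place candidate and transfer ballots until someone has a majority."""
    remaining = {c for ballot in ballots for c in ballot}
    while True:
        counts = Counter({c: 0 for c in remaining})
        for ballot in ballots:
            for choice in ballot:
                if choice in remaining:  # each ballot counts for its top surviving choice
                    counts[choice] += 1
                    break
        leader, votes = counts.most_common(1)[0]
        if 2 * votes > sum(counts.values()) or len(remaining) == 1:
            return leader
        # Eliminate the weakest candidate; ties broken alphabetically for determinism.
        remaining.remove(min(sorted(remaining), key=lambda c: counts[c]))


print("plurality:", plurality_winner(example_ballots))
print("instant runoff:", irv_winner(example_ballots))
```

Under plurality, C wins with 40% because A and B split their side. Under instant runoff, B is eliminated first, B's ballots transfer to A, and A wins 60 to 40, so the split side no longer has to unite in advance.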

The FTC tried to ban almost all noncompetes, including retroactively. It is not terribly surprising that the courts objected. Judge Ada Brown issued a temporary block, finding that the FTC likely lacked the authority to make the rule, which seems like a very obviously correct observation to me.

Thom Lambert: Now that @FTC’s noncompete ban has been preliminarily enjoined (unsurprisingly), let’s think about some things the agency could do on noncompetes that are actually within its authority. It could, of course, bring challenges against unjustified noncompetes.

That would create some helpful precedent *and* allow the agency to amass expertise in identifying noncompetes that are unwarranted. (The agency implausibly claims that all but a very few noncompetes lack justification, but it has almost no experience with noncompete cases.)

It could also promulgate enforcement guidelines. If the guidelines really take account of the pros and cons of noncompetes (yes, there are pros) and fairly set forth how to separate the wheat from the chaff, they’ll have huge influence in the courts and on private parties.

These moves are admittedly not as splashy as a sweeping economy-wide ban, but they’re more likely to minimize error cost, and they’re within the agency’s authority. In the end, achievement matters more than activity.

This is the new reality even more than it was before.

- If you bring individual action against particular cases you can build up case law and examples.
- If you try to write a maximally broad rule, the courts are going to see to it you have a bad time.

There was a lot of talk about the overturning of Chevron, but there was another case that could also potentially be a big deal in making government work even less well. This is Ohio v. EPA, which is saying that if you ignore any issue raised in the public comments, then that can torpedo an entire project.

Robinson Meyer: Last week, the Court may have imposed a new and *extremely* high-scrutiny standard on how federal agencies respond to public comments. That will slow the EPA’s ability to write new rules, but it would also make NEPA even more arduous.

…

The EPA did respond to the comments at the center of the Ohio case, but Justice Neil Gorsuch, writing for the majority, decided the agency did not address a few specific concerns properly.

So the new procedure will be, presumably, to raise every objection possible, throw everything you can at the wall, then unless the government responds to each concern raised in each of the now thousands (or more) comments, you can challenge the entire action. And similarly, you can do the same thing with NEPA, making taking any action that much harder. Perhaps essentially impossible.

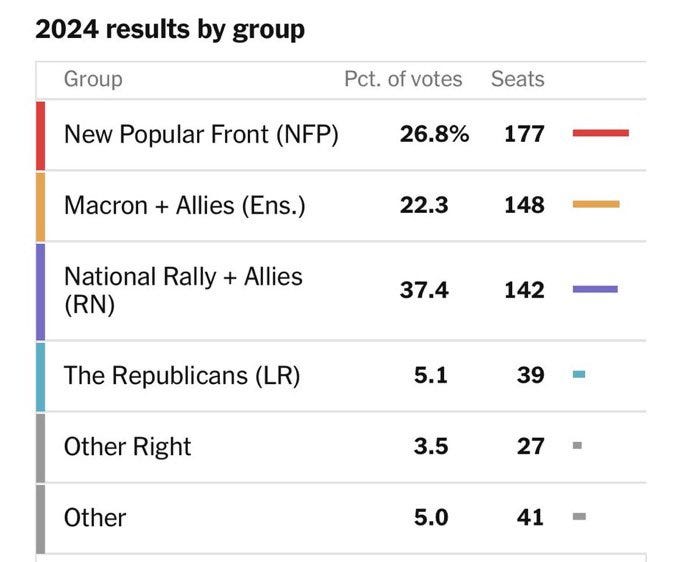

French elections produce unexpected, seemingly disproportionate results.

It is not as bad as it looks. NFP and Macron essentially (as I understand it) operated as one bloc, with whoever was behind dropping out in each local election, so effectively this is more like a party with 49.1% of the vote getting 325 seats to RN’s 37.4% and 142.

Claude estimates that if a similar result happened in America, the House would break down about 265-170, but our system is highly gerrymandered and the parties are geographically isolated. I don’t think 325-142 is that extreme here.

If you combined RN+LR+’Other Right’ then you would get 46% of the vote and only 208 seats, despite a gap of just 3.1 points, which seems extreme. LR and Other Right did well in converting votes to seats in the second round, so they were likely not being dramatically unstrategic.
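To put the disproportionality in numbers, here is a short sketch converting the seat counts above into seat shares and seats-to-votes ratios. It uses only the vote shares and seat counts quoted in the text plus the National Assembly's 577 seats, and treats NFP and Macron's coalition as one bloc, per the reasoning above. The right-wing grouping deliberately overlaps with RN.

```python
SEATS = 577  # total seats in the French National Assembly


def seat_ratio(vote_share: float, seats: int, total: int = SEATS) -> float:
    """Seat share divided by vote share; above 1 means over-represented."""
    return (seats / total) / vote_share


# (vote share, seats won) as given in the text.
results = {
    "NFP + Macron": (0.491, 325),
    "RN": (0.374, 142),
    "RN + LR + Other Right": (0.46, 208),
}

for bloc, (votes, seats) in results.items():
    print(f"{bloc}: {seats / SEATS:.1%} of seats on {votes:.1%} of votes "
          f"(ratio {seat_ratio(votes, seats):.2f})")
```

The NFP and Macron bloc converts votes to seats at roughly 1.15 times proportionality, RN alone at about two thirds, and the combined right at under 0.8.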

Similarly to the English results, one must ask to what extent we want strategic voting and negotiating between parties to determine who gets to rule.

New York City sets minimum food delivery wage to $19.56, which in turn means intense competition for work preference during busy hours. It also means fees on every order, which many no doubt are responding to by not tipping. I strongly suspect most of this cancels out and the services are still totally worth it.

New York City gets trash cans. You thought the day would never come. So did I. Before unveiling them, New York did a $4 million McKinsey study ‘to see if trash cans work’ and that is not the first best solution but it sure is second best.

Enguerrand VII de Coucy: Oh my god New York City paid McKinsey $4,000,000 to do a study on if trash cans work.

rateek Joshi: Maybe the point was that the NYC govt wanted to tell its citizens “If you don’t start putting trash in trash bins, we’ll give more money to McKinsey.”

Enguerrand VII de Coucy: Honestly that’s a potent threat

Swann Marcus: In fairness, the end result of this McKinsey study was that New York started using trashcans. Most American cities would have spent $4 million on a trashcan study and then inexplicably never gotten trashcans.

Aaron Bergman: I am going to stake out my position as a trash can study defender. It probably makes sense to carefully study the effects of even a boring and intuitive policy change that affects ~10⁷ people

Mike Blume: It’s fun to rag on NYC for their incompetence in this area, but “where will the bins go” is an understudied problem on many American streets

Getting the details right here is very important. There are some cases where governments vastly overpay for stupid things, and I don’t think this is one of them.

In defense of the lost art of the filler episode. I strongly agree here. Not all shows should be 22 episodes a year, but many should be. It makes the highs mean more, and I love spending the extra time and taking things gradually.

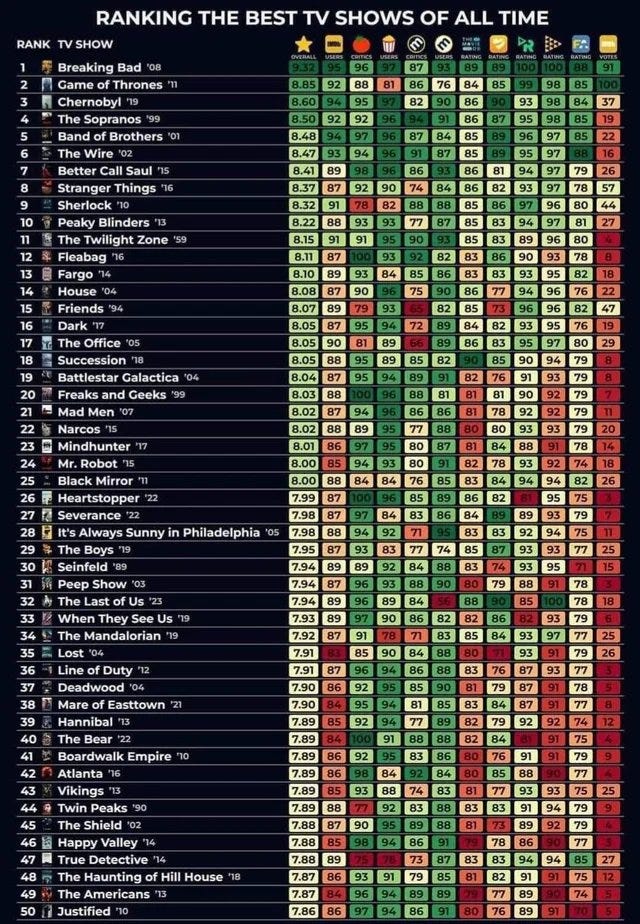

What do we make of this list and also the rating type breakdown?

The recency bias is strong. There are way too many 2010s shows here. I do think that there was a quality upgrade around the 90s but still.

The drama bias is also strong. Comedies are great and deserve more respect.

It’s hard to get a good read on the relative rating systems. It does seem like too much weight was put on the votes.

How many of these have I seen enough to judge?

There are a bunch of edge cases but I would say 20.

Correctly or Reasonably Rated: The Wire (my #1), Breaking Bad (my #3 drama), The Office, It’s Always Sunny in Philadelphia, Mr. Robot (I have it lower but I can’t argue), Severance (so far, it’s still early), Seinfeld (you somewhat had to be there), Freaks and Geeks (if you don’t hold brevity against it).

Underrated: The Americans (my #2 drama), Deadwood

Decent Pick But Overrated: Chernobyl (miniseries don’t count, others are missing if they do, and even if you discount that it’s good but not this good), Game of Thrones (great times and should make the list but you can’t put it at #2 after the last few seasons, come on), Stranger Things (Worth It but #8?!), Battlestar Galactica (this is a bit generous), The Shield (I can maybe see it), Lost (oh what could have been).

Bad Pick: Friends (better than its rep in my circles but not a best-of), House (it’s fine but not special), True Detective (one very good season but then unwatchable and no time is not a flat circle), Black Mirror (not half as clever as it thinks, despite some great episodes), The Mandalorian (I stuck with it long enough to know it isn’t top 50 level great and wasn’t working for me, although it isn’t actively bad or anything).

Most Importantly Missing (that I know of and would defend as objective, starting with the best three comedies then no order): Community, The Good Place, Coupling (UK) (if that counts), Watchmen (if we are allowing Chernobyl this is the best miniseries I know), Ally McBeal, Angel and Buffy the Vampire Slayer (no, seriously, a recent rewatch confirms), Gilmore Girls, Roseanne, Star Trek: DS9 (I see the counterarguments but they’re wrong), How I Met Your Mother.

I wonder if you should count Law & Order. You kind of should, and kind of shouldn’t.

The other ~30 here I haven’t given enough of a chance to definitively judge. Many I hadn’t even heard about.

Does anyone have a better list?

Of the ones I didn’t mention, I’m open to the case being made. For The Sopranos and Better Call Saul, I watched a few episodes and realized they were objectively very good but thought ‘I do not want to watch this.’ Or in particular, the show is great but I do not want to watch these people. A bunch of others here seem similar?

I can overcome that, but it is hard. Breaking Bad is not something I wanted to watch, in many important senses, but it was too good not to, and Walter White breaks bad but does not have that ‘I can’t even with this guy.’

Scott Sumner has his films of 2024 Q2. He put Challengers at only 2.6/4, whereas I have Challengers at 4.5/5, which provides insight into what he cares about. From the description he was clearly on tilt that day. Also I strongly suspect he simply does not get the characters involved and, finding them unlikeable, did not seek to get them. It is the first time I’ve seen his rating and said not ‘you rated this differently than I would because we measure different things’ but rather ‘no, you are wrong.’

My movie log and reviews continue to be at Letterboxd. I’ve moved more towards movies over television and haven’t started a new TV series in months.

The official EA song should be: Okay, full disclosure. We’re not that great. But nevertheless, you suck.

Economeager: As you know i do not identify with EAs as a culture despite my great support for givewell, open phil, etc. However when I meet someone who gives misguided and ineffective charity for purely emotional reasons I do have like a palpatine kermit moment with myself.

Never mind I saw the EA guys getting hyped to think about how “the economy” will work “after AGI” and hate everyone equally again.

Andy Masley: I was on the fence about getting more involved in EA a few years ago and then in my old job was exposed to a charity where people read stories over Zoom to dogs.

When given $10,000 to spend however they wanted, people spent the majority of it on pro-social things that benefited others, and almost 17% went to charities outright. This seems like a missed opportunity to provide more details about what types of things the money was spent on; we can study multiple things at once. Public posting of spending choices on Twitter had little impact on distribution of purchases.

I didn’t get a chance to pre-register my expectations here, nor do I have a good sense of exactly what counts as ‘pro social’ versus not. The idea that people, when given a windfall, spread it around somewhat generously, seems obvious. Windfalls are considered by most people as distinct from non-windfall decisions, the money is ‘not yours’ or not part of your typical planning, and is often largely wasted or bestowed generously, in a way that ‘core’ income is not. It is an opportunity to affirm your bonds to the community and good character and not present a target, and the money fails to ‘feel real.’ I do find it strange that public info did not at all impact decisions, which makes me suspect that such decisions were treated as effectively equally public either way in practice.

Johns Hopkins Medical School goes tuition-free for medical students due to massive grant, also expands aid for future nurses and public health pioneers. Nikhil Krishnan speculates that more places will end up doing this, and correctly notices this is not actually good.

The choke point is residency slots. It would not be my first pick for charity dollars, but I think that ‘give money to endow additional residency slots at hospitals that agree to play ball’ would be a highly understandable choice. Whereas ‘make future doctors that will mostly earn a lot of money have less student debt’ does not make sense. Yes, you can potentially improve applicant quality a bit, but not much. Whatever your goal, unless it is ‘glory to this particular program,’ you can do it better.

You can use 1Password to populate environment variables in CLI scripts, so you can keep your API keys in your password manager. There is also a fly.io plugin.
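A minimal sketch of the script side, assuming the 1Password CLI (`op`, version 2) is installed: you put a secret reference of the form `op://<vault>/<item>/<field>` in an env file, and `op run --env-file=.env -- python script.py` resolves it into an ordinary environment variable before the script starts. The variable name `EXAMPLE_API_KEY` is hypothetical. The script only reads the environment and fails loudly if the key is missing, so no secret ever lives in the code.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value, or raise if it is unset or empty.

    When launched via `op run`, the op:// reference has already been replaced by the secret.
    """
    value = os.environ.get(name, "")
    if not value:
        raise RuntimeError(f"{name} is not set; launch with `op run --env-file=... -- python ...`")
    return value


if __name__ == "__main__":
    try:
        key = require_env("EXAMPLE_API_KEY")  # hypothetical variable name
        print(f"Loaded a key of length {len(key)}")  # never print the key itself
    except RuntimeError as err:
        print(err)
```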

Arnold Ventures is hiring for its infrastructure team.

How to write for Works in Progress.

Pick your neighborhood carefully, not only your city.

Phil: So, the first thing I think of is that you’re going to spend 1000x more time in your surrounding 5 blocks than you will in any other neighborhood in your city. And so thinking about all the things that New York City or next city has, is to me a lot less important than thinking about the things within the five blocks where you live. Most neighborhoods in your city you might never step foot in, they might as well be in the other side of the country. But the things in your immediate vicinity are the things that are going to dominate your life. So picking and influencing your neighborhood is really important. And the two big ways you can influence your neighborhood are one, determining who lives in your neighborhood by moving people there, something I am very biased on because I work on it. And two, improving your neighborhood.

As a New Yorker, I definitely will walk more than five blocks more than 5% of the time. For example, my favorite most frequented restaurant is 7 blocks away. The point very much still stands. My friend Seth uses the rule of thumb that value is proportional to the inverse square of travel time, which again goes too far but is directionally right.
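Seth's rule of thumb is easy to play with. A sketch of value proportional to 1/t², assuming (hypothetically) that travel time scales linearly with blocks walked:

```python
def relative_value(travel_time: float, reference_time: float) -> float:
    """Value of a destination relative to an equivalent one at reference_time, under value ∝ 1 / time²."""
    return (reference_time / travel_time) ** 2


# Assumes travel time is proportional to blocks walked (a hypothetical simplification).
for blocks in (5, 7, 10, 20):
    print(blocks, "blocks:", round(relative_value(blocks, 5), 2))
```

Under this rule a restaurant 7 blocks away is worth only about half (25/49) of an equivalent one 5 blocks away, and one 10 blocks away a quarter, which shows both why the rule is directionally right and where it goes too far.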

Concert goers who consumed more alcohol were less likely to choose pro-social options in experimental economic games. Does not seem to distinguish between cooperators being more sober, versus sobriety leading to cooperation. Both seem plausible. One more reason not to drink.

Little effect of siblings on attitudes towards inequality is found. This study says more about current academic pressures and biases than about anything else.

Paper says that despite the narrative of democratic backsliding, objective measures such as electoral competitiveness, executive constraints and media freedom show no such evidence of (net) backsliding.

Those with higher IQ scores shoot firearms more accurately. I did not expect that. The real intelligence is never needing to shoot and never getting shot. I bet those correlate too.

Your enemies probably have more enemies than you do. Unfortunately, on the same principle, you probably have fewer friends than your friends.
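This is the friendship paradox. If you pick a random friendship and look at the person on one end, you are more likely to land on someone with many connections, so the average friend has at least as many friends as the average person. The same math applies to enemies. A sketch on a small, hypothetical five-person network:

```python
from collections import defaultdict

# Hypothetical friendship network: person 0 is popular, 3 and 4 know only 0.
edges = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2)]

degree = defaultdict(int)
for a, b in edges:
    degree[a] += 1
    degree[b] += 1

mean_degree = sum(degree.values()) / len(degree)
# Averaging over every end of every friendship weights each person by their friend
# count, giving sum(d^2) / sum(d), which is never below the plain mean degree.
mean_friend_degree = sum(d * d for d in degree.values()) / sum(degree.values())

print("average number of friends:", mean_degree)
print("average number of friends a friend has:", mean_friend_degree)
```

Here the average person has 2 friends while the average friend has 2.6, and four of the five people have fewer friends than their friends do on average; only the hub does not.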

Shoutout to my former teammate and coworker Kai Budde, the German Juggernaut who never loses on Sundays. He’s an all-around amazing guy and one of the best teammates you will ever know. I mention this because unfortunately Kai has terminal cancer. They have renamed the Player of the Year trophy in Kai’s honor.

He at least got a chance to play the PT recently in Amsterdam, with all the associated great times.

Then it was a Sunday, so of course Kai Budde won the PTQ.

Even with my qualification slots, I’m well past the point I can take this kind of time off to properly prepare, and even if I could I can’t put up the stamina for a three day fight, or even a two day fight. But man I miss the good times.

Moxfield lets you do this:

Lupe: I used to be in on the bling until we hit a weird critical capacity of too much. I’m now slowly putting a filter of “first printing” on all of the cards in my main Cube. Magic cards are kind of like hieroglyphs, so as a designer, I want to maximize tabletop legibility.

Brian Kowal: This is The Way.

Magical Hacker: I didn’t know you could do this until I saw this post, & now I need to share what I picked: f:c game:paper lang:en -e:plst (frame: 2015 -is:borderless (is:booster or st:commander) -is:textless -is:ub -is:etched or -is:reprint or e:phpr) (-e:sld or e:sld -is:reprint) prefer:newest

I cannot emphasize enough how much I agree with Lupe. Some amount of bling is cool. At this point we have way, way too much bling. There are too many cards, and also too many versions of each card, too many of which are not legible if you do not already know them on sight. I do want to stay in touch with the game, but it seems impossible.

The value of chess squares, as measured by locations of pawns, bishops and knights. A fun exercise that I do not expect to offer players much insight. Pawn structure seems strangely neglected in their analysis.

John Carmack points out that a key reason the Xbox (and I would add the PlayStation) never caught on as entertainment centers is that their controllers require non-trivial power to operate, so they go to sleep after periods of inaction and require frequent charging. If we could solve that problem, I would happily use the PlayStation as a media center, the interface is otherwise quite good.

Surely we can get a solution for this? Why can’t we have a remote that functions both ways, perhaps with a toggle to switch between them? Maybe add some additional buttons designed to work better as part of a normal remote?

Matthew Yglesias makes a case that high-pressure youth sports is bad for America. Sports played casually with your friends are great. Instead, we feel pressure to do these expensive, time consuming, high pressure formalized activities that are not fun, or we worry we will be left behind. That cuts out a lot of kids, is highly taxing on parents and damages communities. And yes, I agree that this trend is terrible for all these reasons. Kids should mostly be playing casually, having fun, not trying to make peak performance happen.

Where we differ is Yglesias thinks this comes from fear of being left behind. There is some of that but I am guessing the main driver is fear of letting kids play unsupervised or do anything unstructured. The reason we choose formal sports over the sandlot is that the sandlot gets you a call to child services. Or, even if it doesn’t, you worry that it would.

Hockey got one thing very right.

Scott Simon: In prep for, tonight, watching my first hockey game in… a decade?… I just learned that challenges in the NHL come with real stakes—if you’re wrong, your team is assessed a penalty. Now *that* is a challenge system. (Still, robot refs now.)

My first choice is no challenges. Barring that, make them expensive.
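Why a penalty makes challenges expensive: a team should challenge only when the expected gain beats the expected cost, so the penalty sets a minimum confidence. A sketch of that break-even probability; the values (in hypothetical "goal-equivalent" units) are invented, not NHL data.

```python
def break_even_probability(gain: float, penalty: float) -> float:
    """Minimum chance of being right for a challenge to have positive expected value.

    Challenge iff p * gain > (1 - p) * penalty, i.e. p > penalty / (gain + penalty).
    """
    return penalty / (gain + penalty)


# Hypothetical: winning the challenge is worth 1.0; try a few failure costs.
for penalty in (0.0, 0.2, 0.5):
    print(penalty, f"{break_even_probability(gain=1.0, penalty=penalty):.0%}")
```

With no penalty any nonzero chance of being right justifies a challenge, which is why free challenges invite long shots; each increase in the penalty raises the bar.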

Tyler Cowen links to a paper by Christian Deutscher, Lena Neuberg, and Stefan Thiem on Shadow Effects of Tennis Superstars. They find that when the next round in a second-tier tournament would be against one of the top four superstars, other players in the top 20 over the period 2004-2019 would advance substantially less often than you would otherwise expect.

The more the superstars go away, the more the other top competitors smell blood and double down; the effect size is 8.3 percentage points, which is pretty large. Part of that might come from the opposite effect as well: if I was not a top player I might very much want the honor of playing against Federer or Nadal. Mostly I am presuming this effect is real. Tennis is a tough sport and you can’t play your full-on A-game every time, especially if slightly hurt. You have to pick your battles.

Analysis of the new NFL kickoff rules, similar to the XFL rules. I realize the injury rate on kickoffs was too high, and seeing how this plays out should be fun, but these new rules seem crazy complicated and ham-fisted. At some point we need to ask whether we need a kickoff at all. What if we simply started with something like a 4th and 15 and let it be a punt, or you could go for it if you wanted?

College football seems ready to determine home teams in the new playoff based on factors like ‘hotel room availability,’ ‘ticket sales’ and weather? Wtf? Oh no indeed.

Mitchell Wesson: Schools can absolutely control the quality and quantity of nearby hotel rooms.

Weather, obviously not but it doesn’t seem reasonable to ignore it either. Wouldn’t be fair to fans or teams if a game has to be delayed when that could otherwise have been avoided.

If someone gets to host, there needs to be only one consideration in who hosts a playoff game. That is which team earned a higher seed (however you determine that) and deserves home field advantage. That is it. If the committee actually ever gives home field to the other team, even once, for any other reason (other than weather so extreme you outright couldn’t play the game), the whole system is rendered completely illegitimate. Period.

Waymo now open to everyone in San Francisco.

Sholto Douglas: Three telling anecdotes

> I felt safer cycling next to a Waymo than a human the other day (the first time I’ve had more ‘trust’ in an AI than a human)

> the default verb/primary app has changed from Uber to Waymo amongst my friends

> when you ride one, try to beat it at picking up on noticing people before they appear in the map, you ~won’t

They’re amazing. Can’t wait for them to scale globally.

Matt Yglesias asks what we even mean by Neoliberalism, why everyone uses it as a boogeyman, and whether we actually tried it. Conclusions correctly seem to be ‘the intention was actually letting people do things but it gets used to describe anything permitting or doing something one doesn’t like,’ ‘because people want to propose bad policies telling people what to do without facing consequences’ and ‘no.’

Certainly all claims that the era of big government was ever over, or that we suddenly stopped telling people what they were allowed to do, or that we pursued anything that was at all related to ‘growth at all costs’ are absurd, although we did make some progress on price controls (there are fewer, although still far too many).

Nick proposes that for less than $1 million a year you could easily have the coolest and highest status cafe in San Francisco, attracting immense talent and becoming a cultural touchstone with lots of leverage, creating tons of real estate value and actual value, plus other neat stuff like that. It seems many engineers put super high value on the right cafe vibe, on the level of ‘buy a house nearby.’ I don’t get it, but I don’t have to. Nick proposes finding a rich patron or a company that wants it nearby. That could work.

In general, this is part of the pattern where nice places to be add tons of value, but people are unwilling to pay for them. You can provide $50/visit in value, but if you charge $10/table or $10/coffee, people decide that kills the vibe.

Which would you value most as a potential superhero: mind control, flight, teleportation or super strength? On the survey the answer was teleportation.

The correct response, of course, is to have so many questions. Details matter.

Teleportation is a very extreme case of Required Secondary Powers. How do you ensure you do not teleport into a wall or the air or space? How do you deal with displacement? How often can you do it? Where can you go and not go? And so on.

There are versions of teleportation I’ve seen (including in some versions of AD&D) where I would not pay much for them, because you are so likely to get yourself killed you would only do it in a true emergency. Then there are others that are absurdly valuable.

Flight is the lightweight version of the same problem. If you take it to mean the intuitive ‘thing that Superman or Wonder Woman can do in movies’ then yeah, pretty great assuming people don’t respond by trying to put you in a lab, and I’d pay a lot.

Super strength is a nice to have at ‘normal’ levels. At extreme levels it gets a lot more interesting as you start violating the laws of physics or enabling new engineering projects, especially if you have various secondary powers.

Mind control is on an entirely different level. Sometimes it is a relatively weak power, sometimes it enables easy world domination. There you have to ask, as one of your first questions, does anyone else get mind control powers too? This is like the question of AI, with similarly nonsensical scenarios being the default. If the people with true mind control powers used them properly there would usually be no movie. If others get ‘for real’ versions of mind control, and you take super strength or flight, do you even matter? If so, what is your plan? And so on.

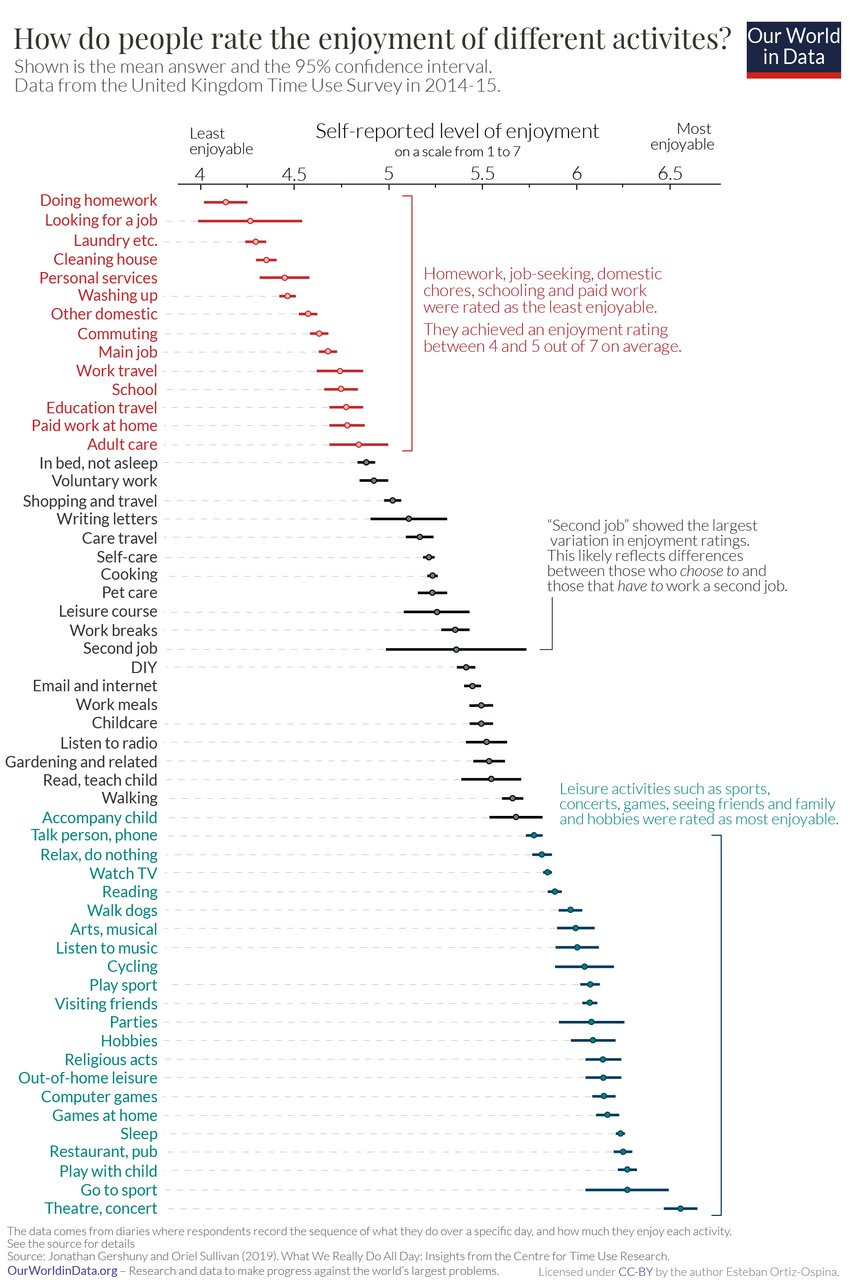

What activities do people enjoy or not enjoy?

Rob Wiblin [list edited for what I found interesting]:

- ‘Computer games’ are among the most enjoyable activities, probably deserve more respect. It clearly beats ‘watching TV’. ‘Games at home’ sounds cheap and accessible and scores high — I guess that’s mostly card or board games.
- Highly social activities are more work and money to set up but still come in highest of all: ‘restaurant / pub’, ‘go to sport’, and ‘theatre / concert’. ‘Parties’ comes in behind those.
- ‘Play with child’ was among the most enjoyable of any activity. Many folks who choose not to have kids probably underrate that pleasure. Pulling in the other direction ‘Childcare’ falls in the middle of the pack, though it’s more popular by a mile than school, housework, or paid work. No surprise some people opt out of the workforce to raise a family!
- ‘Homework’ came dead last, much less popular than even ‘School’. Counts in favour of reducing it where it’s not generating some big academic benefit.
- ‘Email and internet’ — the activity that eats ever more of our days — is right in the middle. Conventional wisdom is you want to substitute it for true leisure and the numbers here clearly back that up.
- There’s some preference for active over passive leisure — TV, reading, doing nothing and radio are all mediocre by the standards of recreation. I’m surprised reading and watching TV are right next to one another (I would have expected reading to score higher).
- People sure hate looking for a job.
- I’ve seen some debate about how much people like or dislike their jobs. Work and school are definitely much less enjoyable than activities where people are more likely to be freely determining for themselves what they’re doing. But they still manage a 4.7 out of 7. It could be much worse (and in the past probably was). Commuting is unpopular but not at the very bottom like I’d heard.

Gaming and sports for the win. Going to the game is second only to concerts, and I strongly agree most of us are not going to enough of either. Weird that going to the movies is not here, I’d be curious how high it goes. And yes, playing board games at home is overpowered as a fun activity if you can make it happen.

Homework being this bad is not a surprise, but it needs emphasis. If everyone understood that it was less fun than looking for a job or doing the laundry, perhaps they would begin to understand.

Reading I am guessing scores relatively low because people feel obligated to read. Whereas those who choose to read for relaxation on average like it a lot more.

Why Do Companies Go Woke? Middle managers, so a result of moral maze dynamics, which includes a lack of any tether to or caring about physical reality. Makes sense.

The absurdity of the claims in Graeber’s Bullshit Jobs.

Ross Rheingans-Yoo notes that ‘hold right mouse button and then gesture’ is a technique he and others often use playing the game Dota because it is highly efficient, yet only when Parity suggested it did it occur to him to use it for normal text editing. My initial reaction was skepticism but it’s growing on me, and I’m excited to try it once someone implements it especially if you can customize the options.
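The core of a hold-right-button gesture system is small: record pointer positions while the button is held, quantize each movement into a direction, collapse repeats, and look the resulting string up in a command table. A sketch follows; the pixel threshold and the gesture-to-command mapping are hypothetical and not taken from Dota or any existing editor.

```python
from typing import Optional

# Hypothetical mapping from direction strings to text-editing commands.
COMMANDS = {
    "L": "undo",
    "R": "redo",
    "D": "delete line",
    "UD": "select paragraph",
    "DR": "save",
}


def stroke_to_directions(points: list[tuple[float, float]], min_step: float = 20.0) -> str:
    """Quantize a stroke (screen coordinates, y grows downward) into U/D/L/R moves.

    Moves shorter than min_step pixels accumulate until long enough, and consecutive
    repeats are collapsed so "RRR" becomes "R".
    """
    if not points:
        return ""
    directions: list[str] = []
    anchor_x, anchor_y = points[0]
    for x, y in points[1:]:
        dx, dy = x - anchor_x, y - anchor_y
        if max(abs(dx), abs(dy)) < min_step:
            continue  # too small to count yet; keep measuring from the same anchor
        if abs(dx) >= abs(dy):
            step = "R" if dx > 0 else "L"
        else:
            step = "D" if dy > 0 else "U"
        if not directions or directions[-1] != step:
            directions.append(step)
        anchor_x, anchor_y = x, y
    return "".join(directions)


def recognize(points: list[tuple[float, float]]) -> Optional[str]:
    """Return the command for a completed gesture, or None if it is unrecognized."""
    return COMMANDS.get(stroke_to_directions(points))


# A stroke that goes down, then right, then the right button is released.
print(recognize([(0, 0), (0, 30), (0, 60), (40, 60), (80, 62)]))
```

Customizing the options, as hoped for above, would amount to letting users edit the command table.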

Making dumb mistakes is fine. Systems predictably making particular dumb mistakes is also fine. Even bias can be fine.

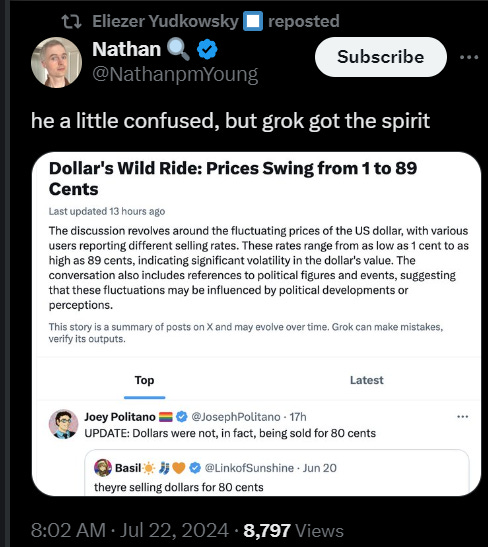

This was a serious miss, but it is like AI – if you only look for where the output is dumb, you will miss the point.

Keep trying, and you’ll figure it out eventually.

(For those who don’t know, this was about prediction markets on the Democratic presidential nomination.)