Pebble’s founder wants to relaunch the e-paper smartwatch for its fans

With that code, Migicovsky can address the second reason for a new Pebble—nothing has really replaced the original. On his blog, Migicovsky defines the core of Pebble’s appeal: always-on screen; long battery life; a “simple and beautiful user experience” focused on useful essentials; physical buttons; and “Hackable,” including custom watchfaces.

Migicovsky writes that a small team is tackling the hardware aspect, making a watch that runs PebbleOS and “basically has the same specs and features as Pebble” but with “fun new stuff as well.” Crucially, they’re taking a different path than the original Pebble company:

“This time round, we’re keeping things simple. Lessons were learned last time! I’m building a small, narrowly focused company to make these watches. I don’t envision raising money from investors, or hiring a big team. The emphasis is on sustainability. I want to keep making cool gadgets and keep Pebble going long into the future.”

Still not an Apple Watch, by design

The Pebble 2 HR, the last Pebble widely shipped. Credit: Valentina Palladino

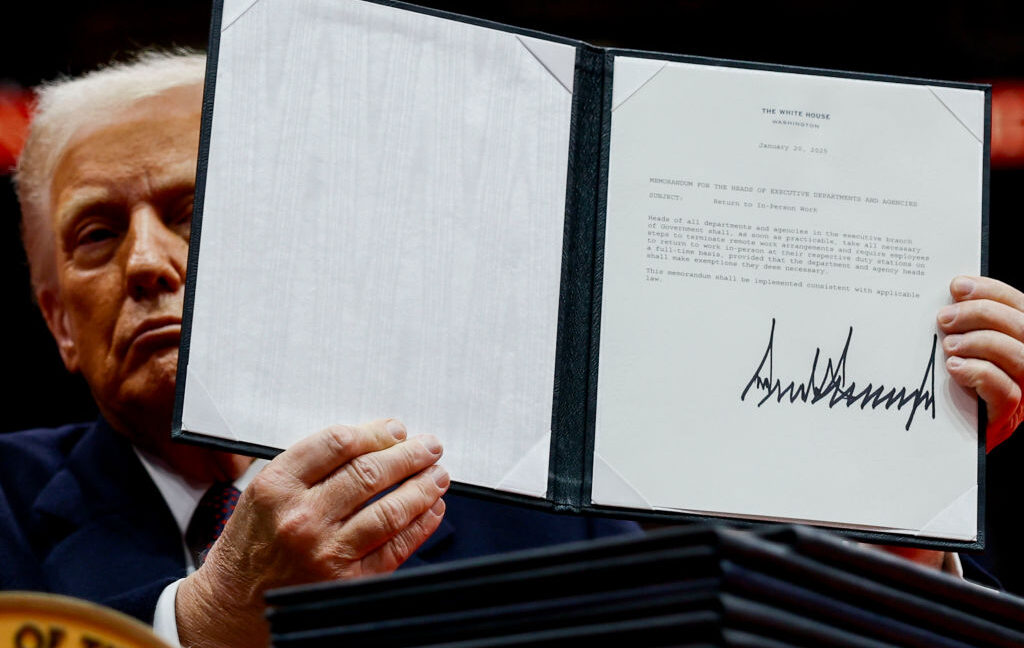

Ars asked Migicovsky by email if modern-day Pebbles would have better interoperability with Apple’s iPhones than the original models. “No, even less now!” Migicovsky replied, pointing to the Department of Justice’s lawsuit against Apple in 2024. That lawsuit claims that Apple “limited the functionality of third-party smartwatches” to keep people using Apple Watches and, as a result, make them less likely to switch away from iPhones.

“Apple has limited the functionality of third-party smartwatches so that users who purchase the Apple Watch face substantial out-of-pocket costs if they do not keep buying iPhones.”

The core functionality Migicovsky detailed, he wrote, was still possible on iOS. Certain advanced features, like replying to notifications with voice dictation, may be limited to Android phones.

Migicovsky’s site and blog do not set a timeline for new hardware. His last major project, the multi-protocol chat app Beeper, was sold to WordPress.com owner Automattic in April 2024, following a protracted battle with Apple over access to its iMessage protocol.
