This seemed like a good next topic to spin off from monthlies and make into its own occasional series. There’s certainly a lot to discuss regarding crime.

What I don’t include here, just as I excluded it from the monthly, are the various crimes and other related activities that may or may not be taking place within the Trump administration or among its allies. As I’ve said elsewhere, all of that is important, but I’ve made a decision not to cover it. This is about Ordinary Decent Crime.

- Perception Versus Reality.
- The Case Violent Crime is Up Actually.
- Threats of Punishment.
- Property Crime Enforcement is Broken.
- The Problem of Disorder.
- Extreme Speeding as Disorder.
- The Fall of Extralegal and Illegible Enforcement.
- In America You Can Usually Just Keep Their Money.
- Police.
- Probation.
- Genetic Databases.
- Marijuana.
- The Economics of Fentanyl.
- Enforcement and the Lack Thereof.
- Jails.
- Criminals.
- Causes of Crime.
- Causes of Violence.
- Homelessness.
- Yay Trivial Inconveniences.
- San Francisco.
- Closing Down San Francisco.
- A San Francisco Dispute.
- Cleaning Up San Francisco.
- Portland.
- Those Who Do Not Help Themselves.
- Solving for the Equilibrium (1).
- Solving for the Equilibrium (2).
- Lead.
- Law & Order.
- Look Out.

A lot of the impact of crime is based on the perception of crime.

The perception of crime is what drives personal and political reactions.

When people believe crime is high and they are in danger, they dramatically adjust how they live and perceive their lives. They also demand a political response.

Thus, it is important to notice, and fix, when impressions of crime are distorted.

And also to notice when people’s reactions are distorted. They have a mental idea that ‘crime is up’ and react to it in narrow, non-sensible ways, but fail to react in other ways.

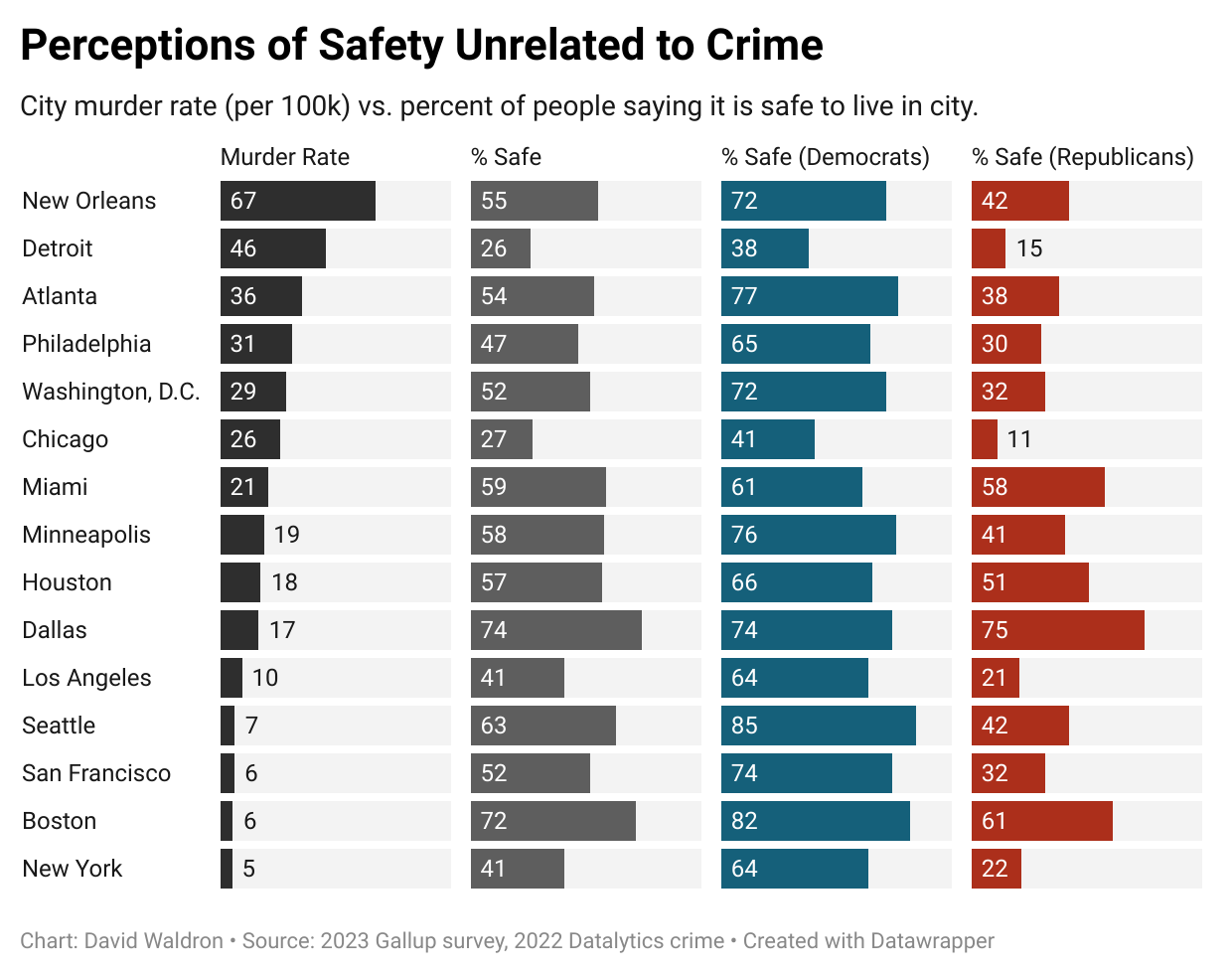

One thing that it turns out has very little to do with perceptions of crime is the murder rate, which in most places has gone dramatically down.

Hunter: Americans have a very distorted perception of how safe a city is.

If you go purely by state, then Washington DC (if it counts) is at the top, but 9 of the next 10 are red states. And of the 100 biggest cities, 5 of the 6 safest are in California.

Of course, there is a lot more to being safe than not being murdered. San Francisco has a very low murder rate, but what worries people are other crimes, especially property crime – things are bad enough there, and enough people in my circles pay attention to it, that it gets its own section. It’s definitely not ‘down at 6’ on the chart.

The thing is, property crime and other Ordinary Decent Crime went dramatically down too, in many ways, especially in these very blue areas. Crime used to be a huge portion of our cognitive focus in major cities.

You who carry a $1k iPhone around in your hand do not remember how insane that would have been when I was growing up in New York City. As Denis Leary used to put it, have you ever played ‘good block, bad block’? And we took levels of risk, and perception of risk, unimaginable today, both for children and adults. I was warned not to have a hundred bucks in my wallet, and not to walk along Amsterdam Avenue, and I took the ‘safer’ last two block route back to our apartment on the Upper West Side.

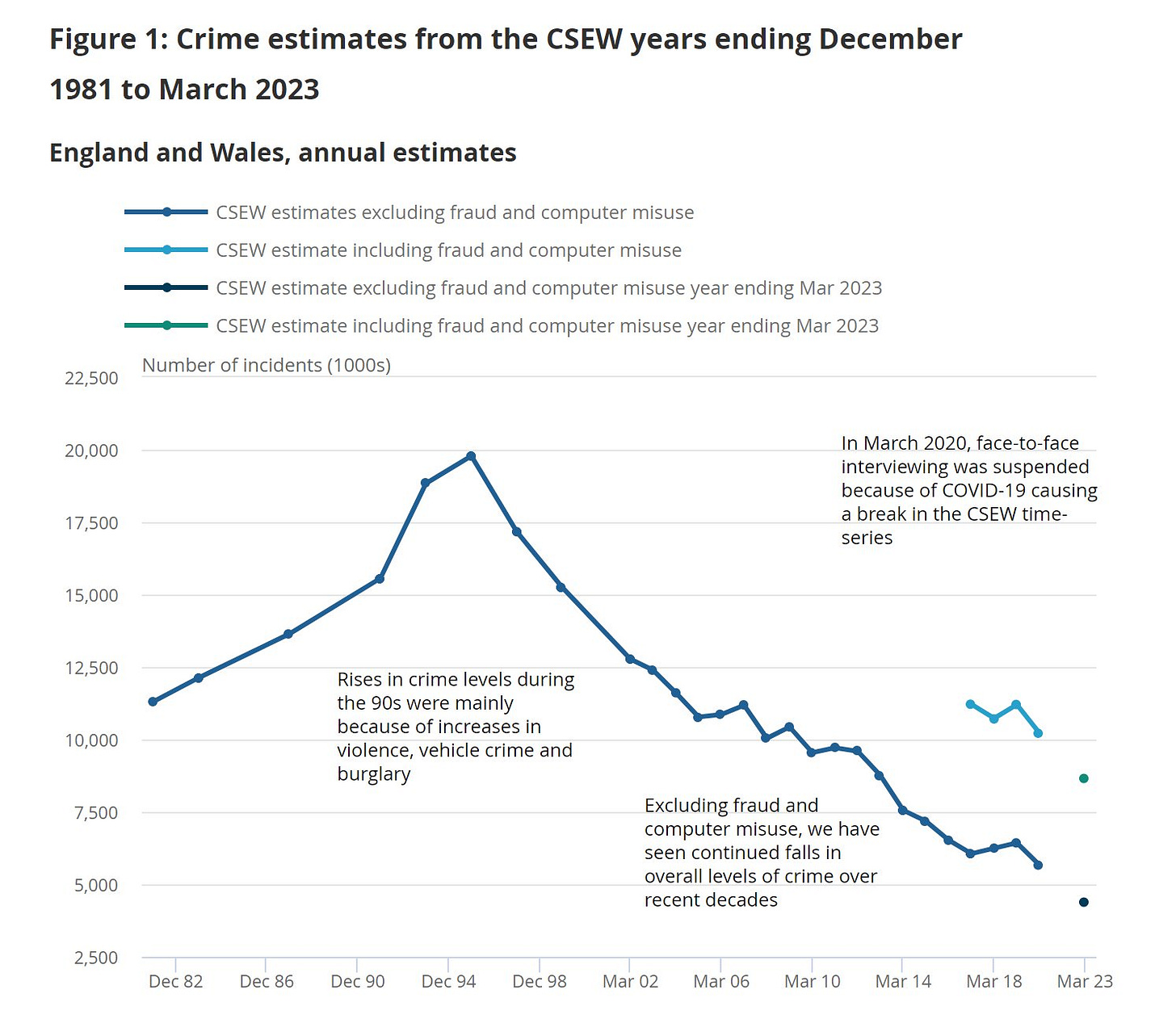

Here’s some English data to go with the USA data.

Stefan Schubert: In contrast to some people’s perception, crime has fallen dramatically in England and Wales over time.

Thanks to @s8mb and @shashj for highlighting this important and encouraging fact.

Violent crime and physical assault have gone from being like car crashes, which are an actual danger you have to constantly worry about and that happen all the time (although with a lot fewer fatalities than they used to!), to something halfway to airplane crashes. A violent crime is often now news. Thefts can be news.

That’s not to say that in particular places property crime hasn’t been allowed to spiral out of control, especially in particular ways.

We forget how blessed we are. And it is very important to hold onto that. Trust is fraying in other ways, but that feeling of living in Batman’s Gotham, with violence around every corner even if you didn’t choose to get involved? Yeah, that’s gone. The whole trope of ‘vigilante hero fights crime in the streets’ feels like an anachronism.

Aporia magazine makes the case that crime rates are much higher than in the past, because health care improvements have greatly reduced the number of murders, and once you correct for that murder is sky high. It is an interesting argument, and certainly some adjustment is needed, but in terms of magnitude I flat out do not buy it in light of my lived experience, as discussed elsewhere.

The other half of the post is that our criminal justice system is no longer effective, due to rules that now prohibit us from locking the real and obvious repeat criminals up, and those criminals do most of the crime and are the reason the system is overloaded. The obvious response is, we have this huge prison population, we sure are locking up someone. And yet, yes, we do seem capable of identifying who is doing all the crime, including the repeated petty crime, yet incapable of then locking them up. That does seem eminently fixable.

George Carlin had a routine where everyone would get 12 chances to clean up their drug habits before being exiled (to Colorado, of course) and it seems like we could figure something out here, even if we think three strikes are insufficient?

Our legal system depends on threatening people with big punishments, because standard procedure is severely downgrading all punishments.

So when you try to make shoplifting a felony punishable by three years in jail, what you’re actually doing is allowing that to be bargained down, and ensuring the punishment is ‘anything at all’ rather than cops arresting you on a misdemeanor only to have you released with a stern talking to, which means cops won’t even bother.

Obviously it would be better to actually enforce laws and punishments, but while we’re not doing that, we need to understand how laws work in practice.

We used to basically be okay with using this to give police and prosecutors leverage and discretion, and to allow enforcement in cases like ‘we know this person keeps stealing stuff’ without having to prove each incident. Now, we’re not, but we don’t know what the replacement would be.

The most obvious types of crime that are up are organized property crimes.

Why?

I see two central highly related reasons.

- Our enforcement system is broken. That’s this section.
- We increasingly tolerate disorder. That’s the following section.

Problem one is largely caused by problem two, but let’s talk effects first, then causes.

Essentially, in terms of my four categories of legality, we have moved much of property crime from its rightful place in Category 3 – where it is actively illegal and risks real consequences but we don’t move mountains to catch you – into at least Category 2, where it is annoying and discouraged but super doable with no real consequences. And in many cases, it is now effectively Category 1, where it might as well be thought of as fully legal without frictions.

If you are an upstanding citizen and then decide to do a little property crime, as a treat, you risk your reputation, various unpleasant reactions, and getting an arrest record and dealing with the courts, and you don’t know how likely those outcomes are. Security can and will sometimes catch you, you have things to lose, and so on. So the deterrence works okay there.

If you do a lot of property crime, however, you quickly learn that most of the risks and costs are kind of fixed, and often non-existent. Security guards and employees mostly won’t take any risk to interfere, police usually won’t be called.

Even when police catch you, even repeatedly, they mostly let you go, often even after being caught an absurd number of times. The old ‘quietly use or threaten violence or to otherwise mess you up’ solution has also been taken largely off the table, for better and for worse.

In each individual case, this is wise in terms of outcomes. But if you do it too often, eventually the criminal element or those considering such actions notices, and the problem snowballs.

Dr. Lawrence Newport: Barrister gets burgled. Criminal leaves behind their OWN BAIL NOTICE – showing they had been released only HOURS previously.

No arrests. Nothing happens.

Of other crimes @paulpowlesland says “I didn’t even report them to police because what’s the point?”

We deserve better.

…

Izzy Lyons: New figures show that 38,030 residential burglaries were recorded by the Met in the year ending last March — 82.4 per cent of which were closed with no suspect identified. Only 3.2 per cent resulted in a charge or summons.

If even reported burglaries only result in a suspect at all 17.6% of the time, and only have a charge 3.2% of the time let alone a plea or conviction, and presumably most burglaries don’t even get reported, and you don’t even make a point to arrest someone when they leave behind their literal bail notice, then you’re saying burglary is de facto legal. You can just take stuff. That will only get worse over time.
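To make the arithmetic explicit, here is a rough sketch in Python. The recorded count and the two percentages come from the figures quoted above. The `report_rate` values are purely hypothetical placeholders, since the share of burglaries that actually get reported is not given here, and the function name is mine.

```python
# Figures quoted above (Met, year ending last March).
RECORDED = 38_030          # residential burglaries recorded
NO_SUSPECT_SHARE = 0.824   # closed with no suspect identified
CHARGE_SHARE = 0.032       # resulted in a charge or summons


def burglary_odds(report_rate: float) -> dict:
    """Rough per-burglary odds under an assumed reporting rate.

    report_rate is a hypothetical input (share of burglaries that are
    reported and recorded); it is not a figure from the source.
    """
    if not 0 < report_rate <= 1:
        raise ValueError("report_rate must be in (0, 1]")
    return {
        # Share of recorded burglaries where any suspect was identified.
        "suspect_per_recorded": 1 - NO_SUSPECT_SHARE,
        # Approximate number of recorded burglaries ending in a charge.
        "charged_cases": round(RECORDED * CHARGE_SHARE),
        # Chance of a charge per burglary committed, reported or not.
        "charge_per_actual_burglary": CHARGE_SHARE * report_rate,
    }


# 1.0 means every burglary is reported; 0.5 is illustrative only.
for rate in (1.0, 0.5):
    print(rate, burglary_odds(rate))
```

Whatever the true reporting rate is, it can only push the per-burglary chance of a charge below the 3.2% that applies to recorded cases.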

The same goes for things closer to fraud, such as organized cargo theft. Ryan Peterson reports that cargo theft has grown by more than 10% each month YoY since Q4 2021. This will continue until people start actually getting punished.

The latest incidents are in the Chicago area, where organized crime has run or taken control of motor carriers and uses them to enable cargo theft and demand de facto ransom payments. Ryan reports that Flexport has used a machine learning based fraud prevention technology (nicknamed ‘CURVE’) to spot and avoid such pitfalls. Essentially the criminals have learned to spoof commonly used indicators of trust, so the arms race is ongoing there same as everywhere else, and like many other places trust is declining.

The costs of property crime, in terms of countermeasures, can get very high. Putting products behind locked display cases is a rather large cost, given two in three shoppers mostly won’t buy such products. I will still buy medications or other urgently needed items through a display case in an emergency, but yeah if it’s not an emergency I’m going to pull out my phone and use Amazon, and next time try a different store. The whole procedure is too annoying and feels terrible.

Eventually, we’ll have to react to all this.

We’ll need to figure out how to enforce the law sufficiently that crime no longer pays, and it is at least somewhat obvious to the criminals that it no longer pays.

At minimum, we should track when we arrest or otherwise identify criminals, or there are otherwise clearly related incidents, and do escalating enforcement against repeat offenders. The stories of ‘this person got arrested 35 times for [X] and was released each time’ should never, ever happen, nor should the ‘50 people are doing a huge percentage of the crime in [NYC/SF/etc].’

As noted in an earlier section, this makes one wonder, who are all the criminals we are locking up, in that case?

Charles Lehman convincingly generalizes what is happening as a problem with disorder. We have successfully kept violent crime down, but we have seen a steady rise in disorder and in public tolerance for disorder, which people correctly dislike and then interpret as a rise in crime. Some of it very much is a rise in crime, such as retail theft forcing stores to lock up merchandise, while other disorderly actions are anti-social but not strictly criminal.

I would draw a distinction between disorder in the sense of ‘not ordered’ versus antisocial disorder that degrades the overall sense of order or harms non-participants in the activity. All hail Discordia, hail Eris, but hands off my stuff, you know?

Our academics have essentially delegitimatized disliking antisocial disorder and especially using authority to enforce against it, including against things like outright theft, and we’ve broken many of the social systems that enforced the relevant norms.

If you do not enforce rules against antisocial disorder, especially laws against outright theft, while enforcing rules against insufficiently obedient and rigid order, you are not doing people any favors. People realize these rules against disorder are not enforced, norms erode, and you get increasing amounts of disorder.

In particular, antisocial disorder becomes The Way to Do Things and get resources.

If you can sell drugs openly without restriction, or organize a theft ring at little risk, but opening a legitimate business takes years of struggle and expenses and occupational licensing issues and so on, guess what the non-rich aspiring businesspeople are increasingly going to do?

As I discussed in my post on sports gambling, we impose increasingly stringent regulations and restrictions on productive and ordered activity, while effectively tolerating destructive and disordered activity, including when we do and don’t enforce the relevant laws.

We need to reverse this. We should apply the ‘let them cook’ and ‘don’t use force to stop people who aren’t harming others’ principles to productive and ordered activity, rather than to unproductive and disordered activity. At minimum, we should beware and track the balance of inconveniences, and ensure that we are throwing up more and more expensive barriers to antisocial disordered activities, versus pro-social or ordered activities.

YIMBY for housing construction and doing business and working. NIMBY for noise complaints and shoplifting and cargo theft.

Is this causal in the sense of broken windows theory, or is it merely a symptom?

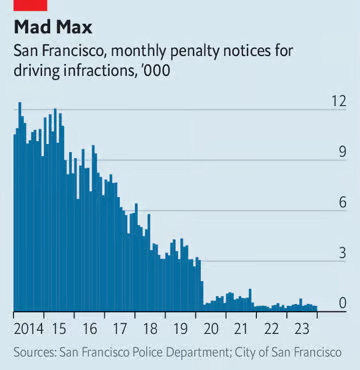

Rob Henderson: “Today, if you so choose, you can drive through red lights at high speed with impunity—police have almost completely stopped issuing traffic citations”

Seems true in the Bay Area generally. Last summer found you could drive 110mph on the 505; no penalty.

The only other place where I’ve been able to drive that fast with that level of impunity is the German autobahn.

wayne: “The police stopped writing tickets” is another one of those things that sounds like right-wing hyperbole, but, no — they literally stopped writing tickets

Kelsey Piper: I know people who’ve driven in Oakland and SF for months with expired registration (by accident) and were stopped the first time they drove their car out of Oakland or SF into a jurisdiction that enforces traffic laws.

My presumption is it is both symptom and cause when it gets this extreme.

In the Before Times, if you were up to no good as they saw it, and especially if you were committing crimes or interfering with the good citizens of the land, the law might notice, and in various ways make it clear you should stop.

This often worked, and gave police leverage, but was illegal, and led to terrible abuses.

So we decided that this was bad, actually, and moved progressively to cut off this sort of behavior.

The problem is that this puts the system in a tricky position. It has only so many resources, and it is expensive and time consuming to actually enforce laws by the book, and often you don’t have the proof or there are technicalities so you can’t do it.

We cannot go back, nor do we want to. But over time everyone is adjusting to and solving for the new equilibrium, and norms, habits and skills are also adjusting. The system was designed for one way and now it is the other way. A lot of what has been protecting us is inertia, and the instinct that there are forces waiting to keep things in line, and various norms that grew up over time.

That will, over time, continue to fade, if we don’t give it good reason not to. I worry about this. It is all the more reason that, now that we don’t have as much illegible enforcement, we need more legible enforcement, including for small offenses, for those things we want to actually prevent.

Story of a mostly homeless guy who scammed Isaac King out of $300. Isaac sued in small claims court on principle, did all the things, and none of it mattered.

Sarah Constantin: It really seems like “individuals using the court system to redress ordinary grievances” is no longer a realistic thing?

…and it seems pretty intuitive that the idea of “the People have legal rights against arbitrary monarchical power” would only arise in a culture where lots of people had the real-world experience that going to court was a practical way to reverse injustices (and get your $ back).

Oliver Habryka: Lightcone has filed like 8+ reports of people breaking into Lighthaven [which is in Berkeley] (especially early on before we upgraded our fences) and stealing stuff. A few of them lead to arrests, nothing ever had any real consequences on anyone beyond that.

My understanding is exactly Sarah’s. If someone simply keeps your money, or otherwise cheats you, what are you going to do about it? Even if you can prove it, it is definitely not worth going to court. Lawsuits are effectively nuclear weapons, the threat is far stronger than the execution unless scale is truly massive. Calling the cops for anything small is also not worth the effort and carries risk of backfire.

Personal example: Someone rich (or at least spending as if they were rich, could be broke, who knows) promised, in writing, as part of resolving the situation at a company, to pay me six figures. Then they didn’t pay, then once again in person affirmed this and promised to pay, then didn’t pay. What am I going to do, sue them? When they have a history of vindictive behavior? Money gone.

Mostly it works out fine. Credit cards are kind of an alternative to small claims court, and there are various reputational and other reasons that allow ordinary business to continue even if it is not in practice enforced by law. But also we all choose to avoid transactions that would depend on the threat of a small-stakes lawsuit.

If you’re wondering why we can’t go back? We’re not okay with things like this:

Son reports dad missing, police think son killed father, they psychologically torture him for 17 hours and threaten to kill his dog until he confesses. Father then found alive, son settles lawsuit for $900k in damages.

I wonder how much of this is ‘we can’t do other things we’d prefer to do so instead we do other things that are even worse.’

Here’s an actually great idea from a (now defeated) presidential candidate who is also a former prosecutor. You can guess why we haven’t done it already.

Idejder: HOLY SHIT.

Harris, on “Breakfast Club,” just said there should be a database for police officers so they cannot commit wrongdoing and get away with it by being transferred to other districts or departments to conceal it.

I have never heard a politician say that so clearly.

Police training in alternative cognitive explanations of various phenomena and behaviors reduced use of force, discretionary arrests and arrests of Black civilians in the field, and reduced injuries, while ‘activity’ was unchanged. Odd to say activity did not decrease if discretionary arrests decreased. That is at the core of police activity. If you are making fewer arrests, then it stands to reason you would also use less force. We would like to think the training made police make better decisions, but we cannot differentiate that from the possibility that police got the message to find reasons to make fewer arrests.

A working paper says that police lower quantity of arrests but increase arrest quality near the end of their shifts, when arrests would require overtime work, and that this effect is stronger when the officer works a second job. File under ‘yes obviously but it is good that someone did the work to prove it, and to learn the magnitude of the effect.’ One could perhaps use this to figure out what makes arrests high quality?

In an ACX comments highlight post linked earlier, Performative Bafflement suggests more than 80% of American police hours are wasted not solving any crime at all, because most hours are spent writing traffic tickets. Which would explain why the police are so bad at solving crimes. I mention it because Scott finds this plausible. But that number seems absurd. For example, Free Think here puts the share of time directly spent on stops at 15%, and even with the additional paperwork that doesn’t get to anything like 80%.

One should also note that the three hours a day on paperwork is quite a lot – that’s a huge tax on the ability to actually Do Things. Can AI plus body cameras help here? Seems like you should be able to cut that down a lot.

Here is the one and only Amanda Knox giving advice on how to deal with police.

If anything, this advice seems rather willing to cooperate with the police?

Amanda Knox: You should never be in a room with police for more than an hour. If they read you your Miranda rights, you’re a suspect. Shut it down. Demand a lawyer. This is just some of the advice I got from a retired FBI Special Agent, and two renowned false confessions experts.

After talking with half a dozen exonerees who’d been coerced into making false confessions, and interviewing the world’s leading experts, I wanted to know what advice they’d give. Here’s what they said…

FBI Agent Steve Moore (@Gman_Moore): If they ever make an accusation against you, you’re no longer a witness. You say, I’m leaving. Get a lawyer.

If they say you’re not allowed to leave, to see a lawyer, or talk to a parent or spouse, get a piece of paper and write that down, write down the time, and ask them to sign it. If they won’t sign it, fold it up in your pocket.

Make a record of everything you do. They’re taking notes; you take notes. If you’re innocent, you’ve got nothing to lose.

Don’t repeat your story. When they say, Tell us what happened, tell them what happened. When they say, OK, let’s start over. Let’s go back to that room. You say, Nope, already asked and answered.

What they’re trying to do is manipulate and confuse you. Then when your stories don’t match up perfectly, they’ll say, But you said you went through the front door at 11, and now you’re saying 10:30. What else could have you gotten wrong?

False confessions expert Steve Drizin (@SDrizin): Once they read you your Miranda warnings, you are a suspect. Do not sign a waiver to give them up. Those Miranda rights are precious. Don’t throw them away like garbage, no matter how much police try to get you to do that.

If you’re a witness, tell the truth and then shut up. When they start to try to change your truth to fit their truth, you shut it down. If they’re not accepting your truth, say, No more. I’m not going to talk until I have a lawyer.

False confessions expert Richard Leo: Insist on recording any statement. We all have smartphones. Once you start being accused, they’ve made up their mind and their goal is to get a confession.

Most people’s interactions with police are in automobiles. The police say, Do you know why I stopped you? It’s a trick question designed to get you to confess. And when the police stop you in your car, you’re not free to leave.

Remember, if you’re in custody and police seek to elicit incriminating statements, you’re entitled to Miranda warnings. But the Supreme Court, going back to the 80s, gaslighted Americans by saying that you’re not in custody when you’re pulled over.

So you’re not entitled to Miranda warnings. So the police just say, Do you know why I pulled you over? You might say, Well, maybe I was going 10 miles over the speed limit? You’ve just confessed. (Instead, say, I have no idea.)

Don’t ask police, Should I get a lawyer? Because they are your adversary if they are trying to get evidence against you of a crime and you may not even know it. Be instinctively distrustful. Be respectful, cooperate if it’s purely a witness role…

But once they start to accuse you or you feel accused, certainly if you’ve been read your Miranda warnings, there’s no percentage in talking to them. They are professional liars. They’re very good at it.

[thread continues, podcast link]

One can summarize that as ‘be respectful and comply with their physical demands, and if they treat you like a witness (and you didn’t do anything illegal) tell the truth, but if they ever accuse you of anything, try to get you to change your story, ask you to repeat yourself, or act like you are a suspect, get a lawyer, and never ever confess to anything, even speeding, and of course never sign away Miranda.’

That seems like the bare minimum amount of wariness?

This section of ACX’s comments post on prisons discussed probation.

According to several commentators, probation is essentially a trap, or a scam.

In theory, probation says that you get let go early, but if you screw up, you go back. Screwing up means violating basically any law, or being one minute late to a probation meeting, or losing your job (including because the probation officer changed your meeting to be when you had to work and got you fired), or any number of other rules.

One can imagine a few ways this could work, on a spectrum.

- This is leverage, to be used for your own good and for police business, but everyone wants you to succeed. If the police need your cooperation, they can threaten to send you back. If the police think someone or some activity is trouble, they can tell you to not associate with or do them. And so on. The rules have to mean something, but if you’re clearly trying to turn your life around then they’ll cut you some slack.
- This is a test. The system is neither your friend nor your enemy, and actions have consequences. And yes, if we are pretty sure you’re up to no good we’ll go looking for a technical reason to violate you, but otherwise good luck.
- This is a backdoor and a trap, and the system will be actively attempting to send you back to prison as soon as possible.

The reports are that the point of probation is the third thing, that probation officers will actively seek to violate people and send them back to prison even if they’re not doing anything wrong. Thus probation is a substantial portion as bad as being in prison, and then you probably fail and go to prison anyway.

In this model, the entire system is in bad faith here, and they’re offering you probation to avoid a trial knowing they’ll then be able to screw you over later.

And that this is true to the extent that realistic criminals often turn down probation if the alternative is a shorter overall sentence.

My quick search said about 54% of those paroled fail and get sent back, but a commenter says parole is actually the first thing, they want you to succeed and not have to imprison you, whereas straight probation off a plea bargain is the third thing, where they want to avoid a trial then imprison you anyway.

This is offered as the reason people don’t accept GPS monitoring. If you have a GPS monitor, then it is easy to notice you’ve technically violated the terms, and since everyone involved wants to violate you, that means you’re doomed.

Scott Alexander interprets ‘someone on probation got two years for driving the speed limit because it was technically a violation in context’ as outrageous strictness. Which it is, but the two years was really for the original crime the guy got probation for, not for the technical violation. So it’s more like the system lying and being evil, rather than it being too strict per se.

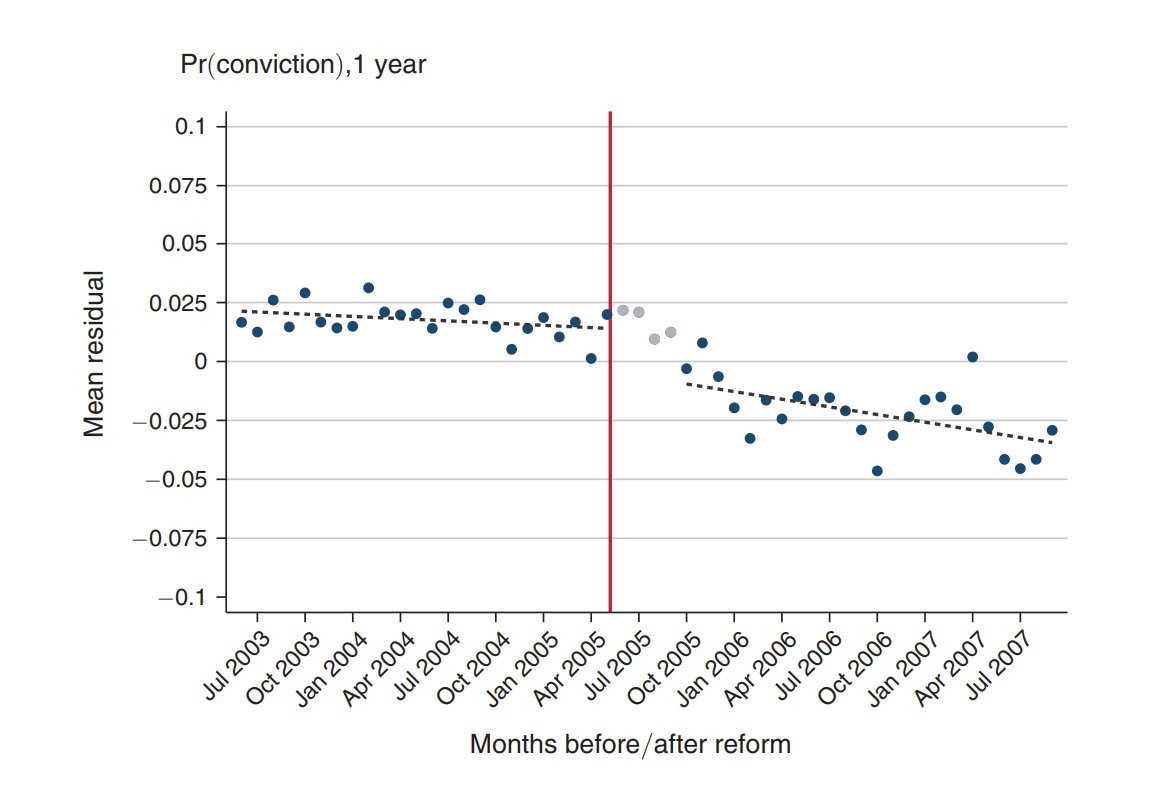

When Denmark expanded its database of genetic information, crime rates fell dramatically.

Cremieux: The magnitude of this effect is fairly staggering, too: the elasticity is -2.7, meaning that for every 1% increase in the number of criminals in the database, recidivist crime falls by 2.7%. That’s enormous (-42% to the 1-year recidivism rate), and that’s simply due to the fear that, if you commit a crime again, you’ll now be detected doing it.

This is insanely strong evidence that the prediction of being caught is a huge driver of recidivism rates. A 42% drop in 1-year recidivism is crazy. The rate of being caught went up, but it didn’t go up that dramatically.

America saw similar results, although smaller.

Cremieux: Several American states have mandated that prisoners need to provide their DNA to the authorities, and after those programs came into place, there was a large reduction in the odds of recidivism for affected criminals.

This result is highly significant for violent offenders, but less so for property offenders, with mandates reducing violent recidivism by a whopping 5.7pp over five years, or in percentage terms, they reduced violent criminal recidivism by 21%.
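As a quick sanity check on how those two figures relate, here is a small Python sketch. It assumes the 5.7pp and the 21% describe the same drop in the same five-year violent recidivism rate; the function name is mine, and the baseline it returns is implied by that assumption rather than quoted from the study.

```python
def implied_baseline(drop_pp: float, relative_drop: float) -> float:
    """Baseline rate (in percentage points) implied by an absolute drop
    in percentage points and the same drop expressed as a fraction of
    the baseline."""
    if relative_drop <= 0:
        raise ValueError("relative_drop must be positive")
    return drop_pp / relative_drop


# 5.7pp absolute reduction, described as a 21% relative reduction.
baseline = implied_baseline(5.7, 0.21)
after = baseline - 5.7
print(f"implied baseline: {baseline:.1f}%, after mandate: {after:.1f}%")
```

Under that assumption, the two numbers fit a baseline five-year violent recidivism rate of roughly 27%, falling to a bit over 21%.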

What we need to do is a study of criminals’ perceptions of likelihood of being caught with and without the DNA database, and in general, and how those perceptions predict their actions, so we can better establish the effect size here and what else impacts it.

There are obvious libertarian objections to a universal DNA database. But if the upside was a 42% overall drop in crime, potentially a lot more since this would then change norms and free up police for other cases? It is very hard for me to make the case for not doing it.

The Biden Administration attempted to move marijuana to Schedule III. That would have helped somewhat with various legal and tax problems, but would still have left everything a mess. It seems the clock will run out on them, instead, as per prediction markets.

I am libertarian on such matters, but also I am generally strongly anti-marijuana.

Matthew Yglesias: I like that “getting high a lot is probably a bad idea” has become a contrarian take.

Andrew Huberman: People have been experiencing the incredible benefits of reducing their alcohol intake & in some cases eliminating it altogether. I believe cannabis is next. For improving sleep and drive.

Note: I’m not anti-cannabis for people that maintain high productivity and mental health.

I don’t think marijuana is typically good for those that use it, especially for those that use it a lot. I believe you are probably better off using it never (or hardly ever but for humans never is easier) than trying to figure out the right times when you can get net benefits. There are of course exceptions, especially certain types of creatives, and also medical reasons to use it.

Similarly to what I concluded about sports gambling, I don’t think we should make it effectively criminal, but I believe that there needs to be some friction around marijuana use.

Another way to think about this is, you should ensure that your formerly illegal activities have at least as many regulatory barriers as your pro-social activities like selling food or building a house.

Steve Sailer points out that one important risk of legalizing vice is that legalization vastly increases the quality of marketing campaigns, the way that legalizing sports gambling made ads for it ubiquitous. In addition, it increases the reach and options available, grows the market and shifts norms.

So yes, I think some form of ‘soft illegal’ or semi-legal is the way to go here.

If you go fully legal, you get what we’ve been seeing in New York City. There is massive overuse, and a huge percentage of new leases on New York City storefronts have been illegal cannabis shops getting busted. They don’t cause trouble, but that leaves less room for everything else, and often the city actively smells of the stuff.

Brad Hargreaves: And so the process of shutting down all the unlicensed dispensaries in NYC begins. While they won’t be missed, it’s worth ruminating on the tremendous failure of governance that got us here.

Over the past ~three years, these weed shops were a big % of all retail leases in Chelsea.

This all started in 2021, when the State of NY legalized recreational marijuana. Per the law, weed could only be sold through state-licensed shops. A new regulatory agency was created.

These licenses would be provisioned through a complex process that prioritized (among other things) people with past drug convictions.

But the process of issuing these licenses was slow and the process onerous. It dragged on for years with no licenses issued.

In the meantime, cops stopped enforcing any laws around selling weed (among others). As enforcement waned, sneaky attempts to sell weed from bodegas transformed into full-blown unlicensed weed emporiums.

Entrepreneurs dumped 💰💰 into retail build-outs.

On a stretch of 8th Avenue in Chelsea, Manhattan, a majority of all new retail leases signed were for weed shops.

Some of them were really nice! The one at my corner – across from the PS 11 elementary school – looked like a candy store.

Of course, the entrepreneurs who had actually gone through the licensure process got hosed

Not only were they on the hook to pay 10x markups for retail build-outs to the state construction agency they were forced to use, they had huge, unfettered competitors.

So in the most recent (2024) state budget, the State finally gave New York City expanded enforcement powers and explicit instructions to shutter unlicensed dispensaries. Finally, it’s happening.

Now, all those new retail spaces that opened? Permanently closed.

Now there’s going to be a flood of new, vacant retail hitting the market in Manhattan, particularly in neighborhoods like Chelsea and the Village.

All just because the state couldn’t get its act together and police let the issue fester for three years.

Seriously, it was totally nuts. Still is, although I report things are improving, and Eric Adams did indeed shut down a lot of the illegal places, eventually. Nature is healing.

Here is a NYT article about New York’s disastrous legalization implementation. There is a tension between complaints about the botched implementation, of which there can be no doubt, versus worry that marijuana is too addictive and harmful to be fully legalized even if implemented well.

Similarly, British Columbia is recriminalizing public drug use partway through a planned three-year decriminalization trial, amid growing reports of public drug use.

Alex Tabarrok does the math on an otherwise excellent Reuters exploration of Fentanyl production, and is suspicious of the profit margins. A gram of cocaine, he says, costs about $160 on the street and $13-$70 trafficked into the USA to sell, whereas Reuters claimed to have gotten precursors for 3 million tablets of Fentanyl for only $3607.18, which Alex estimates would sell for $1.5 million. That’s crazy even if you assume they missed some ingredients, although there are other costs of production.

One could model this as the cost of precursor goods being purely in risk of punishment during the process, with the dollar costs being essentially zero. Actually making the drug is dirt cheap, but the process of assembling and distributing it carries risks, and the street price (estimated at $0.50 per tablet) is almost entirely that risk plus time costs for distribution.

If you fully legalized Fentanyl, putting it into Category 1, the price would go to almost zero, which would not be pretty.
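To make the margin comparison concrete, here is a rough sketch of the arithmetic using only the figures quoted above. The comparison is not apples to apples: the cocaine number is a trafficked-into-the-country cost, while the Fentanyl number covers precursors only and ignores labor, equipment and distribution. The function and variable names are mine, purely for illustration.

```python
# Figures as quoted in the text (Reuters, via Alex Tabarrok's estimate).
PRECURSOR_COST_USD = 3607.18          # precursors for the whole batch
TABLETS = 3_000_000                   # tablets that batch could make
STREET_PRICE_PER_TABLET_USD = 0.50    # street price estimate from the text


def markup(revenue: float, cost: float) -> float:
    """Return revenue as a multiple of cost."""
    return revenue / cost


# Fentanyl: street revenue versus precursor cost only.
fentanyl_revenue = TABLETS * STREET_PRICE_PER_TABLET_USD
fentanyl_markup = markup(fentanyl_revenue, PRECURSOR_COST_USD)
precursor_cost_per_tablet = PRECURSOR_COST_USD / TABLETS

# Cocaine: $160 per gram on the street versus $13-$70 trafficked in.
cocaine_markup_low = markup(160, 70)
cocaine_markup_high = markup(160, 13)

print(f"Fentanyl street revenue: ${fentanyl_revenue:,.0f}")
print(f"Fentanyl markup over precursors: {fentanyl_markup:,.0f}x")
print(f"Precursor cost per tablet: ${precursor_cost_per_tablet:.4f}")
print(f"Cocaine markup: {cocaine_markup_low:.1f}x to {cocaine_markup_high:.1f}x")
```

Under these numbers the precursors are a fraction of a cent per tablet, which is the sense in which the street price is almost entirely risk and distribution rather than ingredients.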

Here is some good news and also some bad news.

Matt Parlmer: There was just a fare compliance check on my bus in SF, they kicked off ~half the passengers, wild shit.

So the punishment if you are caught riding without paying is you get off the bus?

That doesn’t work. If I knew for sure that this is all that happens when you don’t pay, either you’re checking a huge portion of buses or I see no reason to pay the fare, given you’re not going to take this seriously.

New York City has the same problem with fare evasion. Suppose you get caught. What happens? Nothing. Their offer is nothing. All you get is a warning. Then the second time you are caught you owe a $100 fine with a $50 OMNY credit, so a $50 fine if you then go back to paying. This gives you a very clear incentive to evade the fare until you’re caught at least twice. Most people will never get caught even once. The norms are rapidly shifting, with respectable-seeming people often openly not paying, to the point where I feel like the pact is broken and if I pay then I’m the sucker.
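The incentive problem can be sketched as a break-even calculation. This is a toy model, assuming a pure dollar comparison: evading costs the catch probability times the net penalty, paying costs the fare. The $2.90 fare is my assumption and does not come from the sources above; the $0 and $50 penalties are the ones described in the text.

```python
import math


def break_even_catch_rate(fare: float, net_penalty: float) -> float:
    """Per-ride catch probability above which paying beats evading.

    Evading costs p * net_penalty in expectation; paying costs `fare`.
    With no monetary penalty, no catch rate makes paying cheaper,
    so the function returns infinity.
    """
    if net_penalty <= 0:
        return math.inf
    return fare / net_penalty


ASSUMED_FARE_USD = 2.90  # hypothetical fare, not taken from the text

# Penalty is being asked to get off the bus (SF), or a warning (NYC, first catch).
no_penalty_rate = break_even_catch_rate(ASSUMED_FARE_USD, 0.0)

# NYC second catch: $100 fine minus $50 OMNY credit, so $50 net.
second_catch_rate = break_even_catch_rate(ASSUMED_FARE_USD, 50.0)

print(f"Break-even catch rate with no penalty: {no_penalty_rate}")
print(f"Break-even catch rate with $50 net penalty: {second_catch_rate:.1%}")
```

In this sketch, even the $50 net penalty only deters if you expect to be checked on more than roughly one ride in seventeen, and that is before accounting for the free first warning.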

John Arnold rides along with the LAPD and offers thoughts. He is impressed by officer quality and training, notes they are short on manpower and the tech is out of date. He suggests most calls involve mental illness or substance abuse, often in a vicious cycle, and often involving fentanyl which is very different from previous drug epidemics.

One policy suggestion he makes is to devote more effort to solving non-fatal shootings, which he says are 50%+ less likely to get solved despite who survives being largely luck and shootings often leading to cycles of retaliatory violence. Compared to what we pay for trials and prisons, the costs of investigation seem low, and the deterrence value of catching more suspects seems high. More strong evidence we are underinvesting in detective work.

If you believe you can hire more police and then they will spend their days solving capital-C crimes like shootings, then it’s hard to imagine failing a cost-benefit test.

How bad can non-enforcement get? London police do not consider a snatched phone, whose location services indicate it is six minutes from the police station and which is being actively used to buy things, to be worth following up on.

I understand why the police in practice mostly ignore burglaries when they don’t have good leads, but when the case has essentially been pre-solved I find this rather baffling. It is impressive how long it is taking for criminals to solve for the equilibrium.

Your periodic reminder that some particular laws and methods of enforcement can be anti-poor, but not only is enforcing the law not anti-poor, the poor need us to enforce the law.

Sometimes I wonder how California still exists. After 6 years, Scott Wiener finally ends the rule that to enforce the law against breaking into cars you have to ‘prove’ all the doors were locked. If the doors might not have been locked, how does that make it okay? With crime this legal in so many ways we are fortunate that there are not (vastly more) organized bands going around taking all the things all the time.

Charging domestic abusers with crimes reduces likelihood of violent recidivism, whereas ‘the risk-assessment process’ had no discernable effect. It makes sense that criminal charges would be unusually effective here. Incentives and real punishments matter. They make future criminal threats credible. They weaken the abuser’s bargaining power. They give an opportunity to make changes, perhaps even end the relationship. There is also a distinction between most crimes, where putting someone in jail with other criminals leads to them networking on how to do more crime and forming obligations to do more crime, and domestic abuse, where that doesn’t make much sense.

It has always been very bizarre to me the things we tolerate in prisons, including letting them be effectively run by criminal gangs, and normalizing rape, but also commercial exploitations like the claim that some jails are preventing inmates from having visitors so Securus Technologies can charge over a dollar a minute for video calls instead.

Perhaps we should have our prisons not be incubators of criminality, where inmates are isolated from any outside influences and left to largely govern themselves?

Even if prison isn’t purely rehabilitation, it shouldn’t be actively the opposite of that.

Abstract: 45% of Americans have an immediate family member who has been incarcerated, and over $80 billion is spent each year on the public corrections system. Yet, the United States misses a major opportunity by focusing most rehabilitative programs right around the time that individuals are released from carceral facilities, instead of intervening throughout the period of incarceration. In this paper, we study an in-prison intervention targeting a costly aspect of life for incarcerated individuals—audio and video calls.

We evaluate the impact of free audio and video calls utilizing the staggered roll out of this technology across Iowa’s nine state prisons between 2021 and 2022. We find evidence of a 30% reduction in in-prison misconduct, including a 45% decline in violent incidents and threats of violence. Our results indicate potentially large returns to prison communication policy reforms that are currently underway across the U.S.

Paul Novosad: These are billion dollar bills on the sidewalk. Crime and recidivism are so costly to communities. Just having the human decency to let incarcerated people talk to their relatives reduces violent behavior A LOT. It’s also just a basic human decency.

Gunnar Blix: The whole mindset switch from “punishment” to “rehabilitation” (for the 95% of cases where it makes sense) is huge. Growing up in Norway, I remember when the maximum sentence was reduced to 21 years (effectively 14). And “prison” in Norway is not what you see in American films.

As in, if you give prisoners free audio and video calls, which costs you approximately nothing, you see dramatic improvements in prisoner behavior, and a far less toxic environment. The direction makes sense, the magnitude of the change is stunning. I don’t want to trust a single study, but also there is little downside here – introduce this in a bunch more places, and take advantage of the natural experiment, then go from there.

If we want to do punishment for punishment’s sake, we have plenty of options for that. Cutting people off from the outside seems like one of the worst ones, especially since actual crime bosses can already get cell phones anyway. It also gives you powerful leverage – you default to calls for free, and have the power to take them away.

Instead, we do the exact opposite. Not only are calls not free, we actively limit contact with the outside, especially the influences that would be most positive, in the name of extracting tiny amounts of money.

Whenever I hear about such abuses, two things stick in my mind: The absurdly high amount we pay to keep people in prison, and the absurdly low amounts of money over which we torture the inmates and their families. I don’t ‘have a study’ but it seems obvious that allowing more outside communication would be good for rehabilitation, as well as being the right thing to do.

On a related note: Almost all prison rape jokes are pretty terrible. We have come a long way on so many related questions, yet somehow this is still considered acceptable by many who otherwise claim to champion exactly the reasons this is not okay. If we are going to ruin comedy, let’s do it where it is maximally justified.

Would criminals largely be unable to handle hypotheticals? If they were, asks River Tam, then how is it they can handle sports hypotheticals with no trouble, as you can demonstrate by asking them if LeBron would beat Jordan one-on-one. This seems entirely compatible with the same people being unable, in practice, to follow other hypotheticals that don’t match their own experiences. They have learned that such tactics are in practice often a trap In Their Culture, so they are having none of it. That doesn’t mean they can’t or don’t think about hypotheticals.

A well-known result is that releasing a Grand Theft Auto game, or other popular entertainment product, short term lowers crime as criminals consume the entertainment rather than commit crime (paper). Whereas Pokemon Go was deadly, since it encouraged people to go outside. This is of course a deep confusion of short run versus long run effects. What matters are the long run impacts, which this fails to measure. Presumably we all agree that ‘go for a walk’ is a healthy thing, not a deadly thing, even though today it would be ‘safer’ to stay home on a given day.

From Vital City, the case that violence tends to be a crime of passion, and jobs and transfer programs and prosperity help with property crime but have little impact on violence, versus the case that investments in things like summer jobs, neighborhood improvements and services reduce crime, including violent crime.

My instinct is to believe the second result directionally, while strongly doubting the magnitude of the intervention studies quoted. There is not as big a contradiction here as one might think. Violent crimes are typically crimes of passion, but even among those incidents, the circumstances that cause that, and the cultural conditions that cause that, are not randomly distributed. You can alter that.

Unfortunately anti-poverty programs as such do not seem to move the needle on that in the short term. In the short term, my guess is that if you want to act at scale you must shift the social conditions more directly linked to violence triggers, or teach people various variations on CBT and help them alter their behavior.

As for the first result, I can believe that the correlations and intervention effects are smaller, but I find the general claim of ‘prosperity and employment do not decrease violence’ obviously absurd, especially when stated as ‘poverty and unemployment do not lead to increased violence.’

How should we think about homelessness, and the fear of becoming homeless? Most of those who are long term homeless, as I understand it, have severe problems, but that doesn’t mean the threat of this isn’t hanging over a lot of people’s heads all the time, especially with the decreasing ability to turn to family and friends. Also people correctly treat the nightmare as starting with the threat becoming real and close, that’s already bad enough. I think this is part of why a lot of people are under the impression that a huge percentage of Americans live ‘paycheck to paycheck’ in ways that put them ‘one paycheck away’ from homelessness and total collapse, in a way that seems clearly untrue – a lot of people are one paycheck away from being too close to the edge and starting to panic, or having to tap resources and options they’d rather not tap, which sucks, but that’s different from actually falling over that edge.

From Scott Alexander’s report on the Progress Studies conference, it turns out forcing people to pay the fare instantly transformed SF’s BART trains to be safe and clean? Although there is skepticism given how few stations even have the new gates yet.

Tim Galebach: A big influencer, with a lot of online haters, once told me that gating any piece of content at > $1 screened out nearly all haters.

Visakan Veerasamy: and the haters who are willing to pay to show up are quite entertaining, they’re more high-effort haters who actually do the reading etc.

The government of San Francisco has historically been the epitome of the pattern of putting up (Category 2) barriers to productive and pro-social activities like doing business and building housing, but giving de facto (Category 1) carte blanche to a variety of activities we would like to see happen less often.

Thus, San Francisco has some issues with drugs and homelessness, an ongoing series.

Sam Padilla: Holy fucking shit.

I just walked from Soma to Hayes Valley through Market St and easily sketchiest walk of my life.

And dude I grew up in Brazil and Colombia. I’ve walked real sketchy shit before. Doesn’t even compare.

Felt like a scene straight out of the walking dead. Sad.

I walked by a corner where there were easily 100 people, many visibly high. Probably a shelter.

Right then, an unoccupied FSD Waymo car drove by. It was the first time I saw one without a human at the wheel.

And it just hit me. San Francisco is the epitome of a tech dystopia.

This can’t be the future of tech.

It’s not only crime. What if it’s me, too?

Noah Smith: It wasn’t until I moved to San Francisco that I realized that the reason rich people live on hills is only slightly about the views, and mostly about the fact that thieves don’t like to walk uphill.

When calculating crime in San Francisco, you also have to adjust for dramatically lower foot traffic, which in downtown is still 70% below 2019 levels. What matters in practice is how much a given person would be at risk, so the denominator matters.

Meanwhile, the city does enforce the law against things like hot dog vendors, or those who violate building codes, while (at least until recently?) not enforcing it against vendors of illegal drugs. The only businesses you can reasonably open are the illegal harmful ones. That does not seem likely to go well over time.

Many nonprofits in San Francisco seem increasingly inaccurately named.

Swann Marcus: lol this is like the third SF non-profit that got in trouble for this exact thing in the last three months.

SF District Attorney: The SF District Attorney’s Office announced today the arrest of Kyra Worthy, the former executive director of the nonprofit SF SAFE, on 34 felony charges related to misappropriation of public money, submitting fraudulent invoices to a City department, theft from SF SAFE, wage theft from its employees & failing to pay withheld employee taxes + writing checks w/ insufficient funds to defraud a bank.

Ms. Worthy is accused of illegally misusing over $700,000 during her tenure w/ SF SAFE.

Ms. Worthy was arrested today by SFDA Investigators.

Because of SF SAFE’s relationship w/ SFPD, SFPD asked the SFDA Office to undertake this investigation. The SFDA Office executed 25 search warrants, obtained hundreds of thousands of pages of financial & business records + interviewed more than two dozen witnesses.

These charges are a result of an on-going investigation by the San Francisco District Attorney’s Office Public Integrity Task Force.

Anyone with information is asked to call the Public Integrity Task Force tip line at 628-652-4444. You may remain anonymous.

I constantly hear that various nonprofit organizations working with the city of San Francisco are, rather than trying to help with social problems, very much criminal for-profit enterprises working to warp public policy to increase profits.

Is the underlying situation quite bad? Periodically I see people say yes.

Cold Healing: I thought “San Francisco is dangerous” was a fabrication before I went, paranoid tech bros being odd about homelessness. But there are streets where more than 50 percent of the people in public are homeless and actively using drugs. It is difficult to write about this without seeming cruel, but I have never seen anything like it in America.

Note Able: I was in San Francisco less than eight hours in 2017. Being from a small Southern town, I remember thinking, “I cannot believe this is a major city in a first-world country.” When I got home, I researched the city to see if my experience was anecdotal. It was not.

My experiences have not been this, but I haven’t been in the areas people claim this is happening. So yes, most of the city blocks were never like this, but that’s not good enough. The good news is things seem to be improving now.

One option is to arrest people who commit crimes. Another is to close stores?

SFist: Convenience stores in a 20-block area of the Tenderloin will not be allowed to stay open between midnight and 5 a.m. for the next two years, as City Hall says those corner stores “attract significant nighttime drug activity.”

Eoghan McCabe: Closing local family-owned businesses and reducing their income instead of policing the streets like a first-world city 🥲

Emmett Shear: Jane Jacobs is rolling in her grave. Eyes-on-the-ground, particularly merchants, are what keep cities safe and vibrant. Maybe try arresting the drug dealers instead of the shopkeepers?

Danielle Fong: Instead of stopping the actual problems—open drug sales, drug use, littering, petty and violent crime, encampments, and trash—they’re stopping legitimate businesses from operating.

OK, sure, maybe this will help a little bit. Just starve the addicts out! But, maybe, just maybe, you should think about what you’re doing.

This is the pure version of enforcing the law against pro-social legible activity because you refuse to do so against anti-social activity, and thinking you’re helping.

There was a dispute a while ago about cleaning up a particular street that happens to be where Twitter lives. Very confident people were saying it was safe and nice, others were very confident it is a cesspool. Both would know. What was up with that?

Jim Ausman: Elon Musk said he was leaving San Francisco because roving gangs of violent drug addicts in front of his business were terrifying him and his employees. This was alarming to me because the last time I had been there it was pretty nice. I decided to take a look myself.

I walked around the neighborhood and took some photos. I used to work around Square in the mid-10s so I am very familiar with the neighborhood. It was lively then and sort of emptied out during Covid, like many places. It’s actually coming back pretty nicely. All the empty spaces from a year ago have filled back up.

The Market – a posh grocery store – is still there and doesn’t really lock up its goods, which is what you might expect if you believed the doom loopers. Looks like there are fewer restaurants in the back than there used to be. Not as many people are coming into the office daily.

This post really triggered the Elon-stans. People told me all kinds of crazy stuff. “Go there at night” “go north a block” “go south a block”

Tyler Art: Come on Jim. Stop gaslighting.

Steady Drumbeat: I’m sorry man, but this just isn’t accurate. I lived there for a long time, walked this intersection multiple times a day. It’s a really messed up part of the city. Have you ever been chased around Berkeley by a guy with a 4×4? I was, right in the TL!

Danny X: I live on 9th at the avalon building, spitting distance from these photos. I saw a dead body IRL for the first time because I chose to live here. Show GoGo market. Show the parking garage entrance. This is as clean as it gets. As soon as the day begins to end, it turns into a nightmare.

Elon Musk: A couple I know finally moved from SF, because there was a dead body in front of their house. They called 911 and no one even showed up until the next day.

Squirtle: they’re actively moving the homeless away from market and 9th now, and cleaning it up.

This is 100% the city reacting to Elon tweets for reasons I won’t speculate on. This area isn’t safe and clean and it hasn’t been for over a YEAR. This is just fing APEC all over again.

The guy who posted images yesterday did it to with the intention of baiting people into saying BUT WHAT ABOUT THIS STREET AT NIGHT and by the time that comes around it’s empty and fucking clean and they get the nice shots to say “I TOLD YOU SO!”

Roon: I live 3 blocks from here it’s been safe for more than a year and a half and essentially clean since november. I straight up don’t believe the story about gangs violently doing drugs or whatever outside X or whatever.

Beff Jezos: Idk man the times I visited X HQ area it was pretty rough.

The good news is that this was ages ago. By ages I mean months. Everything escalates quickly now.

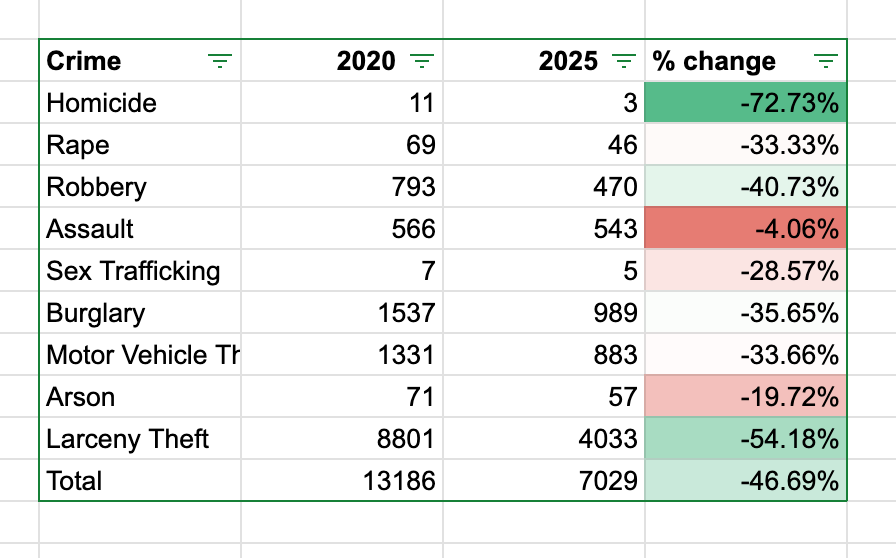

At this point, I’ve seen several claims that in a matter of months they’ve managed to clean up San Francisco. This includes that reported property crimes dropped by 45%, which of course has two potential causes that imply very different conclusions.

Here’s some actually hard evidence that the San Francisco cleanup is working, presented in the absolute funniest way possible.

Joey Politano: the headline is perhaps one of the greatest examples of media’s negativity bias in history.

Rohin Dhar: Auto glass repair companies in San Francisco are apparently seeing a drastic decline in business:

“We used to get 60 to 80 calls a day,” said Hank Wee, manager of In & Out Auto Glass

“Now we’re lucky to get 25”

Perfect. We now have a highly reliable car theft index. We used to get 70 calls a day, now we get about 20. Combining this with other sources from both auto shops and other crime stats, we are likely looking at about a 65% drop in car break-ins, although only a 12% decline in whole-car thefts.

This also can make us confident that other crime statistics are moving due to reduced actual crime, rather than due to reduced reporting of crime.

Michelle Tandler: Crime is down 46% in San Francisco compared to 2020.

In 2020, we had a Progressive DA and Board.

Now we have Moderate leaders. The results are astonishing.

Kelsey Piper: Notably SF produced a huge drop in crime and increase in city safety just by electing moderate Democrats focused on that, they did not have to resort to the extreme Trumpist measures I’m constantly told are our only choice.

Last night I took the BART to Civic Center and walked through the Tenderloin to go meet my wife for a D&D date. It was clean and safe; people were out and about. I remember being told that only right wing authoritarianism could achieve this and Democrats would never.

Ryan Moulton: I’m curious whether there was any meaningful policy change, or if it was just “when the police like the mayor they work harder.”

Kelsey Piper: partially that, partially ‘when the police expect people they arrest to be successfully convicted they work harder’, partially self-reinforcing cycles (once there are more people on the streets, that further drives down crime)

I also think fare gates that cannot be hopped are a huge deal for the safety of the train system. people who are disoriented, intoxicated, aggressive and violent are unwilling/unable to pay $3 so if they can’t hop the gates, boom, trains are completely safe and comfortable.

Deva Hazarika: I’m surprised how effective the fare gates seem to be given how easy it is for a person to run in behind someone (tho yeah obv that itself lot more friction than just hopping gate)

Kelsey Piper: yeah I wasn’t expecting such a big difference but it’s very notable!

Vasquez runs for Portland District Attorney on Law & Order. He won 54-46.

Sacramento warns Target it would face fines if it kept reporting thefts. That sounds absurd, but Jim Cooper, Sacramento County Sheriff, actually has a good response.

That response is: If you are not allowing the police to enforce the law, the least you can do is stop whining about it.

Jim Cooper (Sheriff of Sacramento) (Nov. 2023): I can’t make this up. Recently, we tried to help Target. Our property crimes detectives and a sergeant were contacted numerous times by Target to assist with shoplifters, mostly known transients. We coordinated with them and set up an operation with detectives and our North POP team.

At the briefing, their regional security head told us we could not contact suspects inside the store; we could not handcuff suspects in the store; and if we arrested someone, they wanted us to process them outside, behind the store, in the rain.

We were told they did not want to create a scene inside the store and have people film it and post it on social media. They did not want negative press. Unbelievable.

Our deputies observed a woman on camera bring her own shopping bags, go down the body wash aisle, and take a number of bottles of Native-brand body wash. Then she went to customer service and returned them! Target chose to do nothing and simply let it happen. Yet, somehow, locking up deodorant and raising prices on everyday necessities is their best response.

We do not dictate how big retailers should conduct their business; they should not dictate how we do ours.

This is so insane. How are you going to deter crime if you literally are afraid to have anyone spreading the word that those who rob your stores might face punishment?

I hope we have now reached the point where ‘shoplifters arrested in your store by police’ is seen as a good thing?

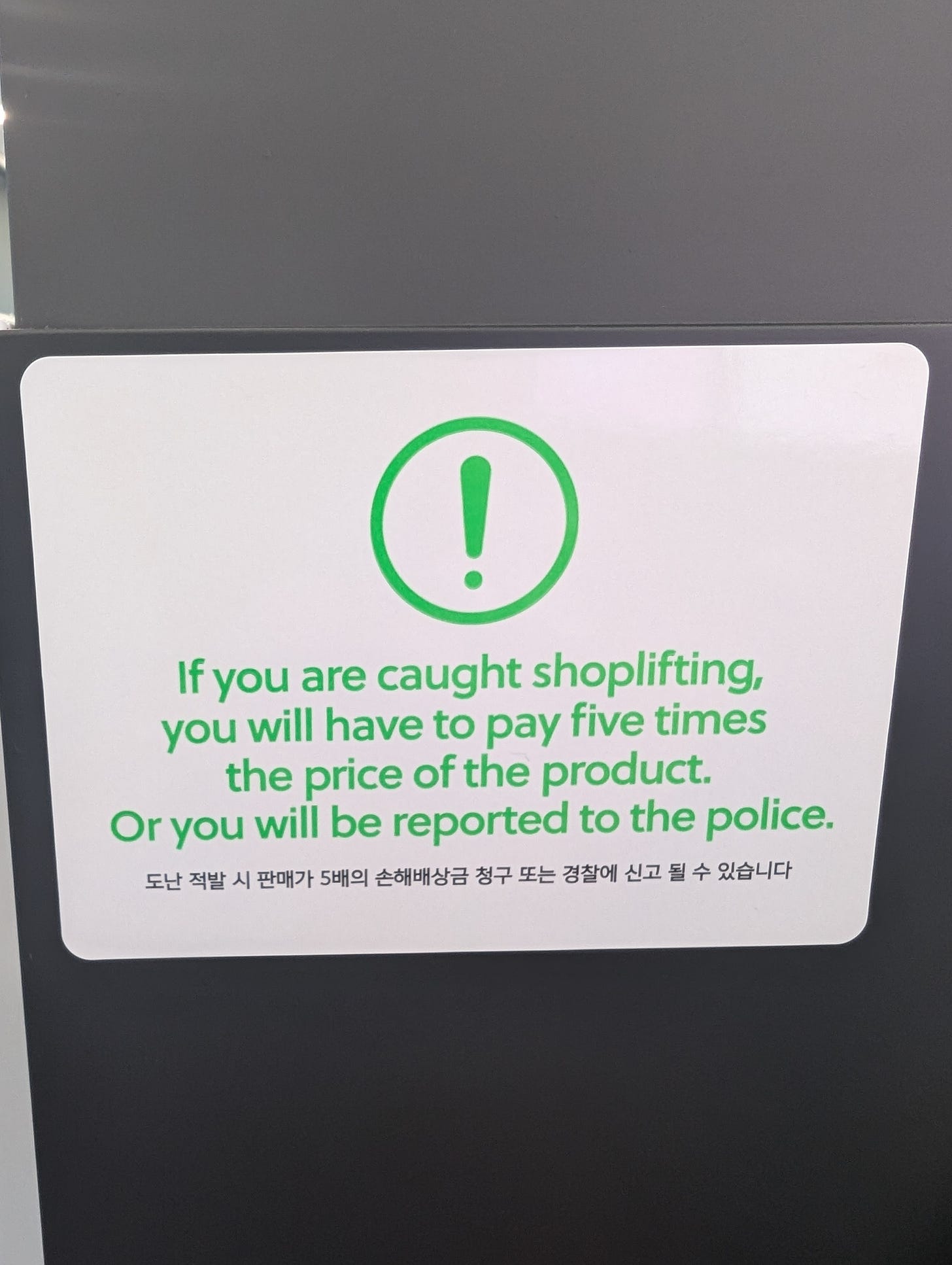

This is an interesting gambit, fine the shoplifters directly or else.

Bryan Caplan: From a reader:

Spotted this in Olive Young (a cosmetics store in Seoul).

Should this be normalised? I feel allowing Private businesses to ask for payment in exchange for non reporting criminals is something you’d love but the general public would dislike (as where does it end? Do you run CCTV blackmail schemes for muggers? Or is it only the victim who can ask for the payment?).

Also it is written in English, clearly the criminals are often not Korean, perhaps East Asia experiences more immigrant crime as the baseline is so low?

Jared Walczak: I fear it would increase casual (not organized) shoplifting because the stakes are substantially lowered. It becomes something more akin to deciding to “risk” going ten minutes over on a 2-hour meter rather than a stark line you simply can’t cross.

TakForDetElon: This policy wouldn’t work in San Francisco. Shoplifter would just say “call the police”.

Indeed, this only works if calling the police is worse than paying the fine. If the police wouldn’t do anything, then the threat is empty.

The bigger issue is that if the chance of being caught is under 20%, or people think it is, then all you are doing is minimizing their risk. And indeed, it is widely believed that 5% or less of shoplifting attempts are caught. One can even see people treating this as an invitation as noted above – shoplifting is now a game, win one or lose five, not a hard line.
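The ‘win one or lose five’ framing can be made explicit with a small expected-value sketch. The 1 and 5 come from the phrasing above; everything else, including the function names and the flag, is my own illustrative assumption. There are two readings: if getting caught means you lose the goods and pay five, break-even is a 1-in-6 catch rate, and if you effectively keep the gain and only pay five when caught, break-even is the 20% figure.

```python
def expected_value(p_caught: float, gain: float = 1.0, fine: float = 5.0,
                   keeps_goods_if_caught: bool = False) -> float:
    """Expected payoff of one shoplifting attempt, in units of the item's value.

    `keeps_goods_if_caught` is a hypothetical switch between the two
    readings of 'win one or lose five' described in the lead-in.
    """
    win_probability = 1.0 if keeps_goods_if_caught else 1.0 - p_caught
    return win_probability * gain - p_caught * fine


def break_even_probability(gain: float = 1.0, fine: float = 5.0,
                           keeps_goods_if_caught: bool = False) -> float:
    """Catch probability at which the attempt has zero expected value."""
    if keeps_goods_if_caught:
        return gain / fine
    return gain / (gain + fine)


strict_break_even = break_even_probability()
loose_break_even = break_even_probability(keeps_goods_if_caught=True)
value_at_five_percent = expected_value(0.05)

print(f"Break-even catch rate (lose goods and pay fine): {strict_break_even:.1%}")
print(f"Break-even catch rate (keep goods, pay fine): {loose_break_even:.1%}")
print(f"Expected value per attempt at a 5% catch rate: {value_at_five_percent:.2f}")
```

Either way, at the widely believed 5% catch rate the attempt has clearly positive expected value in this toy model, which is the sense in which the fine alone only prices the risk rather than deterring it.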

Of course, they are not quite promising to make this offer to everyone, merely to do one action or the other.

The obvious ethical objection is that those who can pay and really should therefore have the police called will pay, and those who cannot pay and thus have some real need get the police called. And the worries about where does it end.

Where I do think this is good is as a way to use limited police resources to get full deterrence. You can’t call the police every time, it’s too expensive for everyone. But you can credibly threaten to call the police if the conditional means you rarely have to actually call them. The threat is stronger than its execution, but to make that work the threat needs to be credible.

The other equilibrium is that shoplifters are essentially not prosecuted at all because there are too many of them, and this seems to be a case of many jurisdictions fing around and finding out.

Lawrence Newport (talking about UK): Shoplifting is up in 97% of constituencies [since 2022]. Some have almost doubled in a year.

Reality is worse as most go unreported.

There’s been a complete collapse in the ability/availability of police to catch or stop this.

In many cases shoplifting has become essentially legal.

Then there’s the statistics on bike theft, which are even more dismal, and a rather clear test example.

Lawrence Newport: We left a bike with GPS trackers somewhere we assumed would be safe.

Right outside Scotland Yard.

It was quickly stolen.

Police did not check security camera footage, could not follow a “moving” GPS signal or one at an address.

The government has given up, and police cannot focus on the rampant theft.

This only changed when The Telegraph called the Metropolitan Police, and suddenly . . . they are now going to check the security camera footage.

Crush Crime: CSEW estimates 207,000 bikes were stolen last year.

Only 2 percent of cases resulted in a suspect being charged.

Theft is legal in Britain.

The government must be made to act, or else this will not stop.

Vinesh Patel: I owned a small chain of fast food outlets once, teenage gangs would steal thefts and even assault staff. We supplied cctv footage, never received a response. I wrote a letter of complaint to the Police Lambeth. They had lost the CCTV footage & asked for it again 6 months later.

A simple heuristic needs to be ‘if you provide me with video evidence of the crime I will at least then actually look into it.’ If not, well, we should be thankful it’s taking so long to solve for the other equilibrium.

Whereas this is your reminder that it seems in the UK the police claim they are actively not allowed to act on location data even for a stolen phone that is still on, let alone CCTV footage? Even with everything else I know, I can’t even at this point. It is perhaps amazing that London isn’t Batman’s Gotham.

Another solution to the equilibrium, San Francisco edition, since the previous solution clearly wouldn’t work:

Here’s another, via MR: staging armed robberies so the ‘victims’ can get immigration visas.

New meta-analysis finds (draft, final study) publication bias in studies that measure the effect of lead on crime, leading to large overstatement of effect sizes. Note that even if the new result is true, lead is still a really big deal and a large part of the story.

Does lead pollution increase crime? We perform the first meta-analysis of the effect of lead on crime, pooling 542 estimates from 24 studies. The effect of lead is overstated in the literature due to publication bias. Our main estimates of the mean effect sizes are a partial correlation of 0.16, and an elasticity of 0.09. Our estimates suggest the abatement of lead pollution may be responsible for 7–28% of the fall in homicide in the US. Given the historically higher urban lead levels, reduced lead pollution accounted for 6–20% of the convergence in US urban and rural crime rates. Lead increases crime, but does not explain the majority of the fall in crime observed in some countries in the 20th century. Additional explanations are needed.

Do I feel this pain? Oh yes I feel this pain.

Jane Coaston: Law & Order when you actually know something about the legal subject at hand is a uniquely horrifying form of torture.

The entire premise of this episode would be moot because of Section 230, as I told both the television and my dog.

“We’re going to arrest the guy who runs this forum because people posted the address of a guy on that forum and a mentally ill woman went and killed that guy and charge him” this would last like ten seconds in court.

Billy Binion: This is how I feel watching Ozark!

Aella estimates (in rather noisy fashion) that 3.2% of active, in-person sex workers in America are actively being sex trafficked. As she notes, that is a lot. That probably would then translate to a lot more than 3.2% of encounters.

This seems like a strong argument that you have an ethical obligation, as a potential client, to ensure that this isn’t happening.
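
The step from 3.2% of workers to ‘a lot more than 3.2% of encounters’ presumably rests on trafficked workers seeing more clients than the average worker. Here is a minimal sketch of that arithmetic. The multiplier `k` is purely a hypothetical input for illustration, not a number from Aella’s estimate, and `encounter_share` is just a name I made up.

```python
def encounter_share(worker_share: float, k: float) -> float:
    """Share of encounters involving a trafficked worker.

    worker_share: fraction of workers who are trafficked (0.032 in the estimate).
    k: HYPOTHETICAL ratio of encounters per trafficked worker to
       encounters per non-trafficked worker (an assumption, not data).
    """
    trafficked = worker_share * k          # encounters from trafficked workers
    everyone_else = (1.0 - worker_share)   # encounters from all other workers
    return trafficked / (trafficked + everyone_else)


if __name__ == "__main__":
    # k = 1 reproduces the worker share; larger k pushes the share up.
    for k in (1.0, 2.0, 3.0, 5.0):
        print(f"k={k}: {encounter_share(0.032, k):.1%} of encounters")
```

If trafficked workers have the same number of encounters as everyone else, the share stays at 3.2%. Any multiplier above one pushes it higher, which is the sense in which the per-encounter risk could be well above the per-worker figure.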

Story of a very well-executed scam built on the claim that you failed to appear for jury duty.